Humankind and Ubiquitous Autonomous AI: A Symbiotic or Dystopian Interaction? A Socio-Philosophical Inquiry

Journal of Engineering Research and Sciences, Volume 1, Issue 5, Page # 109-118, 2022; DOI: 10.55708/js0105012

Keywords: Artificial Moral Agents, Amensalism, Artificial Intelligence, Transhumanism, Superintelligence, Technoethics

(This article belongs to the Section Ethics (ETH))


Vidalis, M. A. and Andreatos, A. S. (2022). Humankind and Ubiquitous Autonomous AI: A Symbiotic or Dystopian Interaction? A Socio-Philosophical Inquiry. Journal of Engineering Research and Sciences, 1(5), 109–118. https://doi.org/10.55708/js0105012

Michael A. Vidalis and Antonios S. Andreatos. "Humankind and Ubiquitous Autonomous AI: A Symbiotic or Dystopian Interaction? A Socio-Philosophical Inquiry." Journal of Engineering Research and Sciences 1, no. 5 (May 2022): 109–118. https://doi.org/10.55708/js0105012

M.A. Vidalis and A.S. Andreatos, "Humankind and Ubiquitous Autonomous AI: A Symbiotic or Dystopian Interaction? A Socio-Philosophical Inquiry," Journal of Engineering Research and Sciences, vol. 1, no. 5, pp. 109–118, May 2022, doi: 10.55708/js0105012.

The technological revolution in Artificial Intelligence (AI) and Autonomous Robotics is expected to transform societies in ways we cannot even imagine. The way we live, interact, work, and fight wars will be unlike anything witnessed before in human history. This qualitative research paper examines the effect of these technological advancements on multiple socio-philosophical planes, including societal structure and ethics. AI mismanagement, which we are already beginning to witness, coupled with humankind’s historical ethical infractions, serves as a wake-up call for global action to safeguard humanity; AI ethics ought to be examined through the Social Principle and the Social Contract. A proactive, vigilant stance seems imperative in order to guard against misuse, as in the case of robot-soldiers or armed drones, which constitute a disguised form of amensalism. As technological progress is already interfering with humankind and conscience, and in light of concerns expressed by legal and civil liberties groups, it is imperative to immediately criminalize any research in AI weapons, shumans and the crossbreeding of humans with machines, considering these as crimes against Humanity.

1. Introduction

“The rise of powerful AI will be either the best or the worst thing ever to happen to humanity. We do not yet know which”. Stephen Hawking, 2017.

Most of us in the Western world, including the authors of this paper, grew up reading the comic strips of Buck Rogers and watching Doctor Who, Star Trek and the like, idealizing technological progress as well as admiring the capabilities of the human brain. In our formative years, technology seemed certain to assure an ideal future and a more just world, while enhancing and improving our lives.

However, since then, many questionable developments have been envisioned or realized, such as Artificial Intelligence, brain transplants, autonomous robotics, and transhumanism. Artificial Intelligence and autonomous robots have been used in an ever-increasing variety of applications, positive (ethical) or negative (of questionable ethics), such as autonomous automobiles, electronic payment protection, digital marketing, human resources, big data processing, medicine (e.g., medical imaging and radiology), human companions, bomb detonation, surveillance, warfare, etc.

This qualitative research paper argues that AI, including autonomous robots, and brain transplants may indeed benefit humanity, as attested by their various pragmatic applications; however, the mismanagement of AI, which we are already beginning to witness, coupled with humankind’s historical ethical infractions, serves as a wake-up call for global action to safeguard humanity. Hawking’s now famous quote above presents an opportunity to ensure that AI will remain peaceful, purposeful, and, most importantly, ethical, serving humankind instead of possibly controlling or dominating it, as will be explicated below. It is up to us to determine the direction of AI’s future, eliminating the uncertainty of the dystopian future Hawking entertained as a possible scenario.

Humans have made great strides throughout history, resulting in profound and purposeful inventions, such as electricity, the telephone, the automobile, the X-ray, and the internet, all of which undeniably contributed to our welfare and enhanced our quality of life. Unfortunately, scientists have also concentrated their research on ways to harm humankind, and purposeful inventions have been turned into devastating weapons of mass destruction. Besides the obvious examples of the atomic and nuclear bombs, the list seems endless. Deadly Sarin gas, developed in Nazi Germany, was later employed in the Iran-Iraq war. Chlorine gas was used by the Germans in WW1. “Agent Orange”, a herbicide mixture of 2,4-D and 2,4,5-T whose development drew on earlier research into triiodobenzoic acid as a means of accelerating the growth of soybeans in areas with a short growing season, was employed extensively and in high concentrations by the United States during the Vietnam War over a period of ten years, resulting in countless casualties and birth defects. Last, but not least, landmines abandoned or forgotten since WW2 or other conflicts kill thousands of innocent civilians annually, mostly children (in 2019, fewer than 7,000 people died as a result of landmines) [1]. It immediately becomes apparent that, in order to win a war or change the outcome of a conflict, countries like Germany or the United States have resorted to unethical means, embracing a Machiavellian approach, which of course does not excuse a crime against humanity.

The idiosyncratic perspective of the authors, as partly reflected in the title, as well as a field in its infancy, have made it challenging to locate relevant research, with the exception of [2]–[4]. The author of [2] presents four different scenarios (a. the optimists; b. the pessimists; c. the pragmatists; d. the doubters) regarding future AI progress; although he acknowledges the potentially devastating consequences for humanity, he appears hopeful that scientists will safeguard the relevant research. He expresses his uncertainty regarding possible risks in the following statement:

“What is uncertain is if such an impact will lead to a utopian or dystopian future, or somewhere in between.” Elsewhere he notes with concern: “Whether this dream is a utopian or dystopian future is left up to the reader to decide, not underestimating however, that intelligent machines will eventually become at least as smart as us and a serious competitor to the human race if left unchecked and if their great potential to augment our own intellectual abilities is not exploited to the maximum” [2].

The author of [3] adopts and astutely explicates the argument that self-preservation should be humankind’s first ethical priority. He argues that without humankind, moral argument could not occur (on his assumption that only humans possess complex morality); therefore, “the most fundamental human moral obligation is to avoid extinction” [3]. Jimenez [4], on the other hand, states that humanoid robots with human-like self-awareness AI will use consumer brands as a means of self-expression. He expects these robots to play a prominent role in society, especially in the healthcare, education and relationship sectors, i.e., he foresees increased human-robot interaction [4].

Next, a few terms ought to be defined. Artificial Intelligence (AI) “… or sometimes called machine intelligence, is intelligence demonstrated by machines, in contrast to the natural intelligence displayed by humans and other animals. Some of the activities that it is designed to do is speech recognition, learning, planning and problem solving. Since Robotics is the field concerned with the connection of perception to action, Artificial Intelligence must have a central role in Robotics if the connection is to be intelligent”. The independent High-Level Expert Group on Artificial Intelligence, of the European Commission (E.C.), adopted the following definition of AI: “Artificial intelligence systems are software (and possibly also hardware) systems designed by humans that, given a complex goal, act in the physical or digital dimension by perceiving their environment through data acquisition, interpreting the collected structured or unstructured data, reasoning on the knowledge, or processing the information, derived from this data and deciding the best actions to take to achieve the given goal. AI systems can either use symbolic rules or learn a numeric model, and they can also adapt their behavior by analyzing how the environment is affected by their previous actions. As a scientific discipline, AI includes several approaches and techniques, such as machine learning (of which deep learning and reinforcement learning are specific examples), machine reasoning (which includes planning, scheduling, knowledge representation and reasoning, search, and optimization), and robotics (which includes control, perception, sensors and actuators, as well as the integration of all other techniques into cyber-physical systems)” [5].

Symbiosis, from the Greek ‘Syn+bios’ (meaning ‘together’+‘life’= living together), is “1: The living together in more or less intimate association or close union of two dissimilar organisms (as in parasitism or commensalism). Especially: mutualism; 2. A cooperative relationship (as between two persons or groups)” [6]. The term was adopted by the life sciences in the nineteenth century, specifically by biology and medicine. Symbiosis can be either mutually beneficial, i.e., a cooperative relationship, in which case it is characterized as mutualism, or one organism living off another at the other’s expense, which is characterized as parasitism. Related to this is the concept of commensalism, where members of one species gain benefits while those of the other species neither benefit nor are harmed. Amensalism “is an interaction in which presence of one species does not allow individuals of other species to grow or live… this is called antibiosis” [7]. It should be noted that the term symbiosis, in general use, i.e., without further qualification as above, signifies a long-term interaction.

Simply stated, dystopia is anti-utopia. Specifically, a dystopia is “an imagined world or society in which people lead wretched, dehumanized, fearful lives”. As such, it is undesirable and considered hostile to humankind [8].

Society is “a large group of interacting people in a defined territory, sharing a common culture” [9]. The consideration of a potential future coexistence or interface between humans and autonomous AI machines or autonomous robots inevitably gives rise to a plethora of sociological and philosophical issues, some of which are formulated and explicated below.

Last, transhumanism “is a class of philosophies of life that seek the continuation and acceleration of the evolution of intelligent life beyond its currently human form and human limitations by means of science and technology, guided by life-promoting principles and values” [10]. Known as “Humanity Plus”, it claims to seek to elevate the human condition [11]. Extropy is also considered an essential element of transhumanism [12].

This paper is organized into five parts. It first presents an overview of the subject and provides working definitions of key terminology. Second, it investigates the era we are currently in, specifically, the Fourth Industrial Revolution. Third, the effect on labor is examined, albeit briefly, in an attempt to gauge whether this new technological progress will result in additional employment or unemployment. Fourth, societal and ethical issues are investigated and explicated. Last, in the fifth section, we question whether brain transplants or a human-robot symbiosis are possible, or, more importantly, legal or desirable from an ethical stance. The contribution of this work is an idiosyncratic yet holistic perspective on a field in its infancy, based on an extensive literature review. Furthermore, we point out pertinent issues in the recent Russo-Ukrainian conflict.


2. The Fourth Industrial Revolution

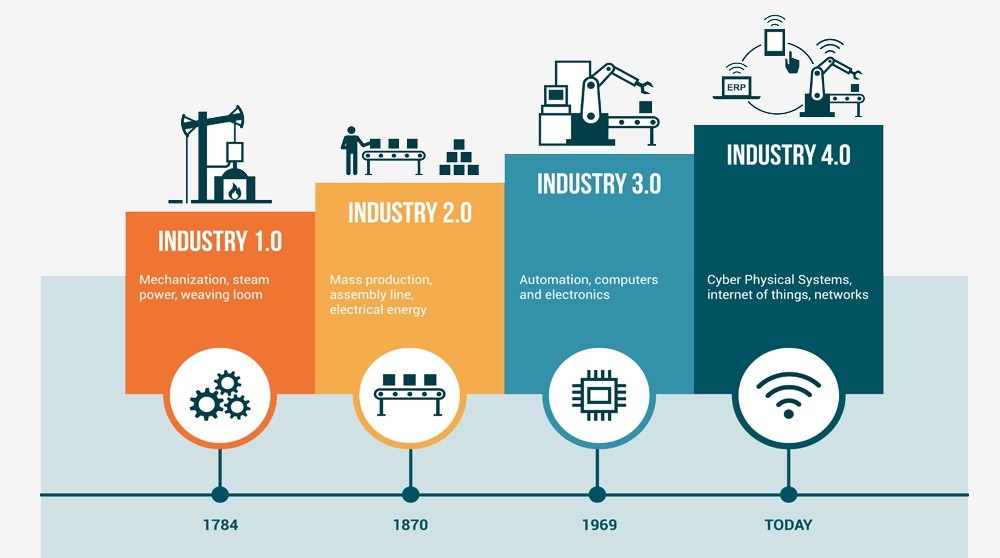

We are in an age of profound and systemic change. To comprehend this statement, one needs to consider the modernization of industrial progress from the 18th century until today, and its four distinct phases. Our present phase may be defined as the Fourth Industrial Revolution [13]. Specifically, these phases are:

- The Machine

- Mass

- The Digital

- The Fourth Industrial Revolution

The underlying technologies of the Fourth Industrial Revolution are intense interconnectivity (implemented by 5G), the Internet of Things (IoT), AI, gene sequencing and nanotechnology (Figure 1). Most of the changes in phases three and four were fast-tracked, ultimately spreading globally. This brought a level of change never witnessed before: not only were people aware of events happening halfway across the world, but economies had become so intertwined that they were affected by them. Klaus Schwab recommends four types of intelligence for dealing with the new reality: contextual, emotional, inspired and physical. This fourth wave of technological change may be encapsulated in a reader’s review of the keystone book on the subject:

“This book documents how people will be consolidated as valueless beings in a world ran by robots, AI and those in the upper echelon of politics and wealth. This outlines the coming power of globalization over all nations everywhere. Read it and look at it from a big picture perspective. It is scary” [14].

3. AI and robotics on labor: Societal and ethical issues

The application of AI to labor raises ethical questions. Granted, a company may wish to maximize its revenues by utilizing robots or AI. However, what happens when such a decision, adopted on a wide scale, results in massive unemployment? When do the rights (if maximization of corporate profit is a right) of the few and powerful outweigh the rights of the many?

Various studies of the effect of technological progress on the workplace, especially on potential employment opportunities or resulting unemployment, have been conducted. There seems to be an ongoing debate on humans versus robots: whether AI will replace workers, thus increasing the unemployment rate, or help workers become more productive. Technological unemployment, i.e., jobs lost as a result of technological progress, is not a new phenomenon. Weiyu Wang and Keng Siau state that “some jobs, that have disappeared as technology has advanced, include steam-train operators, switchboard operators, elevator operators, and typists. The disappearance of obsolete jobs that have been replaced by technologies is referred to as ‘technological job obliteration’. Each time an industrial revolution has occurred, people have been concerned about technological unemployment and technological job obliteration” [15].

However, humans seem to have innate qualities, such as morality and ethics, which cannot be replicated by robotics or AI, while in some professions interpersonal skills and communication are vitally important. Jack Dorsey, as CEO of Twitter, warned that AI may threaten entry-level computer programming jobs, as these will no longer be relevant. Even beginning software engineers will face less demand, as AI will soon write its own programs [16]. As with all new technologies, especially in the field of AI, fear of the unknown is present.

In 2015, Andy Haldane, then Chief Economist of the Bank of England, predicted that up to 15 million jobs in Britain could be lost to robots. The hardest hit are expected to be administrative, clerical and production tasks. As Haldane stated, in the 20th century machines substituted not just for manual tasks but for cognitive ones as well; machines are becoming ever smarter and can reproduce the set of human skills at a lower cost [17].

One needs to remember that the first automobile assembly lines were labor intensive and ultimately became automated; while the initial jobs were lost, new specializations requiring labor emerged. Likewise, the introduction of the personal computer in the 1970s and 1980s created millions of new jobs (semiconductor makers, software and app developers, information analysts, etc.), as technology has historically been a net job creator [18]. According to one modeling scenario, up to 14% of the global workforce will need to change occupational categories by the year 2030. Other modeling scenarios have attempted to predict the number of jobs lost to automation; according to the fastest scenario modeled, up to 30% of the global workforce, or 800 million workers, could be displaced in the period 2016-2030 [18].

As this field is developing at high speed, the evidence from studies to date remains inconclusive as to whether the associated technological progress will affect overall employment negatively. However, we need to endeavor to strike a balance between the needs of employers and the needs of the labor force, such that an ethical symbiosis can be realized.

4. Societal and ethical issues

A human is an individual, while an aggregation of humans forms families and, ultimately, societies; a human is considered the core or cell of society (as defined above). An AI robot or autonomous machine, by contrast, is initially a unit, and an aggregation of these simply results in more units, not a society as we perceive it. This immediately brings out the duality of “man” versus “machine”. Even if machines can communicate, interact or interface among themselves and with humans, they are still devoid of the idiosyncratic characteristics or innate qualities of humans (such as empathy or an impulse to play and fool around) that are central to all societal conceptions, at least for the time being. The author of [19] has entertained and presented the frightening concept of the singularity, the union of the best of human and machine, where our knowledge and skills (located within our brains) would merge with the superior capacity, speed, knowledge and sharing abilities of our machines. Given the prominence of the author, who also happens to be a noted inventor, one can only speculate that scientists around the globe are secretly researching this dystopian scenario. AI has many seemingly practical applications, such as in medicine, insurance, facial recognition, predictive policing, self-driving automobiles, mortgage or job applications, judicial algorithms, etc. An example of the latter is the ‘COMPAS’ software used in the U.S. [20].

COMPAS (an acronym for “Correctional Offender Management Profiling for Alternative Sanctions”) is an algorithm employed by some judicial systems in the U.S. to assess potential recidivism risk. It is a disputed risk assessment method, with an accuracy of 65%.

However, closer examination invariably reveals a plethora of ethical issues. For example, facial recognition may be acceptable on Facebook, should one choose to use that specific social medium and elect to enable that option; when performed by a network of cameras on streets and in other public spaces, however, it can violate our privacy, for it would reveal our associations, our mood, and the like.

In the case of autonomous vehicles, an ethical issue arises when considering the following possible scenario. A child suddenly crosses the road in front of the moving vehicle, and only two choices are available: kill the child, or hit a wall in order to save the child, which will result in the passenger’s death. What will the AI system be programmed to do? More specifically, what sort of ethical constraints should be built in [21]?

Predictive policing is employed to predict where crimes are likely to occur. In predictive policing as practiced in the U.S. or Europe, predominantly Black neighborhoods may be targeted, resulting in bias and unjust discrimination. As reported by the New York Times, Clearview, a facial recognition provider, has been sued by the American Civil Liberties Union (ACLU) for its controversial practices. According to Nathan Freed Wessler, senior staff attorney at the ACLU, privacy as we know it will end. Clearview’s facial recognition system is reportedly used by more than 2,200 law enforcement agencies around the world, and by businesses like Best Buy and Macy’s. The ACLU’s press release stated: “The New York Times revealed the company was secretly capturing untold numbers of biometric identifiers for purposes of surveillance and tracking, without notice to the individuals affected. The company’s actions embodied the nightmare scenario privacy advocates long warned of, and accomplished what many companies – such as Google – refused to try due to ethical concerns” [22].

The ACLU appears committed to defending privacy rights against the growing threat of this unregulated surveillance technology. Larry Page, a former Google CEO, stated in 2010 in the book “In the Plex: How Google Thinks, Works and Shapes Our Lives” that “eventually you’ll have the implant, where if you think about a fact, it will just tell you the answer” [23].

Robots camouflaged as seals have been employed in nursing homes to provide companionship to residents who have no family or friends to visit them. While practical in conception, this practice also serves as a sad commentary on contemporary society. Robotic “pet seals” have also been used in nursing homes in England in therapeutic applications for dementia patients. A robot disguised as a baby harp seal, known as “Paro”, is meant to remind residents of their past pets and improve their quality of life [24].

During the contested Coronavirus (Covid-19) pandemic and the associated quarantine, drones were used in China, England and other countries for the surveillance of public spaces, for measuring the temperature of citizens walking in public areas, occasionally for the delivery of medicine, etc. Once a person was spotted, they were instructed via loudspeaker to leave the area promptly. It proved to be an effective police practice, regardless of the possible ethical considerations involved. Critics have regarded the use of drones for policing by law enforcement agencies as an infringement on privacy [25].

Drones, now owned by many national armies, can, like other autonomous weapons, employ AI to kill without human intervention [21]. Obviously, this does not allow the decision to be aborted should an unexpected or unprogrammed parameter surface seconds before firing. So the logical question arises: what happens when it is too late?

In the recent phase of the Russo-Ukrainian conflict that commenced on February 24, 2022, drones were used by both sides for reconnaissance, so that subsequent air strikes would succeed. Ukraine relied on Turkish-made Bayraktar TB2 drones, which proved quite effective despite their technical shortcomings; surprisingly, the Russians relied less on drones. Ukraine’s drones also fired guided missiles at Russian missile launchers [26]. Gone are the days of man-to-man confrontation. The new reality minimizes the risk to the aggressor, in effect rendering conflicts free of the political cost of deceased soldiers. Wouldn’t this practice make wars more common?

The U.S. Army has developed prototype robot-soldiers poised to fight the wars of the future. These automated robots were initially developed to detonate bombs, or perhaps to clear minefields; indeed, a noble goal. However, it was apparently decided to fully exploit these robots in military applications (Figure 2). The robot shown in Figure 2 was developed by Virginia Tech engineering students to support military functions; it can extinguish fires that break out on naval ships.

Both the U.S. and Russia are exploring the possibility of swarms of robotic soldiers on the battlefield, supposedly to protect the lives of actual soldiers, as these AI machines target faster and more accurately than humans. However, “it doesn’t help much, either, that the militaries responsible for pursuing this kind of battlefield autonomy already have atrocious records when it comes to avoiding civilian deaths” [27].

Richard Moyes, a co-founder of the Campaign to Stop Killer Robots (CSKR), is very concerned that autonomous military robots may develop their own intelligence and turn against their human creators, or against the real soldiers fighting at their side. CSKR has repeatedly called for a ban on autonomous robot soldiers; however, these are still being deployed in war zones. As stated, “… amorality is no longer an accurate characteristic of robotic systems. When discussing the ethics of artificial intelligence, experts warn that autonomous systems are inherently ‘tainted’ by its programmers. Decision-making processes, for example, are biased toward the person who designed the software” [28].

Unfortunately, it appears that for the U.S. and China, robot soldiers will play a major role in the future [29]. In the past, men fought face to face, like real men; then came weapons, later stealth bombers, ships and drones, and now machines will hunt down and kill humans. Figure 3 shows MAARS (Modular Advanced Armed Robotic System). Its modular design allows the operator to outfit it with a variety of armaments, ranging from lasers to tear gas. Once the political cost of dead soldiers is removed, wars can be started at will [30]. As early as 1976, Joseph Weizenbaum argued that AI should not be used in certain specific applications, one of them being soldiering.

A novel approach has been proposed for effectively confronting the challenging ethical dilemmas involved in AI-human interaction: utilizing the unique strengths of both humans and AI systems in paradigm-shifting solutions [31].

Another researcher embraces the view that it is imperative to immediately criminalize any research in AI weapons and the crossbreeding of humans with machines, considering these as crimes against humanity, in order to avoid a scenario in which the other half of myself will be my robot [32].

Human Rights Watch addresses this issue quite eloquently:

“Allowing innate human qualities to inform the use of force is a moral imperative. Weapons systems do not possess compassion and empathy, key checks on the killing of civilians. Furthermore, as inanimate objects, machines cannot truly appreciate the value of human life and thus delegating life-and-death decisions to machines undermines the dignity of their victims. Human control also promotes compliance with international law. For example, human judgment is essential to correctly balance civilian harm and military advantage and comply with international humanitarian law’s proportionality test. Machines cannot be pre-programmed to respond appropriately to all of the complex scenarios they may face” [33].

The independent High-Level Expert Group on AI of the European Commission published the “Ethics Guidelines for Trustworthy Artificial Intelligence” [34]. The guidelines set out seven key requirements that AI should meet in order to be deemed “trustworthy”:

- Human agency and oversight

- Technical robustness and safety

- Privacy and data governance

- Transparency

- Diversity, non-discrimination and fairness

- Societal and environmental well-being

- Accountability [34].

4.1. Implantable brain devices

On August 28, 2020, Elon Musk presented Neuralink’s new seamlessly implantable brain device, with more than a thousand neuron channels, which supposedly can remedy neurological problems, such as brain and spine issues (strokes, spinal cord injuries, etc.). According to Musk, this device, which is connected to a computer and may be removed, even allows us to control the autopilot of our Tesla automobile: simply by thinking that we need our automobile, the appropriate signal is transmitted to it, and it arrives. The whole surgical procedure is performed by a fully automated robot in about an hour. The battery needs to be inductively charged daily. The device, which had received a Breakthrough Device designation from the FDA, was previously tested on pigs. The initial cost is expected to be “a few thousand”, with the cost dropping rapidly within a short time. In the near future, it is expected to save and replay memories. Neuralink’s brain chip will literally make us superhuman, taking the word “fiction” out of “science fiction” [35]. How far should we experiment with human nature? Is this an ethical application of science (at least the part about summoning our automobile)?

4.2. Technoethics and Artificial Morality

This new age has given rise to an emerging, interdisciplinary field centered on the idea of creating artificial moral agents (AMAs) by implementing moral competence in AI systems. An AMA is “an artificial autonomous agent that has moral value, rights and/or responsibilities. This is easy to say but I must acknowledge that no traditional ethical theory takes this notion seriously” [36]. This noble goal, which seems futile judging by the plethora of ethical infractions throughout human history, is to build into machines the same ethical principles that govern societies. As ethics are subjective and dependent on culture and historical period, it should be evident that this endeavor offers little hope. For example, contemporary women’s dresses or swimsuits would have been considered absolutely scandalous in the Victorian era. Moreover, some modes of behavior considered normative in Western European culture would cause offense or be considered unacceptable in some Middle Eastern countries.

5. Human-robot symbiosis?

Anthropomorphic simulation of AI systems to such an extent that it becomes difficult to discern humans from robots, or even to entrench a social belief in the equality of man and machine, appears to be an option scientists are willing to entertain, however unethical or contrary to theological doctrine that may be. The question, however, is not how to prepare for the coming intelligence of autonomous artificial systems, but how to employ it in our ontological correlation with everyone and everything (fellow humans, the world, God); for a machine, by design, cannot enjoy bliss.

Realbotix, a high-tech company in California, is already designing and producing sex robots with AI, life-like female replicas that can converse with their owners and engage in sexual intercourse. Harmony is the name of their first product, a thin blonde with emphasized cheekbones and protruding breasts and buttocks. A client simply specifies the desired facial characteristics, hair color, body shape or traits, and personality, and purchases a specific robot for about $20,000. It is projected that in the coming decades this may be viewed as mainstream, socially acceptable behavior. Aside from ontological or ethical concerns, critics insist that preferring virtual partners over real ones will result in psychological distancing between people, affect human relationships, and lead to a lower world population. The company is already working on a male version [37].

Robot dogs developed by Ghost Robotics will soon start patrolling the perimeter of Tyndall Air Force Base in Panama City, Florida. Employed to enhance security and surveillance patrolling, these autonomous drones, with high-tech sensors, two-way communication, seven hours of power, and the ability to defend themselves, may be deployed at all Air Force bases should testing provide satisfactory results [38].

If human bliss requires our deep and permanent association with others, then recognizing the “Social Principle” as a prerequisite for the operation of AI systems appears to be a necessary condition. The “Social Principle” should be a seminal point in any ethical conception of AI [32].

Such a conception (i.e., one based on the Social Principle) requires that these systems always satisfy, in the short or the long term, the ontological need for a real coexistence of all people within the entire body of humanity. Systems that endanger the relative value of man must therefore be rejected; otherwise, man may, willingly or not, be transformed into an inferior, sub-human entity: a man-beast or a man-machine (see the film ‘Upgrade’ [39]).

The technical and non-technical methods employed to satisfy the Social Principle in the design and operation of AI systems should be developed by the scientific community itself, in order to avoid the risk of deifying the individualism we inherited from Europe in the last century, which would result in a dystopic situation where the other half of myself will be my robot [32]. A sociological study of dystopia inevitably examines three key issues: (a) justice; (b) liberation; and (c) humanity [40]. Furthermore, a survey of dystopic literature highlights five common points:

- Government

- Environmental

- Technological control

- Survival

- Loss of

Obviously, the third point, that of “technological control”, is the one pertinent to this paper and is indeed potentially alarming, as are the rest of the points. The Matrix, The Terminator, Upgrade [39], and other dystopic productions portray a future society controlled by technology, whether in the form of AI computers or robots. Entities employ technology to control the masses; as a result, humans lose their sense of freedom and individuality. Jaron Zepel Lanier, a digital pioneer turned critic of technology and the web, has argued that technologies reflect and encourage the worst aspects of human nature; as a result, people do not act responsibly [41].

In fact, new technologies tend to harm our interpersonal communication, relationships, and communities [42].

Human thought and the whole range of human emotions, including introspection and empathy, pose a challenge if we consider transferring them to a non-human entity.

Oxford philosopher Nick Bostrom, founding director of the Future of Humanity Institute, whose work focuses on AI and who popularized the term “superintelligence”, is very skeptical of the implications of a possible misuse of AI. Superintelligence is defined as any intellect that greatly exceeds the cognitive performance of humans in virtually all domains of interest [43]. Although he holds that we are not powerless, Bostrom views the rise of superintelligence as potentially disastrous for humankind and calls for vigilance against the risks involved; in 2017 he cosigned the Asilomar AI Principles, a list of 23 principles that AI should adhere to.

In [44], the author envisions the radical possibilities of our merging with the intelligent technology we are creating. Earlier, he argued that the human being will be succeeded by a superintelligent entity that is partly biological and partly computerized [19]: a plausible future in which machine intelligence outpaces the biological brain, transcending our biological limitations. In [45], the author addresses whether our species can survive, exploring the perils of the heedless pursuit of advanced AI. Arthur C. Clarke, a technophile science-fiction legend, was highly skeptical of the future; he held that it was only a matter of time before machines dominated humankind.

Steven Poole raises the alarm with an urgent call to action:

“Wake up, humanity! A hi-tech dystopian future is not inevitable” [46].

There is

“the worry that machines will take over. Could machines outsmart us and control us? Is AI still a mere tool, or is it slowly but surely becoming our master? What is to become of us? Will we become the slaves of machines?” [21]

Discussions regarding AI need to become mainstream, especially with respect to ethics and the need for regulation [47]. AI could be our final invention [45], given a catastrophic downside that Google, Apple, IBM, and DARPA are not willing to entertain. Table 1 summarizes the ethical issues examined in relation to AI and autonomous robotics. A close reading reveals a pessimistic reality that is already being witnessed.

Specifically, five (5) of the ten (10) issues presented in Table 1, i.e., 50%, are already a cause of concern. Robot soldiers, armed drones, surveillance drones, implantable brain devices (to store/recall memories, summon the automobile, etc.) and sex robots de facto constitute ethical infractions (E.I.), as discussed and further elaborated in the Conclusions section.

Table 1: Technoethics

TECHNOETHICS | AERM | PEI | EI |
Singularity | * | | |
Superintelligence | * | | |
Artificial Moral Agents | * | | |
Facial recognition providers | * | | |
Robot soldiers and robot dogs | | | * |
Armed drones | | | * |
Surveillance drones | | | * |
Implantable brain devices (store/recall memories, summon the automobile, etc.) | | | * |
Sex robots | | | * |
Robots for human companionship/interaction | * | | |

where:

A.E.R.M. = Attempted ethical remedial measure

P.E.I. = Potential ethical infraction

E.I. = Ethical infraction

6. Conclusions

It seems certain that the new technological revolution in AI and autonomous robots will transform society in ways we cannot even imagine. Based on the glimpse of things to come, the way we live, interact, work, and fight wars will not be like anything witnessed before in human history. As elaborated, we are already beginning to witness the adverse effects of some related developments. At this point, it is imperative that we reiterate the aforementioned definition of society, which is pivotal to our discourse. Society is “a large group of interacting people in a defined territory, sharing a common culture”. Three ideas immediately surface: interaction, people, and culture. Can a machine, or an aggregation of machines, however intelligent, constitute “people” who “interact” and produce “culture”? Furthermore, one ought to remember the theory of the Social Contract, through which societies are regulated; otherwise, social disorder and chaos result. The justification of a society or a state depends on showing that everyone essentially consents to it; the issue of justification is seminal. From Epicurus to Hobbes, Locke, and Rousseau, the Social Contract explicates how societies are structured and regulated; societies are expected to be legitimate, just, obligating, etc. [48]. This implies that man and machine are philosophically and sociologically incompatible, as such a relationship would violate this widely adopted informal convention. Additionally, we are reminded of the Social Principle as a necessary ethical condition for the operation of AI systems, which unfortunately eludes researchers [32].

While the consequences for labor are unclear, the consequences for society seem pessimistic. Brain chips can now summon our automobile; in the near future, could they be manipulated to control a malicious robot? Storing and replaying memories sounds unnatural; whatever happened to authentic memories?

Furthermore, with reference to the aforementioned brain transplant envisioned by Google, we may question whether it is a noble or ethical goal for technology to take command of the human brain. Isn’t transhumanism going to result in global overpopulation (by extending the human life span), while interfering with religious beliefs? Thus, are genetic engineering and biotechnology, as manifested in transhumanism, legal or really desirable?

An epoch that resorts to AI sex robots for companionship and sexual gratification, like Realbotix’s Harmony, raises ontological, sociological, psychological, and ethical questions. Preferring virtual partners over real ones seems unnatural; however, the ground is being set to recognize this behavior as socially acceptable, or mainstream. The robotic therapeutic “pet seal” mentioned above, which improves the quality of life of nursing home residents, is an example of technology summoned to serve humankind. One may wonder, though, why an invented pet was used instead of a real one; a trained cat, for instance, would be a preferable alternative. Or, more importantly, whatever happened to real, altruistic, and caring human beings serving as volunteers?

As explicated above, Barrat, Arthur C. Clarke, Lekkas and others, have been highly skeptical of a safe and ethical human-AI interaction. It is imperative to reiterate the call to immediately criminalize any research in AI weapons, and the crossbreeding of humans with machines, considering these as crimes against humanity [32].

The relationship of legality to ethics (the legalization of ethics?) and to technology must also be considered. In the aftermath of 9/11, the American people were willing to forsake some of their freedoms in order to enhance their safety, or at least their perception of safety; thus, they apparently accepted the surveillance measures imposed by the Government without protest. Clearly, this is an issue of civil liberties vs. security [49].

The ACLU’s legal action against the facial recognition provider Clearview AI is indicative of an unregulated industry infringing upon our privacy. Present surveillance technology is already a nightmare for human rights; our facial characteristics have become, secretly and without our consent, a source of profit for others. Human Rights Watch is alarmed at the reality of autonomous lethal weapons that disregard human life. As it argues, being inanimate objects, these machines cannot appreciate the value of human life; therefore, delegating life-and-death decisions to autonomous machines undermines the dignity of their victims. Furthermore, such an approach does not comply with international law. The CSKR’s repeated pleas for the legal prohibition of robot soldiers have fallen on deaf ears. A proactive and vigilant stance seems imperative in order to safeguard against probable misuse, as in the case of the U.S.’ robot-soldiers, a practice that, if left unchecked, will be copied by other major armies in the world. Obviously, this is an example of the weaponization of AI with a total disregard for its implications for humanity. It can easily be surmised that the owner of these robot-soldiers may evade accountability for crimes against humanity by claiming a “programming malfunction”; thus, a possibly deliberate small-scale genocide may go unpunished. A democratic United Nations, true to its founding goals, should have curtailed this from the very beginning. However, this is further testament to the U.N.’s questionable modus operandi, which traditionally supports the global superpowers’ actions. The weaponization of AI systems is amensalism disguised; therefore, the global community needs to object vehemently to such potentially disastrous and inhuman practices before Pandora’s box is opened. Indeed, it is highly probable that these robot-soldiers may even be used against peaceful urban protesters.
If human soldiers have historically engaged in atrocities and genocides, can these machines be expected to act in a more humane or ethical manner? For human history has shown time and again that, in military confrontations, personal or group gains often prevail at the expense of ethics.

The current state of technology may allow for a version of a Blade Runner (1982 motion picture) replicant, a synthetic, bio-engineered anthropoid, in the near future, raising questions such as how far technological “progress” can be allowed to go. Is science for science’s sake above ethics? The purpose of technology is to serve humankind; it is neither a self-serving aim nor a tool serving monopolies or oligarchs. We are reminded of Protagoras (c. 490 – c. 420 B.C.), the Greek philosopher who introduced the conception of anthropocentrism, as expressed in the radical statement “Man is the measure of all things” (Protagoras entertained the idea of relativism and stressed the need for a moral and political ideal). “Dehumanization” and “fear” are characteristics of dystopia; thus, a near future with surveillance drones, armed drones, robot-soldiers, brain transplants, and the like can only be considered dystopic, as confirmed by the definition of the term. The ethical stakes are high, as technological “progress” is already interfering with humankind and conscience.

AI and robotics may very well be the end of life as we know it. Scientists engulfed in technological optimism are often unconcerned with, or cannot fully comprehend, the dialectics of avant-garde technology and its societal implications; for them, the unbridled quest for knowledge supersedes the ontological conception of homo sapiens. Clearly, this is a case of futurology over sociology: technological revolution versus biological evolution. Such optimism also sidesteps the question of whether machines will one day truly think and become conscious and self-aware, and thus possibly be able to turn against humans. Ideally, the benefits and gains of technological advancement ought to be balanced against the potential harm to humankind and society. The issue is whether such advancement will enhance and improve our lives and society at large, or potentially cause major upheavals to humankind and societal structure. Conceptual debates and judicious choices should consider the proper place of technological practice in human life, ethically exploring the idea of technology as a social practice and as a medium of political power. Responsible research and innovation is part of the solution, and it needs to be imposed by a legal framework that is global in scope. The Ethical Guidelines presented by the E.U., although incomplete from an ontological perspective, are certainly a step in the right direction; however, the developments discussed above confirm that these guidelines are being ignored or dismissed. Assigning personhood or acceptable social behavior to autonomous machines, or expecting ethical behavior from them to facilitate their relationship with humans, is not unlike expecting humans to act like machines. For machines will never possess human qualities such as morality and ethics (as explicated above).
Since humans have historically found ways to manipulate ethics (from the workplace to governments waging wars around the globe in the name of “peace”, as in the case of the U.S.A., Russia, or Turkey), is it possible or logical for AI machines programmed by humans to be more “ethical” than humans? Has AI been deified in a global Network Society [50] that is desperately in search of the appropriate values? Have we ultimately mystified information science? Is the New World Order working on an agenda, de facto acting as our self-appointed guardians, envisioning a future for us without even asking us?

Finally, is the protagonist of the future going to be humankind or AI? Some of these quasi-philosophical questions are obviously rhetorical in nature, and this forum has provided an exceptional opportunity to highlight them.

Table 1 portrays a bleak view of reality, which can only be described as dystopian. It therefore appears that the conception of the future during our formative years was rather naive, as life has not been rendered easier, happier, or more peaceful because of AI and autonomous robots. Unfortunately, aside from a few peaceful applications (such as the nursing home pet, or improved medical imaging), the bulk of related research has been pursued in warfare, surveillance, and corporate profit applications; brain transplants, AI, and autonomous robots offer a glimpse of an ominous and pessimistic future probably looming over us. We started with a quote; likewise, we shall end with quotes. AI, the invention of all inventions, could spell the end of the human race. As Hawking stated, AI could be “the worst event in the history of our civilization”. Therefore, it is entirely up to us to ensure that a dystopian future is not realized. For it is not AI we should fear, but mankind itself…

“The superficial post-war dream that technology would solve the world’s social problems has transformed into a nightmare of electronically enabled global surveillance and suppression”. Alejandro Garcia de la Garza, 2013.

Conflict of Interest: The authors declare no conflict of interest.

- H. Jeppesen, “Land mines still a global threat, despite fewer deaths”, DW (online), 2020. Available at: https://www.dw.com/en/land-mines-still-a-global-threat-despite-fewer-deaths/a-53018602 (accessed 28 Aug. 2020).

- S. Makridakis, “The forthcoming Artificial Intelligence (AI) revolution: Its impact on society and firms”, Futures, vol. 90, pp. 46-60, 2017, doi: 10.1016/j.futures.2017.03.006.

- B. P. Green, “Self-preservation should be humankind’s first ethical priority and therefore rapid space settlement is necessary”, Futures, vol. 110, pp. 35-37, 2019, doi: 10.1016/j.futures.2019.02.006.

- H. G. Jimenez, “Taking the fiction out of science fiction: (Self-aware) robots and what they mean for society, retailers and marketers”, Futures, vol. 98, pp. 49-56, 2018, doi: 10.1016/j.futures.2018.01.004.

- The European Commission, “A Definition of AI: Main Capabilities and Disciplines”, The Independent High-Level Expert Group on Artificial Intelligence, 26 June, 2019.

- “Symbiosis”, Merriam-Webster Dictionary. Available at: https://www.merriam-webster.com/dictionary/symbiosis (accessed 24 June 2020).

- I.P. Rastogi, B. Kishore, A complete course in ISC Biology, Vol. I, New Delhi, India: Pitambar Publishing Company, 2006.

- “Dystopia”, Merriam-Webster Dictionary. Available at: https://www.merriam-webster.com/dictionary/dystopia (accessed 7 Aug. 2020).

- “Society”, Open Education Sociology Dictionary. Available at: https://sociologydictionary.org/society/ (accessed 22 July 2020).

- M. More, “The Overhuman in the Transhuman”, Journal of Evolution and Technology, vol. 21, issue 1, pp. 1-4, 2010. Available at: https://jetpress.org/v21/more.htm (accessed 12 Apr. 2022).

- Humanity+, Transhumanist FAQ (2016-2021). Available at: https://www.humanityplus.org/transhumanist-faq (accessed 12 Apr. 2022).

- About Extropy Institute (n.d.). Available at: https://www.extropy.org/About.htm (accessed 14 Apr. 2022).

- K. Schwab, The fourth industrial revolution, Currency, 2017.

- Review of the Fourth Industrial Revolution. Available at: https://www.amazon.com/Fourth-Industrial-Revolution-Klaus-Schwab/dp/1524758868/ref=sr_1_1?dchild=1&keywords=%22The+fourth+industrial+revolution%22&qid=1594999178&s=books&sr=1-1 (accessed 17 July 2020).

- W. Wang, K. Siau, “Artificial Intelligence, Machine Learning, Automation, Robotics, Future of Work and Future of Humanity: A Review and Research Agenda”, Journal of Database Management, issue 1, article 4, pp. 61-79, 2019.

- C. Clifford, “Twitter billionaire Jack Dorsey: Automation will even put tech jobs in jeopardy”, CNBC make it, 22 May 2020, https://www.cnbc.com/2020/05/22/jack-dorsey-ai-will- rdize-entry-level-software-engineer-jobs.html (accessed 28 July

0).

- L. Elliott, “Robots threaten 15m UK jobs, says Bank of England’s chief economist”, The Guardian (online), 2015. Available at: https://www.theguardian.com/business/2015/nov/12/robots-threaten-low-paid-jobs-says-bank-of-england-chief-economist (accessed 28 Aug. 2020).

- J. Manyika, K. Sneader, “AI, automation, and the future of work: Ten things to solve for”, June 1, 2018, Executive Briefing, McKinsey Global Institute, McKinsey & Company, 2018. Available at: https://www.mckinsey.com/featured-insights/future-of-work/ai-automation-and-the-future-of-work-ten-things-to-solve-for (accessed 22 Aug. 2020).

- R. Kurzweil, The singularity is near, New York: The Viking Press, 2005.

- E. Yong, “A Popular Algorithm Is No Better at Predicting Crimes Than Random People”, The Atlantic, 17 January 2018. Available at: https://www.theatlantic.com/technology/archive/2018/01/equivant-compas-algorithm/550646 (accessed 21 Nov. 2019).

- M. Coeckelbergh, AI ethics. Cambridge, Massachusetts: The MIT Press, 2020.

- R. Daws, “ACLU sues Clearview AI calling it a ‘nightmare scenario’ for privacy”, AI News, 26 May 2020. Available at: https://artificialintelligence-news.com/2020/05/29/aclu-clearview-ai-nightmare-scenario-privacy (accessed 28 July 2020).

- S. Levy, In the Plex: How Google Thinks, Works, and Shapes Our Lives, Simon and Schuster, 2011.

- M. Ford, Nursing home welcomes new robotic seal ‘therapy pet’, Nursing Times, 2019. Available at: https://www.nursingtimes.net/news/older-people/nursing-home-welcomes-new-robotic-seal-therapy-pet-19-03-2019 (accessed 25 June 2020).

- CNN Business, “See how drones are helping fight coronavirus”. Available at: https://edition.cnn.com/videos/business/2020/04/30/drones-coronavirus-pandemic-lon-orig-tp.cnn (accessed 31 July 2020).

- D. Philipps, E. Schmitt, Over Ukraine, Lumbering Turkish-Made Drones are an Ominous Sign for Russia, 2022. https://www.nytimes.com/2022/03/11/us/politics/ukraine-military-drones-russia.html (accessed 12 Apr. 2022).

- K. D. Atherton, “Robots will replace soldiers in combat says Russia”, Forbes, April 30, 2020. Available at: https://www.forbes.com/sites/kelseyatherton/2020/04/30/robots-will-replace-soldiers-in-combat-says-russia/#520740533c71 (accessed 7 Aug. 2020).

- R. Johansson, “AI expert warns that military robots could go off the rails and wipe out the very people they are supposed to protect”, Robot News, Feb. 20, 2019. Available at: https://robots.news/2019-02-20-ai-expert-warns-that-military-robots-could-go-off-the-rails.html (accessed 7 Aug. 2020).

- S. Deoras, “For U.S. and China’s military the future is robots, how is India competing”, Analytics India Magazine, Feb. 15, 2018. Available at: https://analyticsindiamag.com/us-chinas-military-future-robots-india-competing (accessed 7 Aug. 2020).

- J. Bachman, The U.S. Army is Turning to Robot Soldiers. Bloomberg, (online), 2018. Available at: https://www.bloomberg.com/news/articles/2018-05-18/the-u-s-army-is-turning-to-robot-soldiers (accessed 25 June 2020).

- J. Chen, “Who Should Be the Bosses? Machines or Humans?”, 1st Conference on the Impact of Artificial Intelligence and Robotics, 79, 2019.

- Elder Dr. G. Lekkas, Advisor of the Representation Office of the Church of Greece in the EU, “Artificial intelligence and applied ethics. But which ethics?”, 2020. Available at: https://www.romfea.gr/ekklisia-ellados/26881-texniti-noimosuni-kai-efarmosmeni-ithiki-alla-poia-ithiki

- B. Docherty, Statement on meaningful human control, CCW meeting on lethal autonomous weapons systems. April 11, 2018. Available at: https://www.hrw.org/news/2018/04/11/statement-meaningful- human-control-ccw-meeting-lethal-autonomous-weapons-systems (accessed 22 July 2020).

- European Commission, “Ethics guidelines for trustworthy AI”, 8 April, 2019. Available at: https://ec.europa.eu/digital-single-market/en/news/ethics-guidelines-trustworthy-ai (accessed 31 July 2020).

- E. Musk, Neuralink Progress Update, Summer 2020. Available at: https://www.youtube.com/watch?v=DVvmgjBL74w (accessed 29 Aug. 2020).

- R. Luppicini, R. Adell, Handbook of research on technoethics. Hershey, PA: Information Science Reference, 2009.

- K. Couric, “You can soon buy a sex robot equipped with artificial intelligence for about $20,000”, ABC News, 2018. Available at: https://www.youtube.com/watch?v=-cN8sJz50Ng (accessed 22 Aug. 2020).

- About Ghost Robotics. Available at: https://www.ghostrobotics.io/about (accessed 23 Nov. 2020).

- Upgrade (film). https://en.wikipedia.org/wiki/Upgrade_(film) (accessed 24 July 2020).

- D. L. Brunsma, D. Overfelt, Sociology as documenting dystopia: Imagining a sociology without borders – A critical dialogue. Societies without Borders, 2, pp. 63-74, 2007.

- D. Rushkoff, Cyberia: Life in the trenches of cyberspace, 2nd ed. Manchester: Clinamen Press Ltd., 2002.

- R. Rosenbaum, “What Turned Jaron Lanier Against the Web?”, 2013. Available at: http://www.smithsonianmag.com/innovation/what-turned-jaron-lanier-against-the-web-165260940/?all&no-ist (accessed 12 Apr. 2022).

- N. Bostrom, Superintelligence: Paths, dangers, strategies. Oxford: Oxford University Press, 2016.

- R. Kurzweil, How to create a mind: The secret of human thought revealed. New York: Penguin Books, 2013.

- J. Barrat, Our final invention: Artificial intelligence and the end of the human era. New York: St. Martin’s Press, 2013.

- S. Poole, Wake up, humanity! A hi-tech dystopian future is not inevitable. The Guardian (online), Opinion section, 2019. Available at: https://www.theguardian.com/commentisfree/2019/feb/18/technological-progress-superjumbo-airbus-dystopia-future (accessed 20 June 2020).

- S. Sarangi, P. Sharma, Artificial Intelligence: Evolution, ethics and public policy, Abingdon, Oxon: Routledge, 2019.

- Stanford Encyclopedia of Philosophy, “Contemporary approaches to the Social Contract”, 2017. Available at: https://plato.stanford.edu/entries/contractarianism-contemporary (accessed 22 Aug. 2020).

- C. Doherty, Balancing Act: National Security and Civil Liberties in Post-9/11 Era, 2013. Available at: https://www.pewresearch.org/fact-tank/2013/06/07/balancing-act-national-security-and-civil-liberties-in-post-911-era (accessed 15 Apr. 2022).

- M. Castells, The Rise of the Network Society, vol. 1, 2nd ed., Wiley, 2010.
