Software Development and Application for Sound Wave Analysis

Journal of Engineering Research and Sciences, Volume 4, Issue 3, Page # 8-21, 2025; DOI: 10.55708/js0403002

Keywords: Short-time Fourier transform, non-negative matrix decomposition, music, piano

(This article belongs to the Special Issue on SP6 (Special Issue on Computing, Engineering and Sciences (SI-CES 2024-25)) and the Section Acoustics (ACO))


Jekal, E. , Ku, J. and Park, H. (2025). Software Development and Application for Sound Wave Analysis. Journal of Engineering Research and Sciences, 4(3), 8–21. https://doi.org/10.55708/js0403002

Eunsung Jekal, Juhyun Ku and Hyoeun Park. "Software Development and Application for Sound Wave Analysis." Journal of Engineering Research and Sciences 4, no. 3 (March 2025): 8–21. https://doi.org/10.55708/js0403002

E. Jekal, J. Ku and H. Park, "Software Development and Application for Sound Wave Analysis," Journal of Engineering Research and Sciences, vol. 4, no. 3, pp. 8–21, Mar. 2025, doi: 10.55708/js0403002.

In this paper, we developed software that analyzes piano performances using the short-time Fourier transform, non-negative matrix factorization, and root mean square. To demonstrate the reliability of the software, we also present signal analyses that reflect the characteristics of several performers. The software was written in Python and relies heavily on the Fourier transform to precisely determine the information that characterizes a performance, such as touch strength, speed, and pedaling. The results show that musical flow and waveforms can be interpreted visually in a variety of ways. Based on this, we derived an additional approach for designing systems that seamlessly connect hearing and vision.

1. Introduction

Sound wave analysis is essential for understanding music. Music contains very complex structures and patterns that can be examined scientifically and technically, not just felt. Sound wave analysis allows a deep understanding of the components of music, which can be used for music creation, learning, and research [1–3]. Acoustic analysis tools have long evolved to help humans better understand and use sound. They began as little more than auditory evaluation and have since developed into advanced systems built on precise digital signal processing [4].

In the early days of acoustic analysis tools, subjective evaluation by human hearing dominated. Mechanical and analog devices such as oscillographs and analog spectrum analyzers were used to visualize the waveform or frequency content of sound waves [5–7]. With the advent of the digital age, however, sound data began to be analyzed using computers and software. The fast Fourier transform (FFT) became a key technique for frequency-domain analysis. Improved precision has enabled accurate characterization of the temporal and frequency properties of sounds [8,9].

With recent advances in machine learning and AI-based analysis, technologies that recognize sound patterns, separate speech from instruments, and analyze emotion are also in use. Mobile devices and cloud computing make real-time sound analysis possible, and 3D sound analysis takes spatial information into account. Extending these techniques is expected to open new applications in many fields, including healthcare (hearing tests), security (acoustic authentication), and environmental monitoring (noise measurement). However, current tools also have clear limitations.

First, there is a technical limitation: analysis accuracy is poor in complex environments. It is difficult to extract or analyze specific sounds in noisy or reverberant environments. Diverse sound sources are also hard to handle, so sources with very different characteristics, such as musical instruments, human voices, and natural sounds, are difficult to separate accurately [10].

Another limitation is a lack of generality. Many tools are optimized for a particular domain, but no general-purpose system has been built to handle all kinds of acoustic data.

Finally, the biggest limitation is the gap between what analysis tools measure and what humans actually hear. Subjective sound quality is difficult to quantify, so tools cannot fully capture or replace a person’s subjective listening experience. Techniques for precisely analyzing human cognitive responses to sound (emotion, concentration, etc.) are still in their infancy [11].

2. The Need for Analytical Tools

2.1. Understanding the Basic Components of Music

Music consists of physical elements such as amplitude, frequency, and temporal structure (rhythm). Sound wave analysis allows for quantitative measurement and understanding of these elements.

Frequency analysis makes it easier to identify the pitch of a note and to understand the composition of chords and melodies. Time analysis can identify rhythm patterns and beat structures, and spectrum analysis can identify the characteristic tone (timbre) of an instrument [12].

2.2. Instruments and Timbre Analysis

Each instrument has its own characteristic sound (timbre), which comes from the balance between its fundamental frequency and its overtones. Sound analysis visualizes these acoustic characteristics and helps explain the differences between instruments.

For example, a violin and a piano sound different even when they play the same pitch, because they combine frequency components differently [13].

2.3. Understanding the Emotional Elements of Music

Music is used as a tool for expressing emotions, and certain frequencies, rhythms, and combinations induce emotional responses.

For example, slow rhythms and low frequencies are often used to evoke sadness, and fast rhythms and high frequencies to evoke joy. Sound wave analysis can be used to study these relationships and determine the correlation between emotions and music [14].

2.4. A structural analysis of music

The structure of music is not just an arrangement of sounds; it includes complex patterns such as melody, chords, rhythm, and texture. Sound wave analysis allows us to visualize these structural elements. For example, harmonic analysis can help musicians understand chords and chord progressions, and melodic analysis lets them check pitch and rhythm patterns. When multiple melodies are played simultaneously, analysis can also reveal how they interact [15].

2.5. Support for music production and mixing

Sound wave analysis is essential for solving technical problems in the music production process.

Musicians adjust the volume of individual frequency bands to balance instruments and to remove unwanted sounds from recordings. In sound design, sound wave analysis also helps analyze and improve sound effects [16].

2.6. Music learning and research

Sound wave analysis provides music learners and researchers with tools to visually understand music theory.

2.7. Improved listening experience

Sound wave analysis can visually reveal sound elements that are difficult for the human ear to hear (e.g., very low or very high frequencies) and thereby improve the listening experience.

For example, spectrograms can reveal subtle details hidden in music, letting users see its acoustic complexity.

The level of analysis and the tools required vary with the purpose. Whatever the purpose, sound wave analysis is a powerful tool for exploring the scientific and artistic nature of music beyond listening alone [17].

3. Prior studies

3.1. A way of expressing sound

Sound can be described in many ways, but it is mainly explained by physical principles such as vibration, waves, and frequency. Sound is a pressure wave that travels through air (or another medium) when an object vibrates. For example, when a piano key is pressed, a hammer strikes a string, and the string vibrates. The vibration is transmitted to the air and perceived as sound. The vibrating object alternately compresses and expands the surrounding air particles. The sound then propagates through a medium (air, water, metal, etc.) as the particles of the medium vibrate, pressing together and moving apart. The key concepts here are pressure waves and repetitive vibration.

Compression is the phase in which the particles of the medium move closer together, and rarefaction is the phase in which they move apart. Sound propagates through the repetition of these two processes, and this is how we hear it. Sounds can be distinguished by many characteristics, and the following factors play a major role in defining them.

- Frequency: The frequency is the number of vibrations per second, measured in hertz (Hz). For example, 440 vibrations per second is 440 Hz. The higher the frequency, the higher the pitch; the lower the frequency, the lower the pitch. Human ears can usually hear sounds from about 20 Hz to 20,000 Hz.

- Amplitude: The amplitude is the magnitude of the vibration and determines the volume of the sound. It is an important determinant of the “strength” of a sound wave: the larger the amplitude, the louder the sound, and the smaller the amplitude, the softer the sound.

- Wavelength: The wavelength is the distance in space occupied by one cycle of vibration. The longer the wavelength, the lower the frequency, and the shorter the wavelength, the higher the frequency.

- Timbre: Timbre is the distinctive character of a sound, formed by combining various elements in addition to frequency and amplitude. For example, a piano and a violin sound different even when they play the same note, because each instrument has a different timbre.

To analyze sound with software, as in this study, the sound must be represented digitally, that is, converted into binary numbers and stored. Digitization samples the analog signal at regular intervals and converts each sample value into a number. Computers store these numbers and use them to reproduce the sound.

In summary, sound is essentially a physical vibration and a wave that propagates through a medium. It can be represented in two main ways, analog and digital. In either form, a sound is characterized by the combination of its frequency, amplitude, wavelength, and timbre [18–20].
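To make the digitization step concrete, the following Python sketch samples a 440 Hz sine wave at regular intervals and quantizes each sample to a 16-bit signed integer. The 44.1 kHz sampling rate and 16-bit depth are assumptions chosen because they are common audio settings; the text does not specify the values used in this study.

```python
import numpy as np

fs = 44100                 # sampling rate in Hz (assumed; common CD-quality value)
bits = 16                  # bits per sample (assumed)
n = np.arange(fs // 100)   # sample indices covering 10 ms

# The "analog" waveform evaluated only at the regular sampling instants n / fs
analog = np.sin(2 * np.pi * 440 * n / fs)

# Quantization: scale to the signed integer range and round to the nearest code
max_code = 2 ** (bits - 1) - 1          # 32767 for 16-bit audio
codes = np.round(analog * max_code).astype(np.int16)

# Each stored sample is a 16-bit binary number (two's complement)
sample = int(codes[1])
print(sample, format(sample & 0xFFFF, "016b"))

# Rounding keeps the quantization error within half of one step
error = analog - codes / max_code
print(np.max(np.abs(error)) <= 0.5 / max_code + 1e-12)
```

Raising the sampling rate captures higher frequencies, and adding bits shrinks the quantization step. Both increase the amount of data stored per second.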

3.2. Traditional method of sound analysis

Frequency analysis identifies the characteristics of a sound by decomposing it into its frequency components, mainly using the Fourier transform. The Fourier transform is a mathematical method that decomposes a complex waveform into simple frequency components (sine waves), revealing which frequencies a sound contains.

The Fourier series (for periodic signals) and the Fourier transform (for general signals) thus allow us to analyze sound waves in the frequency domain.
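A short NumPy sketch of this decomposition follows. It assumes a synthetic note built from a 220 Hz fundamental and two harmonics with amplitudes 1.0, 0.5, and 0.25; these are illustrative values, not measured piano data. Because the signal is exactly 1 s long, every component falls on an integer FFT bin, so the single-sided amplitude spectrum recovers the original amplitudes.

```python
import numpy as np

fs = 8000                  # sampling rate in Hz (assumed)
N = fs                     # 1 s of signal -> FFT bins spaced exactly 1 Hz apart
t = np.arange(N) / fs

# Synthetic "note": fundamental plus two harmonics
x = (1.0 * np.sin(2 * np.pi * 220 * t)
     + 0.5 * np.sin(2 * np.pi * 440 * t)
     + 0.25 * np.sin(2 * np.pi * 660 * t))

X = np.fft.rfft(x)                       # spectrum of a real signal (non-negative frequencies)
freqs = np.fft.rfftfreq(N, d=1 / fs)     # frequency of each bin in Hz
amps = 2 * np.abs(X) / N                 # single-sided amplitude spectrum

# Report the three strongest components
for i in np.argsort(amps)[-3:][::-1]:
    print(f"{freqs[i]:.0f} Hz: amplitude {amps[i]:.2f}")
```

With real recordings, the components rarely fall exactly on bin centers. In that case a window function is normally applied first to limit spectral leakage.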

Second, spectral analysis visually represents the frequency components obtained through the Fourier transform, showing the frequency and intensity of a sound.

A spectrogram is a graph that shows how the frequency components change over time, allowing visual analysis of how a sound evolves.

Time-domain analysis, in contrast, examines the sound waveform over time and can track changes in its amplitude.

Waveform analysis allows users to determine the sound volume, its changes over time, and the occurrence of specific events.

The waveform is a plot of the variation of an analog or digital signal over time, from which amplitude and periodicity can be observed.

By analyzing the amplitude of a sound over time, users can also track volume changes. For example, they can determine the beginning and end of a specific sound, or analyze how the sound decays or grows.
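Below is a minimal sketch of tracking amplitude over time to find where a sound begins and ends. It assumes a synthetic test signal: a 440 Hz tone between 0.2 s and 0.5 s, surrounded by silence. The helper names `frame_rms` and `find_start_end`, the 10 ms frame length, and the -40 dB threshold are hypothetical choices for illustration, not taken from the authors' software.

```python
import numpy as np

def frame_rms(x, frame=441):
    """RMS amplitude of consecutive non-overlapping frames (441 samples = 10 ms at 44.1 kHz)."""
    n = len(x) // frame
    return np.sqrt(np.mean(x[: n * frame].reshape(n, frame) ** 2, axis=1))

def find_start_end(x, fs, frame=441, threshold_db=-40.0):
    """Return (start, end) in seconds of the frames within threshold_db of the loudest frame."""
    rms = frame_rms(x, frame)
    level_db = 20 * np.log10(rms / rms.max() + 1e-12)   # level relative to the maximum
    active = np.flatnonzero(level_db > threshold_db)
    return active[0] * frame / fs, (active[-1] + 1) * frame / fs

fs = 44100
start_i, end_i = fs // 5, fs // 2            # tone from 0.2 s to 0.5 s
x = np.zeros(fs)                              # 1 s of silence
t = np.arange(end_i - start_i) / fs
x[start_i:end_i] = np.sin(2 * np.pi * 440 * t)

start, end = find_start_end(x, fs)
print(f"sound starts at {start:.2f} s and ends at {end:.2f} s")
```

The frame length sets the time resolution of the estimate. Shorter frames locate events more precisely but give a noisier envelope.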

Waveform analysis can also extract characteristic features of a waveform, which is particularly important for classifying or characterizing acoustic signals [21].

3.3. The characteristics of piano sound

The piano has a hammer for each key; when a key is pressed, its hammer strikes the string to produce a sound. The length, thickness, and tension of the strings and the size and material of the hammers are the main factors that determine the piano’s tone. Each note is produced by vibration at a specific frequency, and frequency is the main factor that determines pitch. The fundamental frequencies of piano notes range from about 27.5 Hz for the lowest note, A0, to about 4,186 Hz for the highest note, C8.

The pitch is directly related to the frequency, and the higher the frequency, the higher the pitch, and the lower the frequency, the lower the pitch.

The piano’s notes are organized into octaves, each divided into 12 semitones, and raising a note by one octave doubles its frequency. For example, A4 is 440 Hz, and A5 is 880 Hz.
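The octave relationship can be written as a formula. Assume 12-tone equal temperament with A4 = 440 Hz. Real pianos are usually stretch-tuned, so measured frequencies deviate slightly, especially at the extremes of the keyboard. Under this assumption, key n of an 88-key piano has frequency f(n) = 440 · 2^((n - 49)/12). The sketch below uses a hypothetical helper, `key_frequency`, to check the values quoted in this section.

```python
def key_frequency(n: int, a4: float = 440.0) -> float:
    """Frequency in Hz of piano key n (1 = A0, 49 = A4, 88 = C8) in 12-tone equal temperament."""
    return a4 * 2 ** ((n - 49) / 12)

# Twelve keys up doubles the frequency; each semitone multiplies it by 2**(1/12)
for name, key in [("A0", 1), ("C1", 4), ("C4", 40), ("A4", 49), ("A5", 61), ("C8", 88)]:
    print(f"{name}: {key_frequency(key):.2f} Hz")
```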

Tone is an element that makes sounds different even at the same frequency. In other words, it can be said to be the “unique color” of a sound.

The tone of the piano is largely determined by its harmonic structure. Because piano notes are non-sinusoidal, each note contains several harmonics in addition to its fundamental frequency. This harmonic pattern makes the piano’s tone unique.

For example, the mid-range of the piano has a soft and warm tone, the high-pitched range has clear and sharp characteristics, and the low-pitched range has deep and strong characteristics.

Dynamics refers to the intensity, or volume, of a sound. On a piano, the volume depends on how forcefully the keys are pressed, and the instrument allows delicate control of decreasing and increasing dynamics. For example, piano (p) indicates a soft sound and forte (f) a loud one. Intermediate levels such as mezzo-piano (mp) and mezzo-forte (mf) are also possible.

The sound of the piano also depends on temporal characteristics such as attack, duration, and decay. Attack is the rapid change at the moment a note begins. A piano note begins very quickly, and its volume is determined when the hammer strikes the string.

The piano’s attack is nearly instantaneous, faster than that of most other instruments. A piano note sounds immediately, whereas string or woodwind instruments can begin more smoothly. Duration describes how long a sound lasts after it is played. A piano note gradually decays because the string’s vibration weakens due to air resistance and other factors. The length, thickness, and tension of the strings affect this duration. Pianists can lengthen the sound with the damper pedal: while it is pressed, the strings keep ringing without being damped.

In terms of waveform, the piano produces non-sinusoidal sound. Its waveform is complex rather than a pure sine wave, and several frequency components mix to create a rich tone. Because piano sound waves are rich in harmonics, they have varied tonal characteristics. For example, the lower register contains more low-frequency components, and high-frequency components are more prominent in the upper register.

The notes generated by the piano can be divided into low, medium, and high notes, each range having the following characteristics.

- Low notes (A0 to C4): These contain deep, rich, and strong low-frequency components. For example, C1 has a very low frequency of 32.7 Hz.

- Middle notes (C4 to C5): This range, similar to that of the human voice, is the core range of the piano. Middle notes have a balanced sound and a warm tone.

- High notes (C6 to C8): These have a sharp, clear sound, which becomes even clearer at fast tempos and in the highest notes.

In conclusion, the sound of the piano is shaped by a combination of factors, including frequency, tone, attack and duration, dynamics, and decay. The piano has very rich and complex harmonics, and its sound is characterized by fast attacks and wide dynamic control. Its tone also depends on the properties of the strings and the material of the hammers, which gives the piano its unique and distinctive sound.

3.4. Characteristics of classical piano music

Classical piano music usually includes music of the Classical and Romantic periods. Its style has characteristic elements in musical structure, dynamics, emotional expression, and performance technique.

First, complex chords and colorful tones are important features of classical piano music. Because the piano can play multiple notes at once, it excels at expressing a wide variety of chords.

Physically, a chord is a superposition of sound waves, combining several frequency components into a more complex and richer sound. Each note contributes its own harmonics, which gives the music a moving and colorful tone.

Second, classical piano music controls dynamics delicately. It can express dramatic changes and subtle emotions by moving between piano (p) and forte (f). It also shapes rhythm and melody with techniques such as precise rhythms, arpeggios, trills, and scales.

These techniques involve fast, repeated notes, which produce more complicated waveform fluctuations. Lastly, classical piano music focuses on expressing emotional depth through epic development and emotional flow. It uses dynamics and rhythm delicately to express dramatic contrast or emotional climaxes.

From the perspective of wave physics, the notes of classical piano music are not simple sine waves but complex non-sinusoidal waveforms. As a result, piano sound contains harmonics that make its tone richer and more colorful, a quality that is very important in classical music.

- Complex waveform structure. The waveform consists of a fundamental frequency and multiple overtones. A piano tone approximately follows its harmonic series: in addition to the fundamental frequency, overtones at 2x, 3x, and 4x the fundamental frequency are also present. These overtones lie above the fundamental and reinforce or alter its character, and the tone of the piano is formed by the way they combine nonlinearly. For example, low notes have many strong overtones, while high notes have relatively few, weak ones.

- Quick attack and sudden waveform changes. A piano note begins quickly; this part is called the attack. At the moment a note begins, the waveform changes drastically: the sound pressure rises very quickly as soon as the hammer hits the string and then decays rapidly. The attack is very short and appears as a transient spike with rapidly changing frequency content. It takes the form of a sharp peak followed by a quick decrease in amplitude.

- Nonlinearity. The sound of classical piano music has nonlinear characteristics, and multiple nonlinear interactions can produce unexpected waveforms even at the same frequency. For example, at the moment the hammer hits the string, complex nonlinear oscillations can occur depending on the hammer’s mass and speed and on the string tension. The result is a mixed waveform containing components beyond the fundamental frequency, which forms the instrument’s characteristic tone.
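These properties can be imitated qualitatively with a simple additive-synthesis sketch. It is not a physical model of a piano and does not reproduce the nonlinear effects described above. The 1/k overtone amplitudes, the 5 ms linear attack, and the faster exponential decay of higher overtones are assumptions. They were chosen only to reproduce the behavior described above: a non-sinusoidal waveform, a quick attack, and a decaying tail. The function name `piano_like_note` is hypothetical.

```python
import numpy as np

def piano_like_note(f0, fs=44100, dur=2.0, n_harm=8, attack=0.005, tau=0.6):
    """Additive synthesis of a decaying, harmonic-rich tone (illustrative only)."""
    t = np.arange(int(fs * dur)) / fs
    tone = np.zeros_like(t)
    for k in range(1, n_harm + 1):
        amp = 1.0 / k                    # assumed: overtone k has amplitude 1/k
        decay = np.exp(-t * k / tau)     # assumed: higher overtones die out faster
        tone += amp * decay * np.sin(2 * np.pi * k * f0 * t)
    ramp = np.minimum(t / attack, 1.0)   # very short linear attack
    return t, ramp * tone

fs = 44100
t, x = piano_like_note(261.63, fs=fs)    # roughly C4

# Quick attack followed by decay: compare the first 50 ms with the last 100 ms
early_peak = np.max(np.abs(x[: int(0.05 * fs)]))
late_peak = np.max(np.abs(x[-int(0.1 * fs):]))
print(f"early peak {early_peak:.2f}, late peak {late_peak:.3f}")

# Non-sinusoidal: strong energy at the second harmonic, little between harmonics
spectrum = np.abs(np.fft.rfft(x[: fs // 2]))
freqs = np.fft.rfftfreq(fs // 2, d=1 / fs)
second = spectrum[np.argmin(np.abs(freqs - 2 * 261.63))]
between = spectrum[np.argmin(np.abs(freqs - 400.0))]
print(f"2nd harmonic / 400 Hz magnitude ratio: {second / between:.0f}")
```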

To sum up, classical piano music is very complex and colorful, from its musical composition to the physical characteristics of its sound. Musically, it is characterized by complex chords and varied dynamics, and it places great importance on emotional expression. These musical characteristics appear physically in the wave properties of the sound: complex waveforms, fast attacks, dynamic frequency changes, and so on. They are well reflected in the piano’s harmonic structure and nonlinear vibration. The rich and complex sound waves of the piano help convey the emotional depth that classical music aims to express.

4. Method

4.1. Mathematical techniques

4.1.1. Short-time Fourier transform (STFT)

For a given signal x(t), the short-time Fourier transform (STFT) is defined as follows:

$$STFT(t,\omega) = \int_{-\infty}^{\infty} x(\tau) \, w(\tau - t) \, e^{-j\omega \tau} \, d\tau$$

Here, x(t) is the time-domain signal to be analyzed. w(τ - t) is a window function centered at time t, written w to distinguish it from the angular frequency ω.

The window function w extracts only a particular section of the signal. Typical window functions include the Hamming, Hann (Hanning), and Gaussian windows. The shorter the window, the higher the time resolution; the longer the window, the higher the frequency resolution. The signal is analyzed by sliding w(τ - t) along the time axis t.

The STFT result is complex-valued and carries two kinds of information. The magnitude |STFT(t,ω)| represents the strength of each frequency component, and the phase ∠STFT(t,ω) represents its phase.

However, signals analyzed on a computer are discrete, so the STFT for continuous signals must be discretized. For a discrete signal x[n], the STFT is defined as follows:

$$STFT[m,k] = \sum_{n=-\infty}^{\infty} x[n] \, w[n-m] \, e^{-j\frac{2\pi kn}{N}}$$

where x[n] denotes the discrete signal and w[n - m] is the window function positioned at time index m.

N is the FFT size (usually equal to the window length), and it determines the frequency resolution. Adjacent frequency bins are f_s/N apart, where f_s is the sampling rate.
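The discrete definition can be turned into a short NumPy sketch. It assumes a Hann window (`np.hanning`), N = 2048, and a hop of 512 samples between frames; none of these values come from the authors' software. Each frame uses a frame-local time index, which differs from the equation above only by a phase factor, so the magnitudes |STFT[m,k]| are identical. At fs = 44.1 kHz the bin spacing is fs/N ≈ 21.5 Hz. The 440 Hz test tone therefore peaks in the nearest bin (about 431 Hz), which illustrates the resolution limit set by N.

```python
import numpy as np

def stft(x, n_fft=2048, hop=512):
    """Discrete STFT with a Hann window; returns an array of shape (n_frames, n_fft // 2 + 1)."""
    window = np.hanning(n_fft)
    n_frames = 1 + (len(x) - n_fft) // hop
    # Frame m covers samples m*hop ... m*hop + n_fft - 1, multiplied by the window
    frames = np.stack([x[m * hop : m * hop + n_fft] * window for m in range(n_frames)])
    # rfft keeps only the non-negative frequencies, which suffice for a real signal
    return np.fft.rfft(frames, axis=1)

fs = 44100
t = np.arange(fs) / fs
x = np.sin(2 * np.pi * 440 * t)          # 1 s test tone

S = stft(x)
mag = np.abs(S)                           # |STFT[m, k]|, the spectrogram
freqs = np.fft.rfftfreq(2048, d=1 / fs)   # bin k corresponds to k * fs / N Hz
peak_hz = freqs[mag.mean(axis=0).argmax()]
print(S.shape, round(peak_hz, 1))
```

Plotting `20 * np.log10(mag.T + 1e-12)` against time and frequency gives the familiar spectrogram image.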

4.1.2. Autocorrelation

Autocorrelation is an important tool for analyzing the self-similarity of signals, measuring how repetitive or periodic they are over time.

The autocorrelation function R_x (τ) of the continuous signal x(t) is defined as follows:

$$R_x(\tau) = \int_{-\infty}^{\infty} x(t) \cdot x(t+\tau) \, dt$$

Autocorrelation functions have many applications in sound analysis. First, they are well suited to periodicity analysis. The autocorrelation of a periodic signal peaks at lags equal to multiples of its period, so it can be used to estimate the fundamental frequency of a voice signal.

They are also used to distinguish noise from useful components in a signal. Noise is generally weakly correlated over time, whereas useful signals tend to be strongly correlated.

The autocorrelation at zero lag also gives the energy of the signal:

$$R_x(0) = \int_{-\infty}^{\infty} x^2(t) \, dt \quad (\text{a continuous signal})$$

$$R_x[0] = \sum_{n=0}^{N-1} x^2[n] \quad (\text{discrete signal})$$

Finally, autocorrelation can be used to estimate the period (e.g., the pitch) of a speech signal or to detect rhythm in a music signal.
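Below is a sketch of autocorrelation-based pitch estimation with `np.correlate`. It assumes a clean 220 Hz test tone sampled at 8 kHz and a search range of 50 to 1000 Hz; both are hypothetical choices. The sketch also checks that R[0] equals the signal energy, as in the discrete formula above. The peak is located at an integer lag, so the estimate is quantized: 8000/36 ≈ 222.2 Hz rather than exactly 220 Hz.

```python
import numpy as np

def autocorr(x):
    """Unnormalized autocorrelation R[k] for lags k = 0 .. len(x) - 1."""
    r = np.correlate(x, x, mode="full")
    return r[len(x) - 1:]               # keep non-negative lags only

def estimate_f0(x, fs, fmin=50.0, fmax=1000.0):
    """Pitch estimate: the lag of the largest autocorrelation peak within [fmin, fmax]."""
    r = autocorr(x)
    lag_min = int(fs / fmax)            # shortest period considered
    lag_max = int(fs / fmin)            # longest period considered
    lag = lag_min + np.argmax(r[lag_min:lag_max])
    return fs / lag

fs = 8000
t = np.arange(2048) / fs
x = np.sin(2 * np.pi * 220 * t)

r = autocorr(x)
print(np.isclose(r[0], np.sum(x ** 2)))   # R[0] is the signal energy
print(round(estimate_f0(x, fs), 1))
```

Excluding very short lags matters because R[k] is always largest at k = 0. Without the lower bound, the search would simply return zero lag.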

Autocorrelation functions are closely related to Fourier transforms. In particular, according to the Wiener-Khinchin theorem:

$$R_x(\tau) \overset{\text{Fourier Transform}}{\longleftrightarrow} |X(\omega)|^2$$

In other words, the Fourier transform of the autocorrelation function R_x(τ) is the squared magnitude |X(ω)|^2 of the signal’s Fourier transform X(ω), that is, its power spectrum. This allows the autocorrelation function to be computed quickly via the FFT:

$$R_x[k] = \mathcal{F}^{-1}\left\{ \left| \mathcal{F}\{x\} \right|^2 \right\}[k]$$

The autocorrelation function is a very important tool in signal analysis, used for periodicity detection, noise reduction, and energy calculation. For discrete signals, it can be computed quickly with the FFT. It has a wide range of applications, including acoustic analysis, speech recognition, and biosignal analysis.
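The FFT route can be checked numerically. One subtlety is that the inverse DFT of |X[k]|^2 gives the circular autocorrelation. The sketch below therefore zero-pads the signal to at least 2N - 1 samples before transforming, which makes the result match the linear autocorrelation from `np.correlate`. The random test signal is an arbitrary assumption.

```python
import numpy as np

def autocorr_fft(x):
    """Linear autocorrelation R[k], k = 0 .. N-1, computed via the FFT."""
    n = len(x)
    nfft = 2 * n                              # zero-padding >= 2N - 1 avoids circular wrap-around
    X = np.fft.rfft(x, nfft)                  # F{x}
    r = np.fft.irfft(np.abs(X) ** 2, nfft)    # F^{-1}{|F{x}|^2}
    return r[:n]

rng = np.random.default_rng(0)
x = rng.standard_normal(1000)                 # arbitrary test signal
direct = np.correlate(x, x, mode="full")[len(x) - 1:]
print(np.allclose(autocorr_fft(x), direct))
```

The direct method costs O(N^2) operations, while the FFT route costs O(N log N). The difference becomes significant for recordings several seconds long.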

4.1.3. Non-negative matrix factorization (NMF)

Non-negative matrix factorization (NMF) is a technique that decomposes a non-negative matrix into two non-negative matrices, and is used in various fields such as data analysis, dimensionality reduction, signal processing, and text mining. In this paper, we will explain this mathematically and provide intuition.

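NMF approximates a non-negative matrix X (m × n) as the product of two non-negative matrices, W (m × r) and H (r × n), where the rank r is usually much smaller than m and n. The factors are found by solving the following optimization problem:

$$\min_{W \ge 0,\; H \ge 0} \| X - WH \|_F^2$$

Here ‖·‖_F is the Frobenius norm, so the objective is the squared Euclidean distance between X and WH, with each matrix treated as a vector of its entries.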

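Because of the non-negativity constraints and the product WH, this is a non-convex optimization problem. A widely used approach is to alternate the following multiplicative updates, where ∘ and the fraction bar denote element-wise multiplication and division: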

$$H \leftarrow H \circ \frac{W^T X}{W^T W H}$$

$$W \leftarrow W \circ \frac{X H^T}{W H H^T}$$

The two updates are applied alternately until a convergence condition is met (e.g., the change in the Frobenius norm falls below a threshold). Each update multiplies the current entries by ratios of non-negative quantities, so W and H remain non-negative throughout without any explicit constraint handling.
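Below is a direct NumPy translation of the two update rules, including the convergence test described above. The small constant `eps` is an assumption that is not part of the formulas; it guards against division by zero. The random rank-3 test matrix is purely illustrative, and `nmf_multiplicative` is a hypothetical name.

```python
import numpy as np

def nmf_multiplicative(X, rank, max_iter=500, tol=1e-6, eps=1e-10, seed=0):
    """Frobenius-norm NMF via multiplicative updates (illustrative sketch)."""
    rng = np.random.default_rng(seed)
    m, n = X.shape
    W = rng.random((m, rank)) + eps      # strictly positive random initialization
    H = rng.random((rank, n)) + eps
    errors = [np.linalg.norm(X - W @ H, "fro")]
    for _ in range(max_iter):
        H *= (W.T @ X) / (W.T @ W @ H + eps)   # element-wise update of H
        W *= (X @ H.T) / (W @ H @ H.T + eps)   # element-wise update of W
        errors.append(np.linalg.norm(X - W @ H, "fro"))
        # Stop when the Frobenius error no longer changes meaningfully
        if abs(errors[-2] - errors[-1]) < tol * errors[0]:
            break
    return W, H, errors

rng = np.random.default_rng(1)
X = rng.random((20, 3)) @ rng.random((3, 30))   # non-negative matrix of rank 3
W, H, errors = nmf_multiplicative(X, rank=3)
print(f"initial error {errors[0]:.3f}, final error {errors[-1]:.3f}, iterations {len(errors) - 1}")
```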

NMF is a simple yet powerful matrix decomposition technique that is very useful for extracting patterns and interpreting hidden structure in data. However, it can be used effectively only when users understand its limitations and characteristics and choose appropriate parameters, such as the number of components, for the data.

4.2. Physical Tools

In this study, we implemented the acoustic signal analysis tool in code, with its own user interface. The tool is modular, allowing users to mix and match different components to tailor the interface and features to specific analysis requirements.

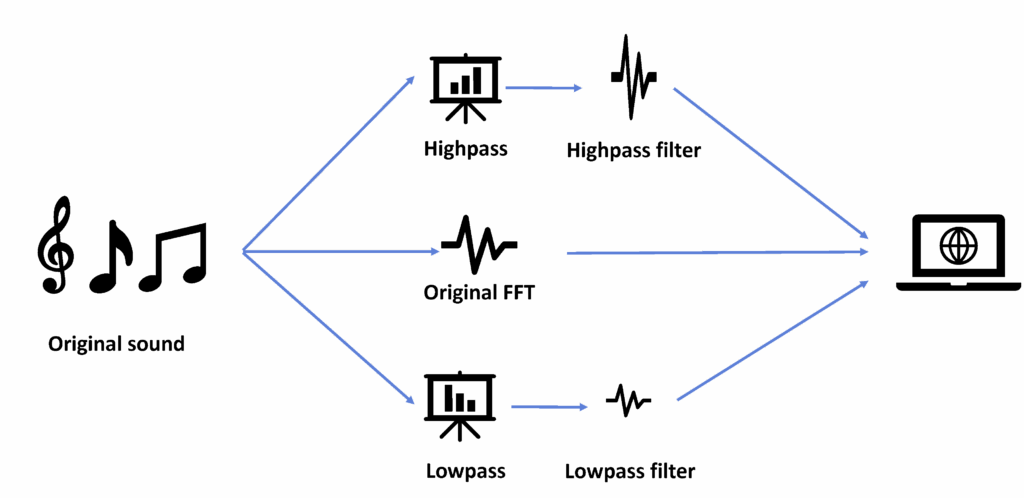

The key idea here is the analysis chain. The signal itself, or the results of analyzing it, are passed between windows to form a sequence of functional blocks, including file input, data acquisition modules, FFT analysis, and measuring instruments.

Only a single segment of the signal is needed to visually represent a musical signal. These tools were used with pre-recorded offline signals to efficiently emulate real-time signal analysis.

In this framework, any sequence of values is treated as a “signal”. This includes arrays of X/Y values such as audio signals, spectra, and other data representations. Because of this broad definition, every sequence is assigned a sampling rate, even data that did not come from digitally sampling an analog signal. For example, the “sampling rate” of an FFT result is the number of bins (values) per unit of frequency (Hz) on the X-axis.

This framework enables flexible analysis, such as performing an FFT on the result of a previous FFT. Its biggest advantage is that it places few restrictions on analytical exploration. We use it to compare and analyze classical piano performances while developing our own methodology to obtain the desired insights.
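The idea can be sketched briefly: every sequence carries its own sampling rate, so blocks can be chained freely, even FFT after FFT. The `Signal` class and the `fft_block` and `chain` functions are hypothetical illustrations of the concept, not the authors' code. Following the text, the "sampling rate" of a spectrum is taken to be the number of bins per hertz.

```python
from dataclasses import dataclass
import numpy as np

@dataclass
class Signal:
    values: np.ndarray
    rate: float  # samples per unit of the X-axis (per second for audio, bins per Hz for a spectrum)

def fft_block(sig: Signal) -> Signal:
    """Magnitude spectrum of any Signal; the result is itself a Signal."""
    mag = np.abs(np.fft.rfft(sig.values))
    bin_spacing = sig.rate / len(sig.values)    # X-axis distance between bins (Hz for audio input)
    return Signal(mag, rate=1.0 / bin_spacing)  # bins per X-axis unit

def chain(sig: Signal, *blocks):
    """Pass a Signal through a sequence of blocks, like windows in an analysis chain."""
    for block in blocks:
        sig = block(sig)
    return sig

fs = 8000
t = np.arange(fs) / fs
audio = Signal(np.sin(2 * np.pi * 100 * t), rate=fs)

spectrum = chain(audio, fft_block)           # 1 bin per Hz, peak at index 100
twice = chain(audio, fft_block, fft_block)   # an FFT applied to an FFT result
print(spectrum.rate, int(np.argmax(spectrum.values)), len(twice.values))
```

Because each block only reads `values` and `rate`, new blocks (filters, RMS meters, plots) can be inserted anywhere in the chain without changing the others.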

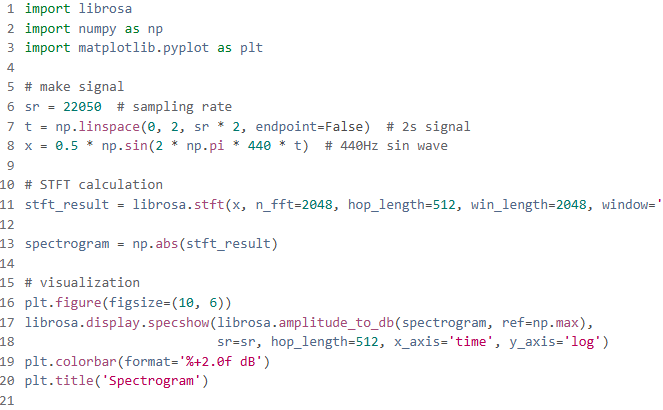

The code above computes the STFT of a 440 Hz sine wave in Python and visualizes it as a spectrogram.

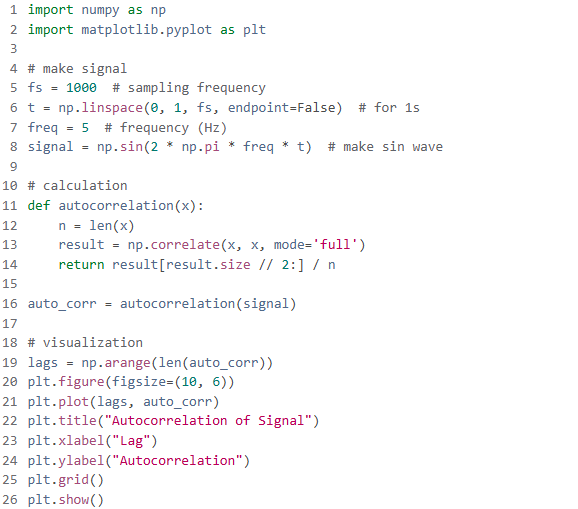

The code above generates a 5 Hz sine wave and computes its autocorrelation function using np.correlate. The result is then plotted against the lag.

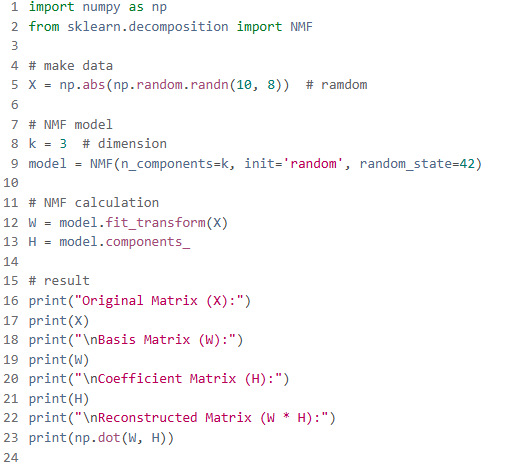

In Python, NMF can be implemented easily with the scikit-learn (sklearn) library.
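For reference, a minimal scikit-learn sketch follows, using the library's NMF class, `sklearn.decomposition.NMF`. The input is a synthetic "spectrogram" built from two harmonic templates and their time activations. This data is an assumption for illustration, not recorded piano data. The columns of W play the role of spectral templates (one per note), and the rows of H the role of their activations over time.

```python
import numpy as np
from sklearn.decomposition import NMF

n_bins, n_frames = 64, 40

# Two hypothetical "notes", each a harmonic spectral template over frequency bins
templates = np.zeros((n_bins, 2))
templates[[8, 16, 24, 32], 0] = [1.0, 0.5, 0.3, 0.2]
templates[[10, 20, 30, 40], 1] = [1.0, 0.6, 0.4, 0.2]

# Their activations over time: note 0 sounds first and decays, note 1 enters and overlaps
activations = np.zeros((2, n_frames))
activations[0, :20] = np.linspace(1.0, 0.1, 20)
activations[1, 15:] = np.linspace(1.0, 0.1, 25)

X = templates @ activations            # synthetic magnitude "spectrogram" (bins x frames)

model = NMF(n_components=2, init="nndsvda", max_iter=1000, random_state=0)
W = model.fit_transform(X)             # spectral templates, shape (64, 2)
H = model.components_                  # activations over time, shape (2, 40)

rel_err = np.linalg.norm(X - W @ H) / np.linalg.norm(X)
print(W.shape, H.shape, f"relative error {rel_err:.4f}")
```

With a real recording, X would be the magnitude of an STFT, and n_components would need to be chosen to match the number of distinct notes or sound sources expected.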

4.3. The specifications of a piano

The piano used for the performance and recording was a Yamaha GC1 grand piano made in Japan. It has 88 keys, a soft pedal (left), a sostenuto pedal (center), and a damper pedal (right). The lid was open during recording.

The depth of a grand piano varies by model, usually from 151 cm to 188 cm. For the grand piano used in this study, the total length from the keyboard to the end of the longest string is 161 cm. The actual sound and the recorded sound may therefore differ if sinusoidal waves are not fully formed over that length.

4.4. Characteristics of the performed music and the performers

4.4.1. Twinkle, Twinkle, Little Star

The original piece is Mozart’s 12 Variations on “Ah, vous dirai-je, Maman” in C major, K. 265, popularly known as “Twinkle, Twinkle, Little Star”.

The theme is a simple folk melody of 12 bars. It is in C major and has a clear, transparent sound pattern.

4.4.2. Gavotte composed by Cornelius Gurlitt

In this paper, we chose the Gavotte by Cornelius Gurlitt because its relatively fast rhythm shows the connection and separation of notes and changes in articulation, namely legato and staccato playing. Its phrases are also clearly delineated in the score, so they can be clearly visualized in the waveform graph.

A total of five notes are played legato up to the first note of the next bar, followed by two staccato notes. By examining this passage closely, we can distinguish the waveform differences between legato and staccato playing.

Bars 9 to 12 contain a gradual crescendo. Bars 13 to 16 are played quietly with the right hand alone, without the left hand. Changes in volume can therefore be examined within a single piece.

4.4.3. Rachmaninov Piano Concerto No. 2 in C minor, Op. 18

In the first seven bars, chords played simultaneously by both hands alternate with the bass F in the left hand. In addition, the inner voice of the right hand changes in every bar, so it is easy to observe how the sound waves change with the right-hand chords.

The volume also increases gradually from pp to ff, so we can check whether the impression of “something approaching from a distance” also appears in the waveform graph.

4.4.4. Characteristics of performers

- Younju Kim, Female: 166 cm, 54 kg. She has relatively long arms and moves well. However, because her arm movements are large, force is not transmitted quickly to her fingertips, which makes strong strokes difficult. Her sound resonates well, but its amplitude is not large.

- Juhyun Ku, Female: 163.5 cm, 60 kg; hand span from C to the E of the next octave. Her arms are heavier and her fingertips firmer than those of the other pianists. She moves her arms little and keeps her fingertips in contact with the keys to control the speed.

- Hyoeun Park, Female: 161 cm, 47 kg. Her arms are light, she moves quickly, and she produces accurate sounds. Her movements at the piano are large, but her hands stay in contact with the keys only briefly.

- Eunsung Jekal, Female: 158 cm, 42 kg, beginner. Unlike the other pianists, she recorded on a Kawai digital piano. She has been learning for about a year, so she makes almost no arm movements and has much less strength than the professional pianists.

5. Results

5.1. Magnitude of sounds

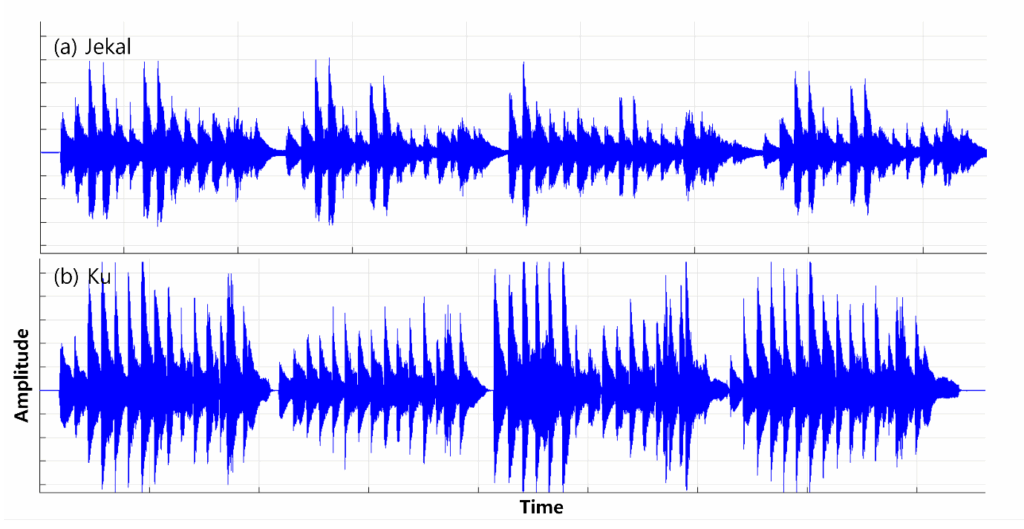

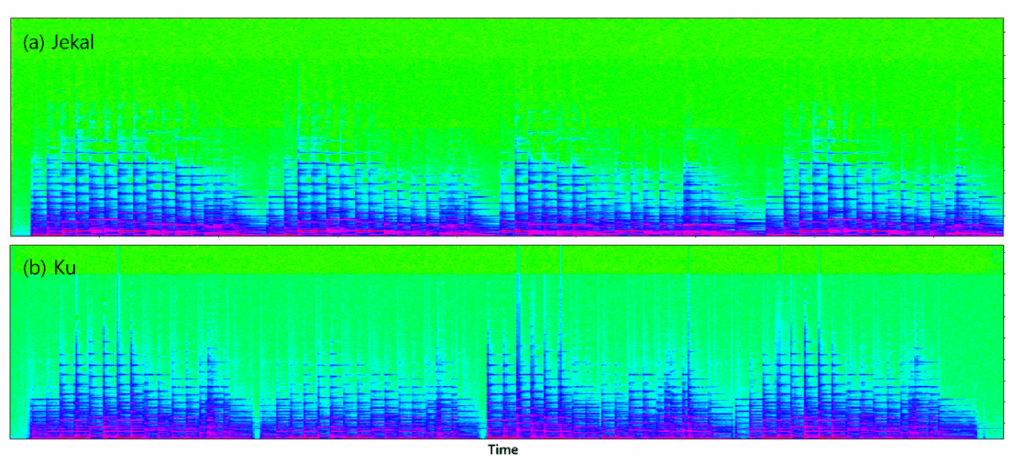

Root Mean Square (RMS) reflects the average power of a signal and is the basic measure for quantitatively comparing sound magnitudes, as shown in Fig. 5. In this paper, the two recorded files were imported into the software, and their RMS values were calculated with MATLAB's rms function. Next, loudness was measured in Loudness Units relative to Full Scale (LUFS). LUFS is an internationally standardized loudness measure (ITU-R BS.1770) that weights the signal according to how loud it is perceived by the human ear. The LUFS values of the two performances were compared using the Loudness Normalization function. Because LUFS values are negative, a value closer to zero indicates a louder signal; for example, -12 LUFS is louder than -15 LUFS. Finally, levels in decibels (dB) were analyzed, as shown in Fig. 6. Decibel levels compare loudness on the basis of the peak or average amplitude of the signal. The Peak Level and Average Level were measured with the authors' own software, and the peak amplitude was calculated with MATLAB's max(abs(signal)).

| Performer | RMS | LUFS | Dynamic range |
|---|---|---|---|
| Jekal | -20.5 dB | -15 LUFS | 10 dB |
| Ku | -18.2 dB | -12 LUFS | 14 dB |

In summary, Ku produces an overall louder sound (higher RMS and LUFS values) and a wider dynamic range (14 dB versus 10 dB), which suggests a more expressive performance.
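The measurements above were made with MATLAB (`rms`, `max(abs(signal))`) and the authors' own software. As a minimal sketch, assuming a mono recording already loaded as a NumPy float array scaled to [-1, 1], the same RMS and peak quantities can be expressed in Python and converted to dBFS. The paper does not say how its dynamic range was computed, so the `dynamic_range` below uses a hypothetical definition (the spread between the 95th and 10th percentiles of frame-wise RMS levels); the function name `level_stats` and the frame length are likewise illustrative. LUFS is not reproduced here because it additionally requires the K-weighting filter and gating specified in ITU-R BS.1770.

```python
import numpy as np

def level_stats(signal, frame_len=2048, eps=1e-12):
    """RMS level and peak level in dBFS, plus a frame-based dynamic range in dB.

    Assumes `signal` is a mono float array scaled to [-1, 1] and at least
    `frame_len` samples long.
    """
    x = np.asarray(signal, dtype=float)
    rms = np.sqrt(np.mean(x ** 2))        # same quantity as MATLAB rms(x)
    peak = np.max(np.abs(x))              # same quantity as MATLAB max(abs(x))
    rms_db = 20 * np.log10(rms + eps)
    peak_db = 20 * np.log10(peak + eps)

    # Hypothetical dynamic-range definition (not the paper's): spread between
    # loud (95th percentile) and quiet (10th percentile) frame RMS levels.
    n_frames = len(x) // frame_len
    frames = x[: n_frames * frame_len].reshape(n_frames, frame_len)
    frame_db = 20 * np.log10(np.sqrt(np.mean(frames ** 2, axis=1)) + eps)
    dynamic_range = np.percentile(frame_db, 95) - np.percentile(frame_db, 10)
    return rms_db, peak_db, dynamic_range
```

For a full-scale 1 kHz sine, this gives an RMS level of about -3 dB and a peak level of about 0 dB, with a dynamic range near zero because every frame is equally loud.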

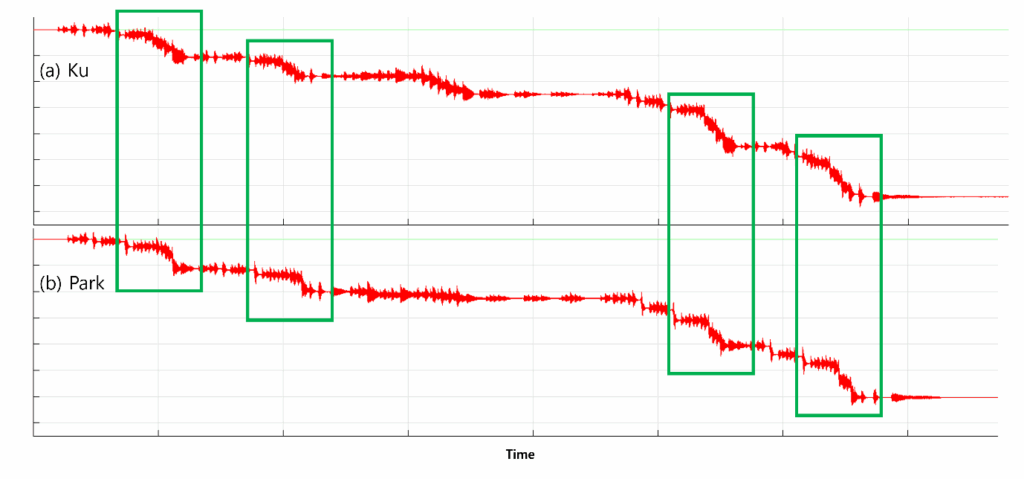

5.2. Velocity

Differentiating the waveform yields the rate of change of the signal. This rate of change can be interpreted as a speed, whose meaning depends on the physical or mathematical properties of the signal. For acoustic signals, differentiation is useful for analyzing how quickly the amplitude changes and for characterizing the signal in more depth.

The first derivative is expressed as

$$v(t) = \frac{dx(t)}{dt}$$

The second derivative is expressed as

$$a(t) = \frac{d^{2}x(t)}{dt^{2}}$$

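For a discrete (sampled) signal rather than a continuous one, the first derivative can be approximated by a forward difference:

$$v[n] = \frac{x[n+1] - x[n]}{\Delta t}$$

where \(\Delta t = 1/f_s\) is the sampling interval and \(f_s\) is the sampling rate.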
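Both derivatives can be estimated from the sampled waveform with finite differences. The following is a minimal NumPy sketch, assuming the signal is a 1-D array sampled at `fs` Hz (so the sampling interval is 1/fs); the function name is hypothetical, and the second derivative uses the standard second difference, which the text does not spell out.

```python
import numpy as np

def waveform_derivatives(x, fs):
    """Finite-difference estimates of v(t) = dx/dt and a(t) = d^2x/dt^2.

    `x` is a 1-D array of samples and `fs` the sampling rate in Hz,
    so the sampling interval is dt = 1 / fs.
    """
    x = np.asarray(x, dtype=float)
    dt = 1.0 / fs
    v = np.diff(x) / dt            # v[n] = (x[n+1] - x[n]) / dt, length N - 1
    a = np.diff(x, n=2) / dt ** 2  # a[n] = (x[n+2] - 2x[n+1] + x[n]) / dt^2, length N - 2
    return v, a
```

Because differencing amplifies high-frequency content, in practice the waveform (or its envelope) is often smoothed before differentiating.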

Figure 7 shows the change in speed obtained by differentiating the waveform. Comparing the boxed sections of (a) and (b), the change in (b) is more rapid; that is, the performer in (b) strikes one note and moves on to the next more quickly than the performer in (a). This may be due to the performer's movement or body type.

5.3. Touch intensity

To analyze mathematically the strength with which the keys are pressed (touch strength), data related to the force on the keys must be obtained from acoustic signals or physical sensors. This force can be represented by the amplitude of the acoustic signal or by the physical movement of the keys.

In this paper, amplitude-based analysis was used: because the amplitude of the acoustic signal increases when a key is pressed harder, touch intensity can be estimated from the amplitude.

For this purpose, the recorded acoustic signal was imported into the authors' own analysis software, and the average amplitude was measured by calculating the RMS value of the signal.

The RMS is calculated as follows:

$$RMS = \sqrt{\frac{1}{N} \sum_{i=1}^{N} x[i]^2}$$

where \(x[i]\) is the i-th sample of the signal and \(N\) is the number of samples.
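Applying the RMS formula to successive short frames, rather than to the whole file, turns it into an envelope that follows touch intensity over time. This is a minimal sketch, assuming a NumPy array of samples; `rms_envelope` and its frame and hop sizes are illustrative choices rather than the parameters used in the paper.

```python
import numpy as np

def rms_envelope(x, frame_len=1024, hop=512):
    """Short-time RMS: the RMS formula applied to successive frames of the signal.

    frame_len and hop are illustrative choices, not values from the paper.
    """
    x = np.asarray(x, dtype=float)
    starts = range(0, len(x) - frame_len + 1, hop)
    return np.array([np.sqrt(np.mean(x[s:s + frame_len] ** 2)) for s in starts])
```

Passages played with a harder touch appear as larger envelope values, so the envelope can be plotted alongside the waveform to compare performers section by section.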

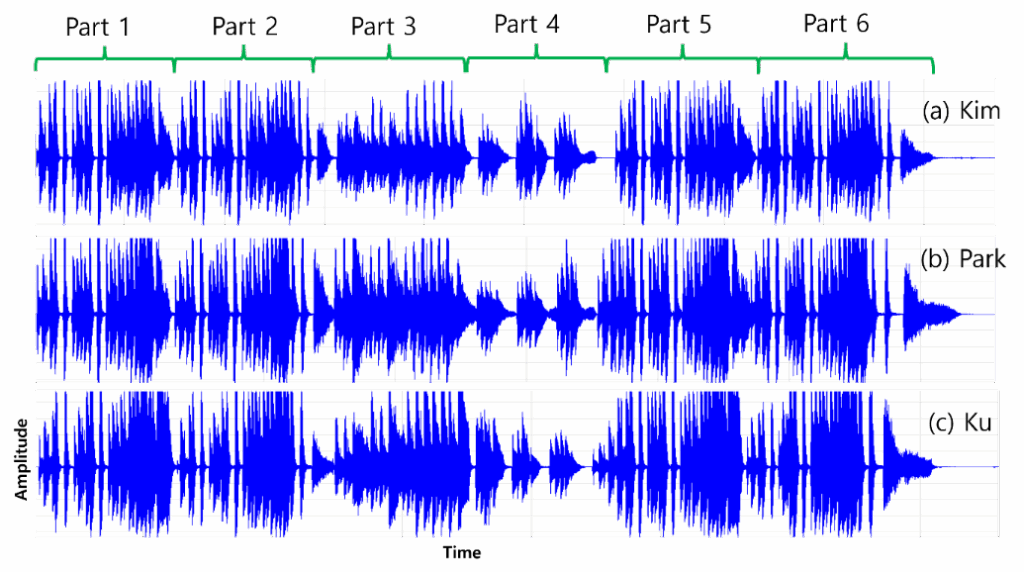

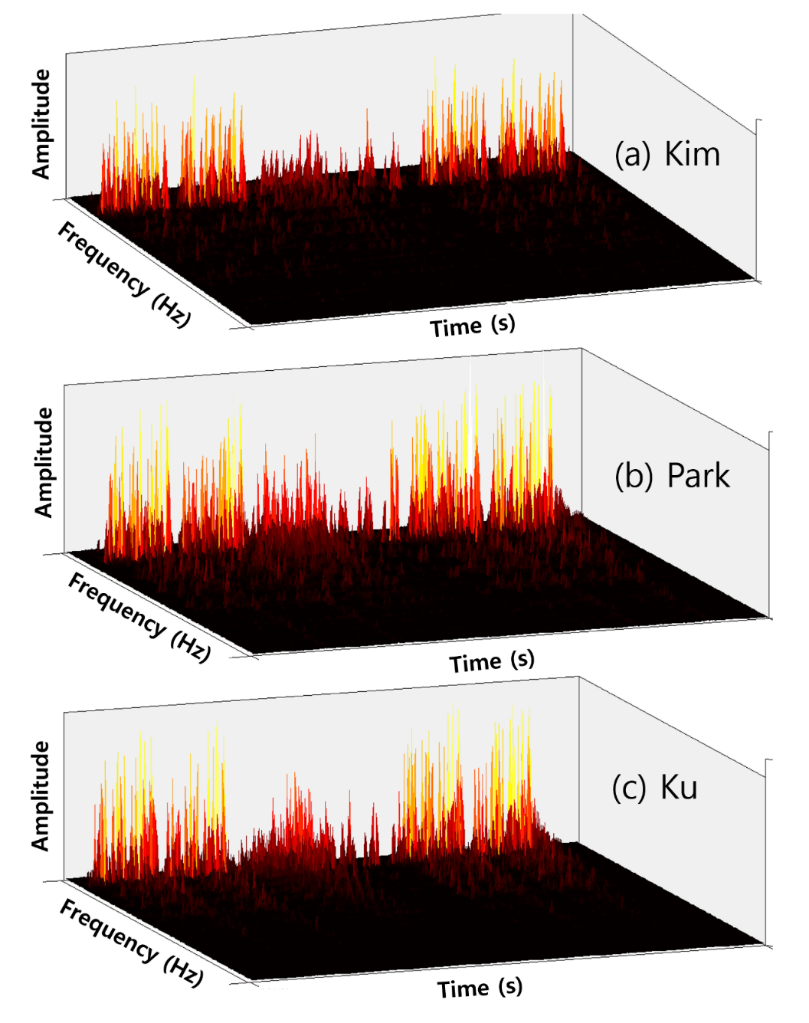

The Gavotte by Cornelius Gurlitt, shown as waveforms in Fig. 9, can be divided into six sections. Sections 1 and 2, and sections 5 and 6, are played with the same notes and rhythm. In section 4, however, the sound suddenly becomes softer, and the differences among the three pianists become clearer here. Kim's sound grows and then diminishes again, Park's becomes steadily louder, and Ku's becomes steadily softer. In section 5 as well, Kim begins loudly, continuing from section 4, whereas Park begins softly and then plays louder and louder. In practice, it is often difficult to hear such detailed changes when listening to a fast piece such as the Gavotte; the waveform graphs produced by the software, however, show the overall structure and musical shape of the piece well.

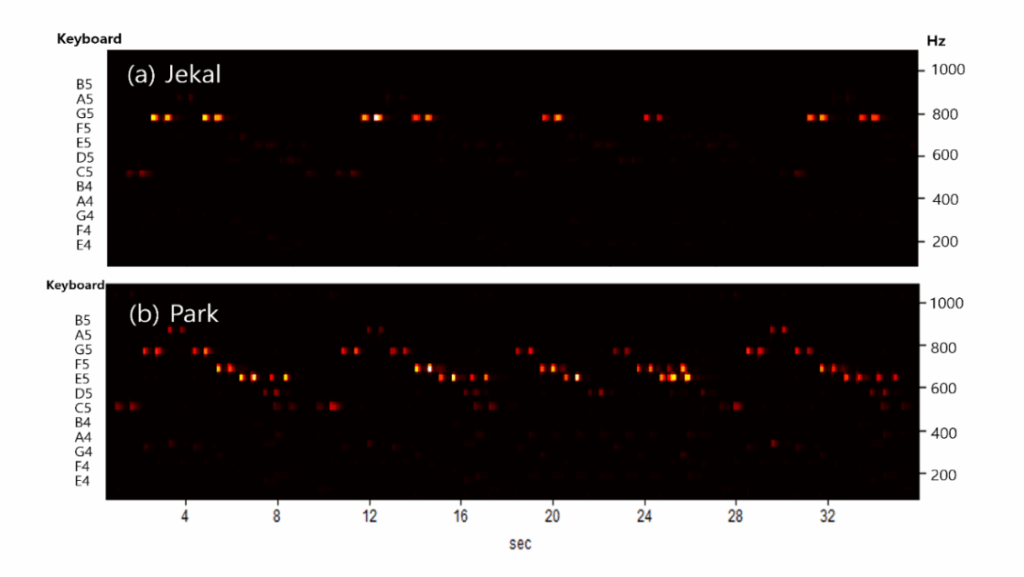

5.4. Music flow

Graph theory was used in this paper because it is useful for the structural and relational analysis of piano music; the results are shown in Fig. 10.

A graph G consists of a node set V and an edge set E and is written \(G = (V, E)\).

Because music unfolds in a definite direction, a directed graph is required. The in-degree of a node v is expressed as

$$deg_{in}(v) = |\{(u,v) \in E\}|$$

The out-degree is expressed as

$$deg_{out}(v) = |\{(v,u) \in E\}|$$

Because every edge leaves exactly one node and enters exactly one node, the in-degrees and the out-degrees, each summed over all nodes, equal the number of edges:

$$\sum_{v \in V} deg_{in}(v) = \sum_{v \in V} deg_{out}(v) = |E|$$

The entropy of the graph is defined as

$$H(G) = -\sum_{v \in V} p(v) \log p(v)$$

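where \(p(v) = \frac{deg(v)}{2|E|}\) and \(deg(v) = deg_{in}(v) + deg_{out}(v)\) is the total degree of v; because each edge contributes one in-degree and one out-degree, the values p(v) sum to 1.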

In this way, graph theory can serve as a key tool for analyzing music and for diagnosing problems that arise during practice.
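The text does not state what the nodes and edges of the music graph represent. As a hypothetical illustration only, the sketch below treats each distinct pitch as a node and each pair of consecutive notes as a directed edge, then computes the in-degrees, out-degrees, and the entropy H(G) defined above, taking deg(v) as the in-degree plus the out-degree and using the natural logarithm (the base is a convention).

```python
import math
from collections import Counter

def transition_graph(notes):
    """Directed multigraph of consecutive notes: degrees and degree entropy.

    Hypothetical construction: every distinct pitch is a node and each pair of
    consecutive notes (u, v) adds one directed edge u -> v.
    """
    edges = list(zip(notes[:-1], notes[1:]))
    m = len(edges)                                     # |E|
    deg_in = Counter(v for _, v in edges)
    deg_out = Counter(u for u, _ in edges)
    # Each edge adds one in-degree and one out-degree, so both sums equal |E|.
    assert sum(deg_in.values()) == sum(deg_out.values()) == m
    entropy = 0.0
    for node in set(notes):
        # p(v) = deg(v) / 2|E| with deg(v) = in-degree + out-degree
        p = (deg_in[node] + deg_out[node]) / (2 * m) if m else 0.0
        if p > 0:
            entropy -= p * math.log(p)
    return deg_in, deg_out, entropy
```

For the sequence C4, D4, C4, D4, both nodes have total degree 3 out of 2|E| = 6, so p = 1/2 for each and H(G) = ln 2.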

6. Discussion

In this work, we explored ways to connect the visual and auditory flows of music more smoothly through waveform analysis.

The sound was analyzed using mathematical techniques such as Short-Time Fourier Transform (STFT), Non-Negative Matrix Factorization (NMF), and Root Mean Square (RMS), and the analysis results reflecting the characteristics of various performers were presented. Analyzing sound in this way allows various applications such as emotional expression, structural analysis, understanding differences in musical instrument tones, and supporting music production.

The volume analysis compared the performers' overall loudness and dynamic range using RMS, LUFS, and levels in dB.

For velocity, the rate of change of the signal and differences in the performers' movements were evaluated with the first and second derivatives of the waveform. For touch, the intensity of the key strokes was estimated from the RMS value, and the expressiveness of the players was compared.

Finally, for the flow of music, structural changes in each piece were visualized as waveform graphs, which made differences in the performers' interpretations clearly visible.

7. Limitations and future work

7.1. Overcoming the difference between a soundproof room and a hall

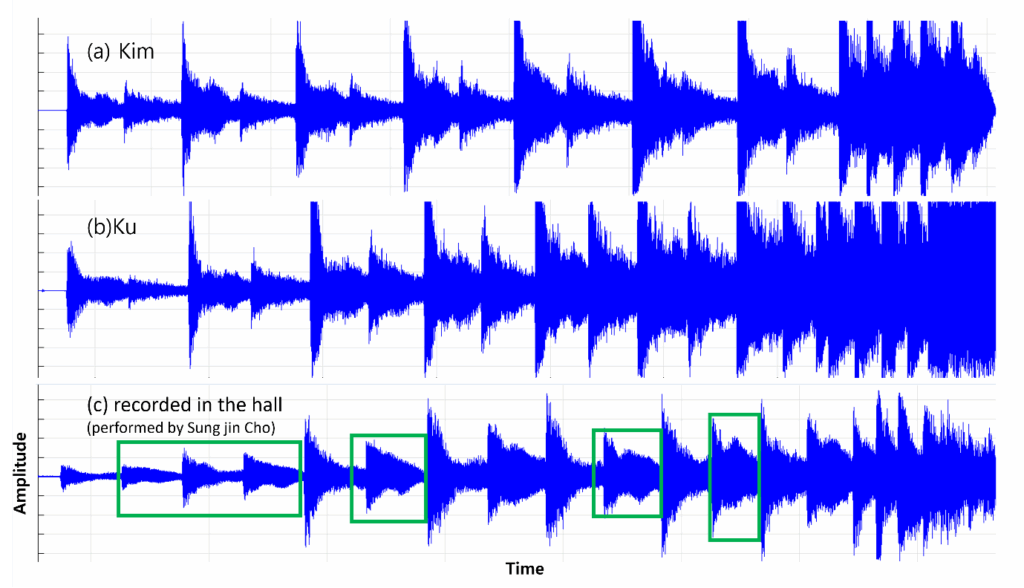

Figure 11 visualizes three performances of bars 1 to 9 of the introduction to the first movement of Rachmaninoff's Piano Concerto No. 2. Although the work is a concerto, the piano plays alone in the introduction without the orchestra, so waveforms recorded in the soundproof room and in the hall are easy to compare.

Figures 11(a) and (b) were recorded on the same piano in the same soundproof room, whereas Fig. 11(c) was recorded in a large concert hall.

In the green box, the up-and-down (positive/negative) symmetry of the waveform is clearly broken in Fig. 11(c), recorded in the hall; we attribute this irregularity to the reverberation (echo) of the hall.
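The up-and-down symmetry described here can also be put into numbers. As an illustrative sketch (these metrics are our assumption, not the paper's method), the ratio of the largest positive to the largest negative excursion and the skewness of the sample values can be compared across recordings: for a symmetric waveform the ratio is close to 1 and the skewness close to 0, and both drift away as the symmetry breaks. The function assumes the signal contains negative samples.

```python
import numpy as np

def symmetry_metrics(x):
    """Peak ratio and skewness as simple indicators of up-and-down symmetry.

    Assumes `x` contains both positive and negative samples.
    """
    x = np.asarray(x, dtype=float)
    peak_ratio = np.max(x) / np.abs(np.min(x))   # 1.0 for a symmetric waveform
    centered = x - np.mean(x)
    # Sample skewness: 0 when positive and negative excursions mirror each other
    skew = np.mean(centered ** 3) / np.mean(centered ** 2) ** 1.5
    return peak_ratio, skew
```

Computing these metrics on the boxed segment of each recording would give a numerical counterpart to the visual comparison of Fig. 11(a) to (c).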

7.2. Improved visual-audible connection smoothness

Music waveforms naturally have irregular, sharp shapes. To visualize them seamlessly, the signals need to be smoothed.

A low-pass filter can be used to smooth the signal: it attenuates high-frequency components (noise) and softens the waveform. The simplest example is the N-point moving average:

$$y[n] = \frac{1}{N} \sum_{k=0}^{N-1} x[n-k]$$
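The moving-average formula above can be implemented directly as a convolution with a kernel of N values equal to 1/N. This is a minimal NumPy sketch, assuming samples before the start of the signal are zero; the function name is illustrative.

```python
import numpy as np

def moving_average(x, window):
    """Causal moving average: y[n] = (1/N) * sum_{k=0}^{N-1} x[n-k], with N = window.

    Samples before the start of the signal are treated as zero.
    """
    x = np.asarray(x, dtype=float)
    kernel = np.ones(window) / window
    return np.convolve(x, kernel)[: len(x)]   # causal part, same length as x
```

A larger window smooths more but also blurs fast events such as the piano's note attacks, so the window length is a trade-off between smoothness and temporal detail.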

In addition, spline interpolation creates curves that connect the data points seamlessly, enabling a smoother waveform representation.
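Spline interpolation can be illustrated with a natural cubic spline, which passes exactly through every data point and has continuous first and second derivatives. The sketch below implements it with NumPy only (SciPy's `scipy.interpolate.CubicSpline` provides the same functionality); the function name and the natural end conditions (zero second derivative at both ends) are assumptions, since the text does not specify a spline type.

```python
import numpy as np

def natural_cubic_spline(xk, yk, x_new):
    """Evaluate a natural cubic spline through knots (xk, yk) at points x_new.

    Assumes at least two knots with strictly increasing xk.
    """
    xk = np.asarray(xk, dtype=float)
    yk = np.asarray(yk, dtype=float)
    x_new = np.asarray(x_new, dtype=float)
    n = len(xk) - 1
    h = np.diff(xk)

    # Solve for the second derivatives M at the knots (natural: M[0] = M[n] = 0).
    A = np.zeros((n + 1, n + 1))
    rhs = np.zeros(n + 1)
    A[0, 0] = A[n, n] = 1.0
    for i in range(1, n):
        A[i, i - 1] = h[i - 1]
        A[i, i] = 2 * (h[i - 1] + h[i])
        A[i, i + 1] = h[i]
        rhs[i] = 6 * ((yk[i + 1] - yk[i]) / h[i] - (yk[i] - yk[i - 1]) / h[i - 1])
    M = np.linalg.solve(A, rhs)

    # Find the interval of each evaluation point and evaluate its cubic piece.
    idx = np.clip(np.searchsorted(xk, x_new) - 1, 0, n - 1)
    h_i = xk[idx + 1] - xk[idx]
    t0 = xk[idx + 1] - x_new
    t1 = x_new - xk[idx]
    return (M[idx] * t0 ** 3 / (6 * h_i) + M[idx + 1] * t1 ** 3 / (6 * h_i)
            + (yk[idx] / h_i - M[idx] * h_i / 6) * t0
            + (yk[idx + 1] / h_i - M[idx + 1] * h_i / 6) * t1)
```

Evaluating the spline on a dense grid between coarse waveform or envelope points yields the smooth curve used for display.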

Visual synchronization can also be enhanced by reinforcing visual effects that align with auditory perception, emphasizing features such as the music's rhythm, tempo, and range. For example, the music's rhythm could be extracted with a beat detection algorithm to drive a visual “beat”, or colors could be changed according to frequency band or amplitude.
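Full beat tracking is beyond a short example, but the idea of turning audio into visual trigger points can be sketched with a simple energy-based onset detector. This is a deliberately simplified stand-in, not a beat detection algorithm from the paper: it marks frames whose short-time energy rises by more than a hypothetical factor `ratio` over the previous frame. The frame sizes and threshold are illustrative, and the signal must span at least two frames.

```python
import numpy as np

def energy_onsets(x, fs, frame_len=1024, hop=512, ratio=4.0):
    """Times (s) of frames whose energy jumps by more than `ratio` over the previous frame.

    Simplified onset detector; requires at least two frames of signal.
    """
    x = np.asarray(x, dtype=float)
    starts = np.arange(0, len(x) - frame_len + 1, hop)
    energy = np.array([np.sum(x[s:s + frame_len] ** 2) for s in starts]) + 1e-12
    rising = energy[1:] / energy[:-1] > ratio
    # Keep only the first frame of each run of rising frames.
    first = rising & ~np.concatenate(([False], rising[:-1]))
    return starts[1:][first] / fs
```

Each returned time could, for example, trigger a visual pulse or a color change in the display.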

Conflict of Interest

The authors declare no conflict of interest.


- D. Arfib, “Digital synthesis of complex spectra by means of multiplication of nonlinear distorted sine waves,” 1979.

- B. Bank, “Nonlinear Interaction in the Digital Waveguide With the Application to Piano Sound Synthesis,” in ICMC, 2000.

- B. Bank, V. Välimäki, “Robust loss filter design for digital waveguide synthesis of string tones,” IEEE Signal Processing Letters, vol. 10, no. 1, pp. 18–20, 2003, doi:10.1109/LSP.2002.806707.

- J. Bensa, S. Bilbao, R. Kronland-Martinet, J.O. Smith III, “The simulation of piano string vibration: From physical models to finite difference schemes and digital waveguides,” The Journal of the Acoustical Society of America, vol. 114, no. 2, pp. 1095–1107, 2003, doi:10.1121/1.1587146.

- J. Berthaut, M.N. Ichchou, L. Jézéquel, “Piano soundboard: structural behavior, numerical and experimental study in the modal range,” Applied Acoustics, vol. 64, no. 11, pp. 1113–1136, 2003, doi:10.1016/S0003-682X(03)00065-3.

- G. Borin, G. De Poli, D. Rocchesso, “Elimination of delay-free loops in discrete-time models of nonlinear acoustic systems,” IEEE Transactions on Speech and Audio Processing, vol. 8, no. 5, pp. 597–605, 2000, doi:10.1109/89.861380.

- C. Cadoz, A. Luciani, J. Florens, C. Roads, F. Chadabe, “Responsive Input Devices and Sound Synthesis by Stimulation of Instrumental Mechanisms: The Cordis System,” Computer Music Journal, vol. 8, no. 3, pp. 60–73, 1984, doi:10.2307/3679813.

- A. Chaigne, A. Askenfelt, “Numerical simulations of piano strings. I. A physical model for a struck string using finite difference methods,” The Journal of the Acoustical Society of America, vol. 95, no. 2, pp. 1112–1118, 1994, doi:10.1121/1.408459.

- A. Chaigne, A. Askenfelt, “Numerical simulations of piano strings. II. Comparisons with measurements and systematic exploration of some hammer‐string parameters,” The Journal of the Acoustical Society of America, vol. 95, no. 3, pp. 1631–1640, 1994, doi:10.1121/1.408549.

- J.M. Chowning, “The synthesis of complex audio spectra by means of frequency modulation,” Journal of the Audio Engineering Society, vol. 21, no. 7, pp. 526–534, 1973.

- H.A. Conklin Jr., “Piano design factors—Their influence on tone and acoustical performance,” The Journal of the Acoustical Society of America, vol. 81, no. S1, pp. S60–S60, 2005, doi:10.1121/1.2024314.

- A. Fettweis, “Wave digital filters: Theory and practice,” Proceedings of the IEEE, vol. 74, no. 2, pp. 270–327, 1986, doi:10.1109/PROC.1986.13458.

- J.L. Flanagan, R.M. Golden, “Phase Vocoder,” Bell System Technical Journal, vol. 45, no. 9, pp. 1493–1509, 1966, doi:10.1002/j.1538-7305.1966.tb01706.x.

- H. Fletcher, E.D. Blackham, R. Stratton, “Quality of Piano Tones,” The Journal of the Acoustical Society of America, vol. 34, no. 6, pp. 749–761, 1962, doi:10.1121/1.1918192.

- K. Karplus, A. Strong, “Digital Synthesis of Plucked-String and Drum Timbres,” Computer Music Journal, vol. 7, no. 2, pp. 43–55, 1983, doi:10.2307/3680062.

- J. Laroche, J.-L. Meillier, “Multichannel excitation/filter modeling of percussive sounds with application to the piano,” IEEE Transactions on Speech and Audio Processing, vol. 2, no. 2, pp. 329–344, 1994, doi:10.1109/89.279282.

- M. Le Brun, “Digital waveshaping synthesis,” Journal of the Audio Engineering Society, vol. 27, no. 4, pp. 250–266, 1979.

- T. Singh, M. Kumari, D.S. Gupta, “Rumor identification and diffusion impact analysis in real-time text stream using deep learning,” The Journal of Supercomputing, vol. 80, no. 6, pp. 7993–8037, 2024, doi:10.1007/s11227-023-05726-x.

- T. Singh, M. Kumari, D.S. Gupta, “Context-Based Persuasion Analysis of Sentiment Polarity Disambiguation in Social Media Text Streams,” New Generation Computing, vol. 42, no. 4, pp. 497–531, 2024, doi:10.1007/s00354-023-00238-x.

- T. Singh, M. Kumari, D.S. Gupta, “Real-time event detection and classification in social text steam using embedding,” Cluster Computing, vol. 25, no. 6, pp. 3799–3817, 2022, doi:10.1007/s10586-022-03610-6.

- H. Li, K. Chakraborty, S. Kanemitsu, “Music as Mathematics of Senses,” Advances in Pure Mathematics, vol. 08, no. 12, pp. 845–862, 2018, doi:10.4236/apm.2018.812052.

- E. Jekal, J. Ku, H. Park, “Neural Networks and Digital Arts: Some Reflections,” Journal of Engineering Research and Sciences, vol. 1, no. 1, pp. 10–18, 2022, doi:10.55708/js0101002.