Surface Defect Detection using Convolutional Neural Network Model Architecture

Journal of Engineering Research and Sciences, Volume 1, Issue 5, Page # 134-144, 2022; DOI: 10.55708/js0105014

Keywords: Quality Assurance, Industry 4.0, Deep Neural Network, Quality inspection, Machine Vision, Convolutional Neural Network

(This article belongs to the Section Artificial Intelligence – Computer Science (AIC))



Shaikh, S. , Hujare, D. and Yadav, S. (2022). Surface Defect Detection using Convolutional Neural Network Model Architecture. Journal of Engineering Research and Sciences, 1(5), 134–144. https://doi.org/10.55708/js0105014

Sohail Shaikh, Deepak Hujare and Shrikant Yadav. "Surface Defect Detection using Convolutional Neural Network Model Architecture." Journal of Engineering Research and Sciences 1, no. 5 (May 2022): 134–144. https://doi.org/10.55708/js0105014

S. Shaikh, D. Hujare and S. Yadav, "Surface Defect Detection using Convolutional Neural Network Model Architecture," Journal of Engineering Research and Sciences, vol. 1, no. 5, pp. 134–144, May 2022, doi: 10.55708/js0105014.

In a technology-driven, volatile environment with enormous consumer demand, this study investigates advances in quality assurance in the era of Industry 4.0. To improve production efficiency, rapid and robust automated visual quality inspection is being adopted quickly in product quality control. A deep neural network architecture is built for a real-world industrial case study to achieve automatic, image-based quality inspection that replaces manual inspection, and its capacity to detect quality defects is analysed with the aim of minimising errors. The primary goal is to understand the developments in quality inspection and their implications for cost, time, flexibility, and the model's achievable accuracy and precision compared with manual inspection. As an innovative technology, machine vision inspection offers reliable and rapid inspection and helps producers improve inspection efficiency. The research provides a deep learning-based method for extended target recognition that uses visual data acquired in real time for neural network training, validation, and prediction. The data provided by the machine vision setup is used to evaluate error patterns and enable prompt quality inspection to achieve defect-free products. The proposed model uses all data provided by the integrated technologies to find trends and recommend corrective measures to assure final product quality. Accordingly, this study focuses on developing a highly accurate and precise deep convolutional neural network (CNN) model architecture for defect identification and proposes a machine vision inspection setup.

1. Introduction

In recent years, the industrial sector has undergone a digital transformation of the traditional production process. This new paradigm is driving the world towards the fourth industrial revolution, Industry 4.0, which emerged to meet the growing demand for a wide variety of products with better final quality.

Quality control is the procedure in manufacturing that guarantees clients receive products that are free of faults and meet their requirements. To this end, most industries manually inspect the final product before delivery using an acceptable quality limit chart (AQL chart). First, a quality inspector selects random samples from a lot for inspection. Then, based on the AQL chart level, the entire lot is either accepted or rejected for dispatch. However, this method can pass defective goods that were not selected as samples, and it suffers from human error and slow inspection speed. Such escapes can also harm a company's reputation [1]. To counter these drawbacks, intelligent machines, storage technologies, and production facilities that autonomously share information, initiate operations, and control each other can be adopted [2].
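The sampling drawback can be made concrete with a minimal sketch. It assumes a single-sampling plan: draw `sample_size` items at random from the lot and accept the lot if at most `acceptance_number` of them are defective. The plan parameters below are hypothetical illustrations, not values read from an actual AQL chart. The hypergeometric distribution gives the probability that a lot containing a given number of defectives is accepted, and every unsampled defective in an accepted lot is shipped.

```python
from math import comb


def acceptance_probability(lot_size: int, defectives: int,
                           sample_size: int, acceptance_number: int) -> float:
    """Probability that a single-sampling plan accepts a lot.

    The lot is accepted when a random sample (drawn without replacement)
    contains at most `acceptance_number` defective items, which follows
    a hypergeometric distribution.
    """
    total_ways = comb(lot_size, sample_size)
    accepted_ways = 0
    for k in range(min(acceptance_number, sample_size) + 1):
        # k defectives in the sample, the rest drawn from the good items;
        # math.comb returns 0 when k exceeds the number of items available
        accepted_ways += comb(defectives, k) * comb(lot_size - defectives, sample_size - k)
    return accepted_ways / total_ways


# Hypothetical plan: lot of 1000, sample of 80, accept with at most 2 defects.
for d in (5, 20, 50):
    p = acceptance_probability(1000, d, 80, 2)
    print(f"{d} defectives in lot -> accepted with probability {p:.2f}")
```

For lots with only a few defectives the acceptance probability is close to one, so those defects reach the client; 100% automated inspection removes this dependence on sampling luck.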

Furthermore, continuous learning can enable machines to act independently, make decisions, and improve automatically; this can be achieved with machine vision, a subfield of artificial intelligence [3]. Object connectivity is the first stage, since it collects large amounts of data, increases productivity, improves machine health, improves production line automation, and leads to defect-free manufacturing through effective data exploitation and real-time analysis [4]. Moreover, it allows 100% of products to be inspected [3].

This paper contributes to the paradigm by proposing a machine vision architecture model based on the deep Convolutional Neural Network (CNN) presented in [3]. In this study, the CNN model architecture was developed with early stopping. Once the model's accuracy stops improving from epoch to epoch, continued training is likely to overfit: training accuracy keeps rising, but the overfitted model then fails in the prediction phase. To overcome this drawback, a checkpoint stores the model output after each early-stopped run, and this checkpoint is used as the starting point for the next run of the same model architecture. The technique both detects faulty products and continuously improves industrial processes by anticipating the process variables needed to produce defect-free products [3]. The model architecture is intended to meet Industry 4.0 objectives by exploiting and analysing the data produced by a connected supply chain to achieve faster, more flexible, and more effective processes that deliver first-rate goods at low cost [3], [4].
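The early-stopping and checkpoint scheme described above can be sketched without any deep learning framework. This is a minimal sketch, assuming validation accuracy is the monitored score and a `patience` of three epochs (both assumptions; the paper does not state them). The model state is a stand-in dictionary, and `EarlyStoppingCheckpoint` is a hypothetical name. Frameworks such as Keras offer the same behaviour through their `EarlyStopping` and `ModelCheckpoint` callbacks.

```python
import copy


class EarlyStoppingCheckpoint:
    """Stop training when the validation score stops improving and keep
    a copy (checkpoint) of the best model state seen so far."""

    def __init__(self, patience: int = 3, min_delta: float = 0.0):
        self.patience = patience      # epochs to wait without improvement
        self.min_delta = min_delta    # minimum gain that counts as improvement
        self.best_score = None
        self.best_state = None
        self.epochs_without_gain = 0

    def update(self, val_score: float, model_state) -> bool:
        """Record one epoch; return True when training should stop."""
        if self.best_score is None or val_score > self.best_score + self.min_delta:
            self.best_score = val_score
            self.best_state = copy.deepcopy(model_state)  # save the checkpoint
            self.epochs_without_gain = 0
            return False
        self.epochs_without_gain += 1
        return self.epochs_without_gain >= self.patience


# Simulated validation accuracies for one run (hypothetical numbers).
val_accuracy = [0.80, 0.85, 0.86, 0.86, 0.855, 0.86, 0.87]
stopper = EarlyStoppingCheckpoint(patience=3)
for epoch, score in enumerate(val_accuracy):
    if stopper.update(score, {"epoch": epoch}):
        print(f"stopped at epoch {epoch}; best checkpoint: {stopper.best_state}")
        break
# The next run would start from stopper.best_state instead of from scratch.
```

Here training stops at epoch 5 because three epochs passed without beating the accuracy reached at epoch 2, and the epoch-2 state is the checkpoint carried into the next run.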

This research builds a neural network model and discusses a machine vision inspection setup that applies the model to detect surface flaws in an impeller dataset, speeding up the inspection of finished products with improved accuracy in real time. In this way, the time-consuming manual inspection procedure in the industrial sector can be replaced by artificial intelligence.

The rest of the paper is organised as follows. Section 2 discusses the current state of the field and the relevant literature. Section 3 gives an overview of machine vision and the implementation model. Section 4 describes the dataset used in the proposed model. Section 5 describes the proposed model in depth. Section 6 describes the virtual setup for the proposed model. Finally, Section 7 discusses the results of the analysis.

2. Related Works

Several studies have used data extracted or mined from the various integrated automation systems in today's manufacturing sector, together with a range of machine learning methodologies, to develop intelligent machine vision systems for industrial quality inspection. Notable applications of machine vision architectures in various fields are reviewed below.

2.1. Machine Learning Technique for Inspection Purpose

In [5], the authors investigated current machine learning techniques and their applicability to production assembly lines. They discussed the diversity of production assembly lines, the difficulties of acquiring data and applying the methods, the challenges encountered during modelling, and the mathematical and computational approaches regularly used to optimise assembly-line operations. According to the authors, machine learning has been applied in numerous manufacturing fields to improve Overall Equipment Effectiveness (OEE), which combines indicators of the availability, performance, and quality of a setup [5]. Two significant research directions have emerged in recent years for which machine learning is effective: quality control and fault diagnosis. The authors also found that unsupervised learning is far outweighed by supervised learning, because most problems in production assembly lines are regression and classification problems [5]. The review also shows that data is plentiful in production assembly lines, so supervised learning can be leveraged to produce more accurate outcomes. The most typical tasks for quality prediction are regression and classification, and ANNs are the most commonly employed algorithm [5]. The success of neural networks in production assembly lines is presumably due to two factors. First, production assembly lines are complicated systems, and neural networks can handle the sophisticated interactions between the features and the dependent variable. Second, production assembly lines produce massive amounts of data that neural networks can use and handle easily, and larger datasets improve the performance of neural networks. Imbalanced datasets, however, pose a complex problem in production processes: most data points fall into one of two categories, with only a few falling into the other. Because the data rarely represent these unusual conditions, specific methods are needed to balance the datasets [5].
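OEE is the product of its three indicators. The sketch below uses the standard definitions: availability = run time / planned production time, performance = (ideal cycle time × total count) / run time, and quality = good count / total count. The shift figures are hypothetical and only illustrate the arithmetic.

```python
def oee(planned_time_min: float, run_time_min: float, ideal_cycle_time_min: float,
        total_count: int, good_count: int) -> dict:
    """Overall Equipment Effectiveness = availability x performance x quality."""
    availability = run_time_min / planned_time_min                   # share of planned time running
    performance = ideal_cycle_time_min * total_count / run_time_min  # speed versus ideal cycle time
    quality = good_count / total_count                               # share of good (defect-free) parts
    return {
        "availability": availability,
        "performance": performance,
        "quality": quality,
        "oee": availability * performance * quality,
    }


# Hypothetical shift: 480 min planned, 400 min running, 0.5 min ideal cycle,
# 700 parts produced, of which 665 are good.
result = oee(480, 400, 0.5, 700, 665)
print({name: round(value, 3) for name, value in result.items()})
```

Because quality enters as a multiplicative factor, every defective part that is caught and removed (or prevented) raises OEE directly, which is why quality control is a natural target for machine learning.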

The authors of [6] investigated deep neural networks for automatic, image-based quality assessment to overcome the drawbacks of manual quality control in the paper bag industry. They describe the development and testing of the deep learning technique in real-world industrial production and its capacity to detect quality problems [6]. A Faster Region-based CNN (Faster R-CNN) architecture was used in this work. According to [6], Faster R-CNN extends the CNN architecture by eliminating image warping and replacing it with spatial pyramid pooling. In addition, the significantly lower resolution of the feature map reduces the required computation, making the method faster and less resource-intensive. To develop the model, the data were divided into two sets, training and testing, and the test data were used to determine whether the model predicts the outcome correctly.
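Why pooling can replace warping is easiest to see in code. The following is a minimal sketch of spatial pyramid pooling, not the implementation used in [6]. It assumes a channels-first NumPy feature map and pyramid levels of 1 × 1, 2 × 2 and 4 × 4 bins (both assumptions). Feature maps of different spatial sizes are max-pooled into vectors of the same length, so input regions no longer need to be warped to a fixed size.

```python
import numpy as np


def spatial_pyramid_pool(feature_map: np.ndarray, levels=(1, 2, 4)) -> np.ndarray:
    """Max-pool a (channels, height, width) feature map over an n x n grid for
    each pyramid level and concatenate the results into one fixed-length vector."""
    channels, height, width = feature_map.shape
    pooled = []
    for n in levels:
        # Bin edges covering the whole map, even when height/width are not divisible by n
        h_edges = np.linspace(0, height, n + 1).astype(int)
        w_edges = np.linspace(0, width, n + 1).astype(int)
        for i in range(n):
            for j in range(n):
                h0, w0 = h_edges[i], w_edges[j]
                h1 = max(h_edges[i + 1], h0 + 1)  # keep every bin non-empty
                w1 = max(w_edges[j + 1], w0 + 1)
                pooled.append(feature_map[:, h0:h1, w0:w1].max(axis=(1, 2)))
    return np.concatenate(pooled)


rng = np.random.default_rng(0)
small = spatial_pyramid_pool(rng.random((8, 13, 17)))
large = spatial_pyramid_pool(rng.random((8, 40, 25)))
print(small.shape, large.shape)  # both 8 * (1 + 4 + 16) = 168 values long
```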

The work in [7] observed that traditional weaving-mill inspection relies on human visual examination, which detects only 60–70 per cent of all fabric faults. Defective fabric was typically discarded as waste, recycled, or sold at a discount (generally 45–65 per cent below the price of defect-free fabric). In [7], the authors provided a convenient automatic fault detection system that replaces traditional manual fabric examination with computer vision technology, which analyses fabrics systematically and ensures stable performance; the various categories of fabric fault are highlighted with a visual representation of each defect type. The authors also describe the theoretical basis of principal component analysis (PCA) and then discuss the principles of soft computing (particularly ANNs) as a decision-making tool for data classification [7]. Finally, these ideas were used to evaluate actual samples, including sample preparation and the digital imaging setup. An ANN model was used in the study; different network architectures have significantly different computational properties, and feed-forward and recurrent networks are the two most important structures to distinguish. The number of hidden layers and units per layer is critical in constructing an ANN. A network that is too small may not be able to represent the required function, whereas a network that is too large risks overfitting: with so many weights, it can memorise all the training cases and then fail to generalise to new inputs. In multilayer networks, each input is connected to several outputs via several weights [7]. These weights are trained with back-propagation, which splits the gradient computation among the units, determines the change to each weight, and thereby reduces the error between each target and the network's predicted output [7].
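Back-propagation, as described above, can be illustrated on a one-hidden-layer network. This is a minimal sketch with NumPy, assuming sigmoid units, a mean-squared-error loss and full-batch gradient descent (all assumptions; [7] does not specify them). The XOR task is used because a network with no hidden layer cannot represent it, which echoes the point that a network that is too small may not represent the required function.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def train_mlp(x, y, hidden=4, lr=0.5, epochs=2000, seed=0):
    """Train a one-hidden-layer network with back-propagation and
    full-batch gradient descent on the mean squared error."""
    rng = np.random.default_rng(seed)
    w1 = rng.normal(0.0, 1.0, (x.shape[1], hidden))
    b1 = np.zeros(hidden)
    w2 = rng.normal(0.0, 1.0, (hidden, 1))
    b2 = np.zeros(1)
    losses = []
    for _ in range(epochs):
        # Forward pass
        h = sigmoid(x @ w1 + b1)
        out = sigmoid(h @ w2 + b2)
        err = out - y
        losses.append(float(np.mean(err ** 2)))
        # Backward pass: each layer's error signal is computed from the layer
        # after it (chain rule); the constant factor 2 is folded into lr
        delta_out = err * out * (1.0 - out)
        delta_h = (delta_out @ w2.T) * h * (1.0 - h)
        # Weight updates that reduce the error between targets and outputs
        w2 -= lr * h.T @ delta_out / len(x)
        b2 -= lr * delta_out.mean(axis=0)
        w1 -= lr * x.T @ delta_h / len(x)
        b1 -= lr * delta_h.mean(axis=0)
    return losses


x = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y = np.array([[0], [1], [1], [0]], dtype=float)
losses = train_mlp(x, y)
print(f"MSE: first epoch {losses[0]:.3f}, last epoch {losses[-1]:.3f}")
```

The `hidden` parameter is the "units per layer" choice discussed above: too few units limit what the network can represent, while many more than the data warrant make memorisation easier.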

To improve part quality in the metal Powder Bed Fusion (PBF) process, the authors of [8] propose a novel Machine Learning (ML) methodology for building feedback loops across the entire metal PBF process. Industrial acceptance of metal PBF has been considerably limited by inconsistent part quality caused by incorrect product design, non-optimal process design, and deficient process management [8]. The paper presents a categorisation of metal PBF feedback loops, including a generic structure and the critical data to be identified, and discusses the opportunities and problems in building the feedback loops [8]. The metal PBF procedure is divided into six stages, the last of which is product quality measurement. A fishbone diagram for each stage shows the vital information that can be examined, managed, or quantified in each feedback loop stage. The process parameters were controllable and were varied during the research. In addition, high-performance supervised ML models capable of handling image data were developed for real-time processing to support the feedback loop [8]. Finally, the measured product characteristics are fed back into the product development, process planning, and online process control phases to ensure process stability and final product quality. Applying machine learning to the metal PBF process enables efficient and effective decision-making at each stage and therefore has significant potential to reduce the number of tests required, saving time and money in metal PBF manufacture [8].

2.2. Machine Learning Techniques, Model Architecture and Important Factors

The literature above applied machine learning approaches to production assembly lines, the paper bag industry, traditional weaving mills, and the metal Powder Bed Fusion process to improve production quality, using image processing to determine product quality during the process. Supervised learning and deep learning are the most common machine learning techniques across these fields. Supervised learning depends heavily on human intervention: it requires more data processing for feature selection and more parameter tuning to set up the algorithm well [5]. Deep learning is a machine learning technique that learns patterns using neural networks and can be supervised, semi-supervised, or unsupervised. Deep learning architectures have been used in various fields and are preferred in image processing, where they produce results comparable to traditional approaches [6].

The model architecture, comprising the multiple layers of a machine learning technique, defines the vital process of converting raw data into training data that assist the system's decision-making. An Artificial Neural Network (ANN) is a commonly employed model architecture that is complex to build because of the choice of hidden layers and units per layer [5], and it risks overfitting the data because of the weights in the model. The error between the model's predicted output and each target can be reduced using back-propagation, in which each input is connected to different outputs via various weights in multilayer models [7]. Another technique used for image processing is the Convolutional Neural Network (CNN), or the Faster R-CNN architecture, which reduces computation time and resource requirements [6].

A key factor in the success of a model architecture is a large amount of balanced data with little noise. Unfortunately, noisy and imbalanced datasets make processing the data and predicting faulty products a complex problem [5].
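One common way to counter class imbalance without discarding data is to weight the training loss by class. A minimal sketch, assuming inverse-frequency weights n_samples / (n_classes × n_samples_in_class). This is the same heuristic as scikit-learn's `class_weight="balanced"` option, and it is shown here as one possible method rather than the one used in [5]. The label counts are hypothetical.

```python
from collections import Counter


def balanced_class_weights(labels):
    """Weight each class by n_samples / (n_classes * n_samples_in_class), so
    every class contributes equally to a weighted training loss."""
    counts = Counter(labels)
    n_samples, n_classes = len(labels), len(counts)
    return {cls: n_samples / (n_classes * n) for cls, n in counts.items()}


# Hypothetical, strongly imbalanced label set: 900 good parts, 100 defective.
labels = ["ok"] * 900 + ["def"] * 100
weights = balanced_class_weights(labels)
print(weights)  # the rare 'def' class receives the larger weight
```

With these weights, the 100 defective samples carry the same total weight as the 900 good ones, so the model cannot reach a low loss simply by predicting "ok" for everything.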

In this study, a CNN model architecture with early stopping is used for image processing. The input data were cleaned before being split. Most previous work divided the dataset into training and test sets [6], but the dataset in this study was divided into three sets: training, validation, and test. The model was designed to reduce the time required for training, validation, and prediction while achieving high accuracy and precision, and to remain flexible enough that the decision system can be extended to other product inputs to obtain defect-free products. These points were considered to overcome the problems and errors encountered in previous research.

3. Theoretical Background

Machines have long been used in manufacturing to carry out complex programmed operations. In the Industry 4.0 paradigm, machines are equipped with innovative networking technologies that make them more intelligent, adaptable, cooperative, and independent in decision-making [3]. They cooperate and even work safely alongside humans, and the enormous amount of data they generate is stored and later analysed. Automating labour processes directly affects a firm's productivity, especially through time and cost savings [9]. The paradigm proposed in this work, however, applies only once the product has been completely manufactured and is visually inspected under the vision setup [3]. This automated quality check can replace manual inspection of the final product, enabling manufacturers to meet the growing demand for higher-quality products efficiently. Such a system must acquire data in real time to evaluate the product, have a large storage capacity for the gathered data, and use a highly precise and accurate model architecture to predict product quality and support decision-making.

Artificial intelligence (AI) technology allows the system to operate smartly and replace human operators, establishing an automated quality inspection system [3]. AI is defined as "the science of teaching robots to perform tasks that would need intelligence if performed by humans" [10]. AI can be used to make decisions in Industry 4.0, and it promises to revolutionise the way quality checks are done by giving machines greater intelligence [3]. As a result, machines will be able to detect and rectify errors, resulting in defect-free final items [11]. Many industries, including finance, health, education, and transportation, have benefited from AI. Artificial neural networks can solve time-consuming, complex problems: they learn from patterns in the input data and use what they have learned to make future predictions.

Machine learning is one of the most widely used AI technologies in industry, and it is used for machine vision inspection. Machine learning has numerous advantages and improves system performance dramatically [3]. A machine vision inspection system is designed as a sequence of algorithms forming a deep neural network model that takes digital images and videos as input and identifies and classifies the objects they show. Because the neural network learns patterns, and the machine vision system is built on the neural network model, the system finds patterns in the images of the final product captured by the camera and checks whether each product meets the threshold accuracy.

Machine vision problems, for example, require substantial computing resources to process large amounts of data (e.g., images). Moreover, to implement the machine vision model, the components must connect and act autonomously without the participation of a human operator, which gives Industry 4.0 its distinctive nature. Implementing a machine vision-based automated system for detecting defective products therefore requires a careful blend of Internet of Things (IoT), cloud computing, automation, big data and analytics, and artificial intelligence (AI) technologies across the manufacturing chain [3].

The Internet of Things (IoT) is regarded as the backbone of automation. It is a network of interconnected devices with varying levels of intelligence (sensing and actuating, control, optimisation, and autonomy), all supported by specialised device technologies that transfer data and interact with the items [12].

Cloud computing meets the need for computing resources through the computational power and storage capacity it makes accessible, and it also allows practitioners in the image processing environment to collaborate [13]. Edge computing, by contrast, is a type of distributed computing that uses decentralised processing power. In its most basic form, edge computing processes data directly on the device that generates it or on a local server. Instead of transmitting data to the cloud or a data centre [13], the goal is to process data where it is created, at the edge of the network. Because data flows are processed locally in real time, edge computing reduces bandwidth use and processing latency. Edge computing is therefore critical for integrating product inspection systems into manufacturing lines, as these machine vision systems must interpret images recorded by the vision equipment in real time and respond quickly without disrupting manufacturing processes [3].

3.1. Confusion Matrix Terminology

A confusion matrix is a table that commonly illustrates how well a classification model performs on test data whose true values are known; it summarises the classification performance on that data. It is a two-dimensional matrix indexed by an object's actual class and its predicted class, as shown in Table 1 [3]. The two-class case, with one class designated positive and the other negative, is especially common. Its four cells are the true positives (TP), false positives (FP), true negatives (TN), and false negatives (FN), as shown in Table 1 [14].

Table 1: Confusion matrix Table [14]

| Actual \ Prediction | Positive | Negative |
|---|---|---|
| Positive | True Positive (TP) | False Negative (FN) |
| Negative | False Positive (FP) | True Negative (TN) |

Precision, accuracy, and recall are classification performance measures computed from the classification outcomes generated by the model architecture [3]. Precision measures the model's reliability: of all the products predicted positive, how many are actually positive. Recall measures how many of the actual positives the model captures by labelling them positive. Accuracy is the percentage of the model's predictions that are correct. In this application the positive class is the non-defective product, so a false positive is a defective product that is predicted as non-defective and dispatched to the market. The model must therefore minimise false positives, which means its precision must be high. Equations (1) and (2) give the precision and accuracy of the model as defined by Tajeddine Benbarrad [3].

$$\textit{Precision} = \frac{TP}{TP + FP} \quad\quad (1)$$

$$\textit{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \quad\quad (2)$$
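Equations (1) and (2), together with recall, TP / (TP + FN), can be computed directly from predicted and actual labels. A minimal sketch, assuming (as in the discussion above) that the positive class is the non-defective "ok" product. The label lists are hypothetical.

```python
def confusion_counts(actual, predicted, positive="ok"):
    """Count TP, FP, TN, FN for a two-class problem."""
    tp = fp = tn = fn = 0
    for a, p in zip(actual, predicted):
        if p == positive:
            if a == positive:
                tp += 1   # predicted ok, really ok
            else:
                fp += 1   # predicted ok, really defective: would be dispatched
        elif a == positive:
            fn += 1       # predicted defective, really ok: unnecessary rework
        else:
            tn += 1       # predicted defective, really defective
    return tp, fp, tn, fn


def precision(tp, fp):
    return tp / (tp + fp) if tp + fp else 0.0


def recall(tp, fn):
    return tp / (tp + fn) if tp + fn else 0.0


def accuracy(tp, tn, fp, fn):
    return (tp + tn) / (tp + tn + fp + fn)


actual = ["ok", "ok", "ok", "ok", "def", "def", "def", "def"]
predicted = ["ok", "ok", "ok", "def", "ok", "ok", "def", "def"]
tp, fp, tn, fn = confusion_counts(actual, predicted)
print(tp, fp, tn, fn)                                              # 3 2 2 1
print(precision(tp, fp), recall(tp, fn), accuracy(tp, tn, fp, fn))  # 0.6 0.75 0.625
```

In this example the two defective parts predicted as "ok" pull precision down to 0.6, even though accuracy is 0.625. This illustrates why a precision-oriented evaluation is stricter for this application.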

The CNN model architecture of Tajeddine Benbarrad [3] achieves an accuracy of 90% and a precision of 88%. However, that work was evaluated on the model's accuracy rather than on its precision, which is what this application requires, since defective products must be eliminated before they are dispatched. Therefore, a deep CNN model architecture is developed here and evaluated on the basis of model precision.

4. Dataset Processing

The dataset comprises images of cast products labelled ok (standard) or def (defective). A casting defect is an undesired irregularity produced by the metal casting process. There are many types of casting defect, such as blowholes, pinholes, burrs, shrinkage defects, mould material defects, pouring metal defects, and metallurgical defects.

Table 2: Dataset details

| Total number of images | Train: Ok | Train: Not Ok | Test: Ok | Test: Not Ok |
|---|---|---|---|---|
| 7348 | 3758 | 2875 | 453 | 262 |

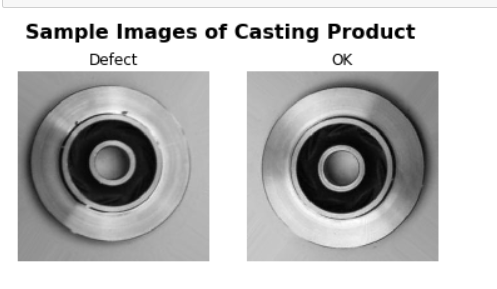

Table 2 gives details of the dataset used for modelling the architecture. All images are 300 × 300 pixel greyscale images. The dataset is already divided into two folders, one for training and one for testing. The images show a top view of a submersible pump impeller, as shown in figure 1.

This study uses the above data to verify whether the "manual inspection" bottleneck can be eliminated by automating the inspection process with a machine vision model in the manufacture of cast products [15].

Before modelling, several image processing methods were applied to clean the images and remove noise other than the impeller itself. After this processing, the training dataset was further split into training and validation datasets. The training dataset was used to train the model, and the validation dataset was used to evaluate the model architecture during development [16]. The test dataset was kept aside to test the trained model and show how it performs on entirely novel data. Good performance in the training and validation stages can still fail at the final test stage because of overfitting or underfitting; this is guarded against by splitting the training dataset in two and keeping the test dataset untouched until the final prediction run of the model.
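The three-way split can be sketched as follows. This is a minimal sketch, assuming a 20% validation fraction, a fixed random seed, and stratification by label (all assumptions; the paper does not give the split ratio). The file names are hypothetical, and only the training folder is passed in, so the test folder stays untouched until the final prediction run.

```python
import random
from collections import defaultdict


def split_train_validation(samples, val_fraction=0.2, seed=42):
    """Split (path, label) pairs into training and validation sets, keeping the
    class proportions of each label (stratified) and a fixed random seed so the
    split is reproducible. The test set is never passed to this function."""
    by_label = defaultdict(list)
    for path, label in samples:
        by_label[label].append((path, label))
    rng = random.Random(seed)
    train, val = [], []
    for label in sorted(by_label):
        items = list(by_label[label])
        rng.shuffle(items)
        n_val = round(len(items) * val_fraction)
        val.extend(items[:n_val])
        train.extend(items[n_val:])
    return train, val


# Hypothetical file names for the training folder only.
training_folder = ([(f"ok_{i}.jpeg", "ok") for i in range(100)]
                   + [(f"def_{i}.jpeg", "def") for i in range(50)])
train_set, val_set = split_train_validation(training_folder)
print(len(train_set), len(val_set))  # 120 30
```

Stratifying keeps the ok/def ratio the same in both subsets, so validation scores are not distorted by an accidental shortage of one class.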

5. CNN Model Architecture

Intelligent machine vision systems discover errors early in the manufacturing process and ensure that the product is of excellent quality before it is delivered to the client. When designing an ideal machine vision architecture for inspecting defective products, however, proper collaboration between the various technologies involved in the manufacturing chain must be considered. Furthermore, all data generated during the production process should be used to reinforce the system and to go beyond defect detection, discovering the causes of failures and improving product quality [3]. With the rapid growth of deep learning, convolutional neural networks (CNNs) have been recommended for automated inspection tasks and have demonstrated promising performance. A CNN can automatically extract essential features and evaluate them within the same network, with outcomes comparable to, if not better than, human performance [17]. Recent findings suggest that CNNs remain the most effective method for tackling image classification problems with high accuracy [18].

5.1. Model Processing

Images captured by the machine vision setup are represented as pixels, the smallest atomic elements of a digital image. Each pixel value ranges from 0 to 255; a colour image consists of three channels (red, green, and blue, RGB) superimposed on each other, whereas a greyscale image, such as those in this dataset, has a single channel. Rather than working directly on the raw pixel information of the captured image, which contains noise, features are extracted from the images to improve the efficiency of the model architecture. There are two ways to extract features: manual feature extraction and the modern CNN feature extraction technique. Manual feature extraction is less effective than the CNN technique. In CNN feature extraction, features are taken from the high-dimensional image and represented in a lower-dimensional form without distorting the information present in the original image.
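Before images reach the network, the 0–255 pixel values are typically rescaled. A minimal sketch with NumPy, assuming the network expects values in 0.0–1.0 (an assumption). The greyscale conversion uses the ITU-R BT.601 luma weights. It is needed only if a colour camera is used in deployment, since the impeller dataset is already greyscale.

```python
import numpy as np


def to_greyscale(rgb: np.ndarray) -> np.ndarray:
    """Collapse an (H, W, 3) RGB image with values 0-255 into one channel using
    the ITU-R BT.601 luma weights."""
    weights = np.array([0.299, 0.587, 0.114])
    return rgb[..., :3].astype(np.float64) @ weights


def normalise(image: np.ndarray) -> np.ndarray:
    """Rescale 8-bit pixel values from 0-255 to 0.0-1.0 for the network input."""
    return image.astype(np.float32) / 255.0


# A synthetic 300 x 300 colour frame: left half black, right half white.
rgb = np.zeros((300, 300, 3), dtype=np.uint8)
rgb[:, 150:] = 255
grey = normalise(to_greyscale(rgb))
print(grey.shape, grey.min(), grey.max())
```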

CNNs are a form of Artificial Neural Network (ANN) that work like other supervised learning methods: they take input images, detect their features, and train a classifier. Unlike conventional methods, however, CNNs learn the features automatically, performing all the arduous feature extraction and description work themselves.

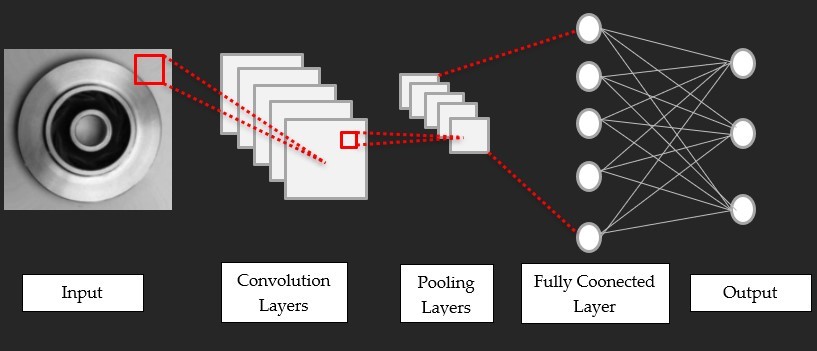

A CNN consists of four types of layer, as shown in figure 2. The most significant component of a CNN is the convolutional layer, which is always the first layer. Convolution is the process of adding each element of the image to its local neighbours, weighted by the kernel. The kernel, also known as the convolution matrix or mask, acts as a filter that extracts vital features from the captured image without distorting it. The purpose of the convolutional layer is to determine whether a set of features is present in the input images, which is accomplished through convolutional filtering [19].
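The convolution just described, in which each pixel's local neighbourhood is weighted by the kernel and summed, can be written in a few lines. This is a minimal NumPy sketch of a "valid" convolution with stride 1. It follows the CNN convention of not flipping the kernel (strictly, cross-correlation). The hand-made edge kernel is an illustrative assumption, whereas a trained CNN learns its kernel values.

```python
import numpy as np


def conv2d(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """'Valid' 2-D convolution with stride 1, as computed in CNN layers
    (no kernel flip, i.e. cross-correlation)."""
    kh, kw = kernel.shape
    out_h, out_w = image.shape[0] - kh + 1, image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            # each output value is the pixel's local neighbourhood weighted by the kernel
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out


# A 3 x 5 greyscale patch: dark on the left, bright on the right.
image = np.array([[0, 0, 255, 255, 255]] * 3)
vertical_edge = np.array([[-1, 0, 1]] * 3)  # responds to left-to-right brightness changes
response = conv2d(image, vertical_edge)
print(response.tolist())  # [[765.0, 765.0, 0.0]]
```

The filter responds strongly where the brightness changes and gives zero on the uniform region. This is how a kernel signals whether a feature such as an edge is present.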

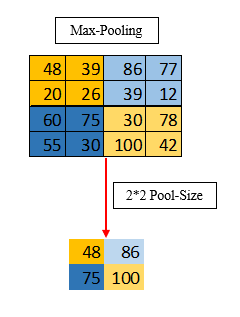

The pooling layer is usually sandwiched between two convolutional layers; it receives feature maps as input and applies the pooling function to them. Pooling reduces the size of the images while preserving their essential characteristics, and so reduces the number of parameters and calculations in the network, which improves performance and helps prevent overfitting [3]. A convolutional network can include local or global pooling layers, which combine the outputs of neuron clusters in one layer into a single neuron in the next layer. Pooling has no learnable parameters. The proposed model architecture uses max-pooling: for example, with a 2 × 2 window and a stride of 2, the maximum value in each window is kept and stored in the pooling layer for further processing, as shown in figure 3.
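Max-pooling with a 2 × 2 window and a stride of 2 can be sketched as below, assuming a single-channel NumPy feature map. The function has no weights, which matches the point that pooling has no learnable parameters.

```python
import numpy as np


def max_pool2d(feature_map: np.ndarray, window: int = 2, stride: int = 2) -> np.ndarray:
    """Keep the maximum of each window x window patch, moving by `stride`."""
    h, w = feature_map.shape
    out_h = (h - window) // stride + 1
    out_w = (w - window) // stride + 1
    out = np.empty((out_h, out_w), dtype=feature_map.dtype)
    for i in range(out_h):
        for j in range(out_w):
            r, c = i * stride, j * stride
            out[i, j] = feature_map[r:r + window, c:c + window].max()
    return out


fmap = np.array([[1, 3, 2, 1],
                 [4, 6, 5, 0],
                 [7, 2, 9, 8],
                 [1, 0, 3, 4]])
print(max_pool2d(fmap).tolist())  # [[6, 5], [7, 9]]
```

The 4 × 4 map shrinks to 2 × 2 (a quarter of the values), while the strongest response in each region is kept.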

A CNN is a stack of multiple convolution, pooling, and ReLU correction layers followed by fully connected layers. Each input image is filtered, shrunk, and rectified multiple times before being converted to a vector that the fully connected layers classify [3].
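The sequence of convolution, ReLU correction and pooling, followed by flattening and a fully connected layer, can be traced end to end. This is a minimal NumPy sketch of a single forward pass with untrained, randomly drawn weights. The kernel count, image size and sigmoid output are assumptions, and the output is not a meaningful prediction until the weights are trained. It uses `numpy.lib.stride_tricks.sliding_window_view` (NumPy 1.20 or later) to form the convolution windows.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def extract_features(image, kernels):
    """Convolution -> ReLU -> 2x2 max-pooling for each kernel, then flatten."""
    maps = []
    for k in kernels:
        conv = np.einsum("ijkl,kl->ij", sliding_window_view(image, k.shape), k)
        relu = np.maximum(conv, 0.0)                      # ReLU correction
        h, w = relu.shape[0] // 2 * 2, relu.shape[1] // 2 * 2
        pooled = relu[:h, :w].reshape(h // 2, 2, w // 2, 2).max(axis=(1, 3))
        maps.append(pooled)
    return np.concatenate([m.ravel() for m in maps])      # flatten to a vector


def predict_probability(image, kernels, dense_w, dense_b):
    """Fully connected layer with a sigmoid output on top of the features."""
    logit = extract_features(image, kernels) @ dense_w + dense_b
    return 1.0 / (1.0 + np.exp(-logit))


rng = np.random.default_rng(0)
image = rng.random((8, 8))                        # stand-in for a normalised image
kernels = [rng.normal(size=(3, 3)) for _ in range(2)]
features = extract_features(image, kernels)      # 2 maps x (3 x 3) = 18 values
dense_w = rng.normal(scale=0.01, size=features.size)
p = predict_probability(image, kernels, dense_w, 0.0)
print(features.shape, float(p))
```

The 8 × 8 input becomes two 6 × 6 feature maps after convolution and two 3 × 3 maps after pooling. These are flattened into 18 values, and the fully connected layer reduces them to a single score.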

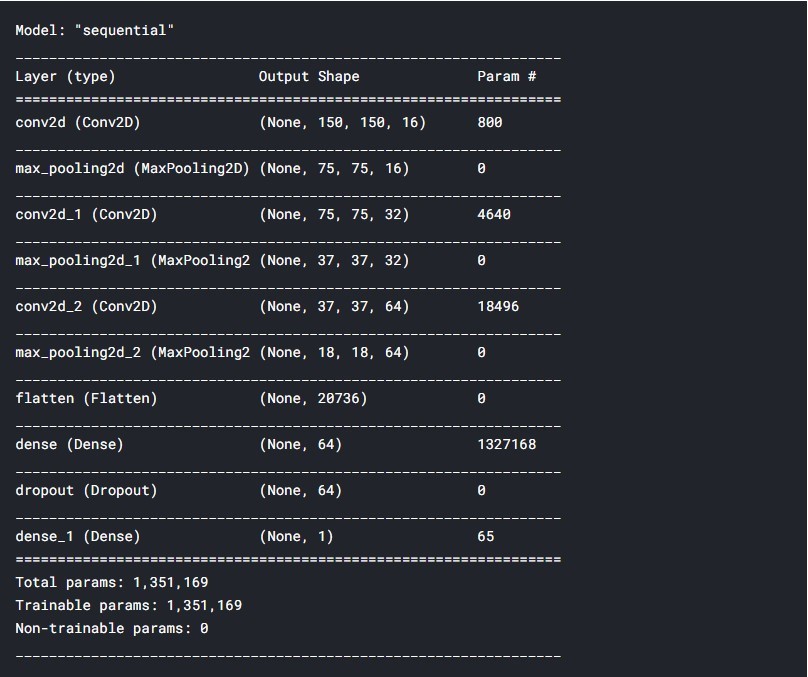

The CNN model is used as the base model in the suggested architecture. After each run, its output is saved and used as the starting point for retraining the same model, so that each run builds on the best result of the previous one. CNN models and frameworks can be retrained on a custom dataset for any use case, which provides more flexibility [3]. This is the idea behind transfer learning: the knowledge a neural network gains while solving one problem is applied to another. Transfer learning therefore speeds up network training while helping to prevent overfitting [17]. The model elements applied in the model architecture are listed in figure 4.
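Saving a run's output and reloading it as the starting point for the next run can be sketched with NumPy's `.npz` archives. This is a minimal sketch, assuming the weights are held as a dictionary of NumPy arrays (deep learning frameworks have their own checkpoint formats). The layer names and file name are hypothetical.

```python
import os
import tempfile

import numpy as np


def save_checkpoint(path, weights):
    """Store a dict of weight arrays in a single .npz file."""
    np.savez(path, **weights)


def load_checkpoint(path):
    """Load the weight arrays back into a dict."""
    with np.load(path) as data:
        return {name: data[name] for name in data.files}


rng = np.random.default_rng(0)
weights = {"conv1": rng.normal(size=(3, 3)), "dense": rng.normal(size=18)}  # hypothetical layers

with tempfile.TemporaryDirectory() as tmp:
    ckpt = os.path.join(tmp, "run1_best.npz")
    save_checkpoint(ckpt, weights)    # end of run 1: store the best weights
    resumed = load_checkpoint(ckpt)   # start of run 2: warm start from them
print(sorted(resumed))
```

Starting run 2 from `resumed` rather than from fresh random values lets training continue from the best state already found instead of repeating earlier epochs.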

The training component of the suggested architecture is hosted on a local server, i.e. edge computing. The main advantage of this option is that the machine learning system runs locally, without storing data on, or transferring data to, an external server. On the other hand, the local hardware must be capable of handling the surges in processing demand inherent to machine learning [20]. The drawback of local hardware is that there is no standard baseline configuration for it, and the requirements of such a system change as new information is gained. Local hardware is nevertheless used in this work because the study is concerned only with the impeller dataset. When a wide range of items must be evaluated in real time, however, cloud computing should be used, because its computational capacity provides much more freedom and therefore faster, higher-value computation. Cloud computing also strengthens the system by providing data sharing, excellent real-time accessibility, and data backup and restoration [3].

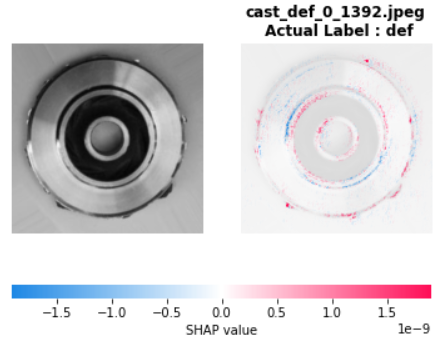

Figure 5 shows how the model interprets the images captured by the system. The left image in figure 5 shows a defective product, and the right image illustrates how the model interprets that image when classifying the product as defective or non-defective. Red marks regions that the model is confident are defective, blue marks non-defective regions, and uncoloured regions are those the model is confident are free of defects. From this evaluation the model obtains a confidence level and predicts whether the product is defective or non-defective.

6. Virtual setup

A decision support system (DSS) is a computerised information system that aids decision-making. In most DSSs the final decision is taken by a human, but in this study the model acts as the decision-maker and takes the corrective action [21].

For quality inspection, a machine vision setup can be deployed in two ways. First, it can be installed after each significant process to capture images during machining, so that any defective components can be removed during the manufacturing process.

Second, it can be installed after all processes are complete, capturing images of the product before packing, so that any defective components are removed before packing.

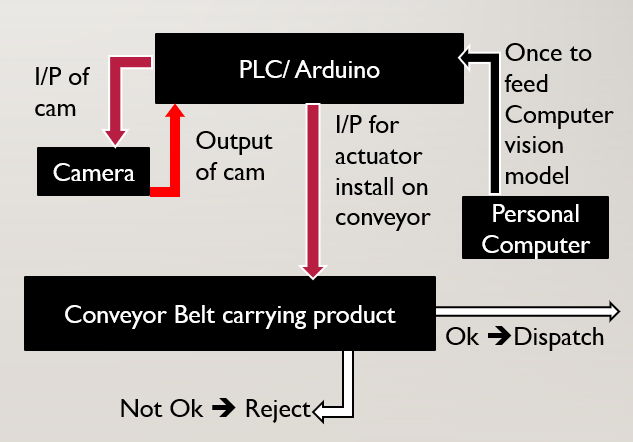

This study focuses on the second setup. The primary reason is that it is simpler and requires less capital than the first. The main devices required are a camera, a PLC/Arduino and a personal computer, as shown in figure 6.

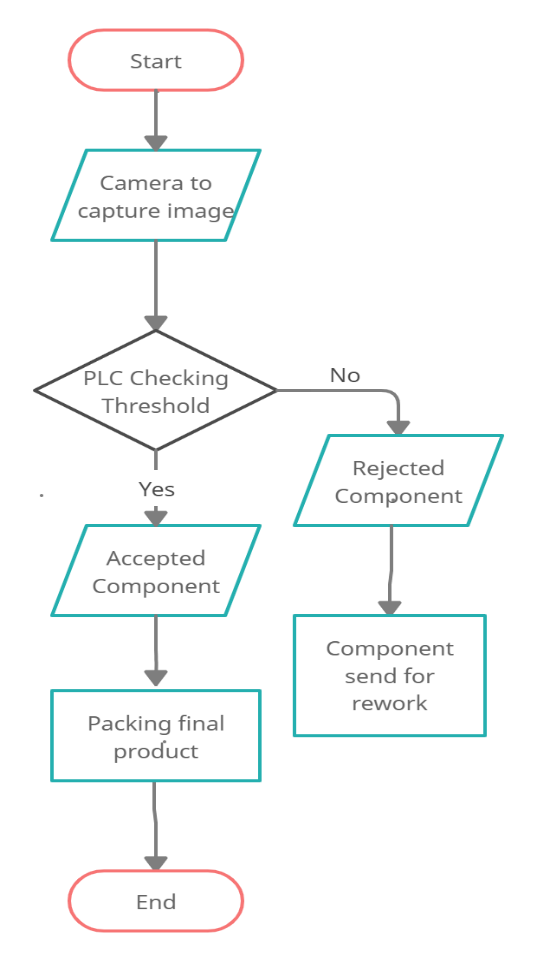

6.1. Workflow

The machine vision system's main role in inspection begins once all machining processes are complete and a batch of components is sent for packing. As each component moves along a conveyor, the camera captures an image and transmits it as input to the PLC. The PLC processes the image and decides whether to accept or reject the component, based on the threshold set by the model architecture installed on it. If the model's predicted probability that the component is OK is above the acceptance threshold, the component is sent for packing; if it is below the threshold, the component is rejected and sent for rework. To reject a component, the PLC sends a command to the actuator to remove it. This check is repeated for every component in a loop, as shown in figure 7.
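The accept/reject loop can be sketched as follows. It is a hedged illustration rather than PLC code: it assumes the model returns a probability that the component is OK, treats a score exactly equal to the threshold as an accept (the text only specifies "above" and "below"), and models the actuator as a callback. `inspect_batch`, `Decision` and the sample scores are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List, Tuple


@dataclass
class Decision:
    component_id: str
    ok_probability: float
    action: str  # "pack" or "rework"


def inspect_batch(
    components: Iterable[Tuple[str, object]],
    predict_ok: Callable[[object], float],
    threshold: float,
    reject: Callable[[str], None],
) -> List[Decision]:
    """Route each component to packing or rework based on the model score."""
    decisions = []
    for component_id, image in components:
        p_ok = predict_ok(image)  # model's probability that the part is OK
        if p_ok >= threshold:
            action = "pack"
        else:
            action = "rework"
            reject(component_id)  # e.g. PLC command to the actuator
        decisions.append(Decision(component_id, p_ok, action))
    return decisions


# Stand-ins: each "image" is already a score, and the actuator simply
# records which components it was told to remove.
removed: List[str] = []
batch = [("c1", 0.97), ("c2", 0.42), ("c3", 0.50)]
results = inspect_batch(batch, predict_ok=lambda img: img, threshold=0.5,
                        reject=removed.append)
for d in results:
    print(d.component_id, d.action)
```

Passing the predictor and actuator in as functions keeps the routing rule separate from the camera and PLC hardware, so the threshold logic can be checked offline before deployment.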

7. Results and Discussion

This section presents the precision and accuracy obtained by running the CNN models in Python and compares them with the analytical method. Precision is used to evaluate surface defect detection: it measures how many of the samples predicted as positive are actually positive. Table 3 lists the confusion-matrix counts for each model used in this work.

Table 3: Confusion-matrix counts for each model

| Model | TP | FP | FN | TN |
|---|---|---|---|---|
| CNN_Model_1 | 262 | 3 | 0 | 450 |
| CNN_Model_2 | 262 | 11 | 0 | 442 |
| CNN_Model_3 | 262 | 10 | 0 | 443 |

Table 4: Accuracy and precision of each model

| Model | Accuracy (%) | Precision (%) |
|---|---|---|
| CNN_Model_1 | 99.58 | 98.87 |
| CNN_Model_2 | 98.46 | 95.97 |
| CNN_Model_3 | 98.60 | 96.32 |

Table 4 reports the accuracy and precision of each model, computed from the counts in Table 3 using equations (1) and (2).
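Assuming equations (1) and (2) are the standard definitions, accuracy = (TP + TN) / (TP + FP + FN + TN) and precision = TP / (TP + FP), the values in Table 4 can be recomputed from the counts in Table 3 with a short script. Only the counts come from the paper; the function and variable names are illustrative.

```python
from typing import Dict

# Confusion-matrix counts copied from Table 3.
CONFUSION: Dict[str, Dict[str, int]] = {
    "CNN_Model_1": {"TP": 262, "FP": 3, "FN": 0, "TN": 450},
    "CNN_Model_2": {"TP": 262, "FP": 11, "FN": 0, "TN": 442},
    "CNN_Model_3": {"TP": 262, "FP": 10, "FN": 0, "TN": 443},
}


def accuracy(c: Dict[str, int]) -> float:
    """Share of all samples classified correctly: (TP + TN) / total."""
    return (c["TP"] + c["TN"]) / (c["TP"] + c["FP"] + c["FN"] + c["TN"])


def precision(c: Dict[str, int]) -> float:
    """Share of predicted positives that are truly positive: TP / (TP + FP)."""
    return c["TP"] / (c["TP"] + c["FP"])


for name, counts in CONFUSION.items():
    print(f"{name}: accuracy={100 * accuracy(counts):.2f}% "
          f"precision={100 * precision(counts):.2f}%")
# CNN_Model_1: accuracy=99.58% precision=98.87%
# CNN_Model_2: accuracy=98.46% precision=95.97%
# CNN_Model_3: accuracy=98.60% precision=96.32%
```

The recomputed precision for CNN_Model_1 is 98.87%, the figure quoted for that model in the discussion of figure 8.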

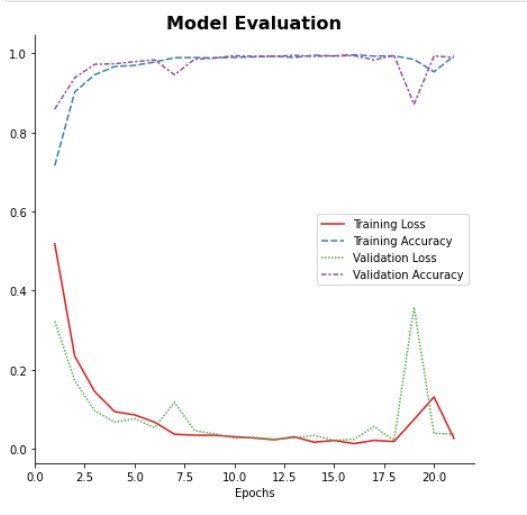

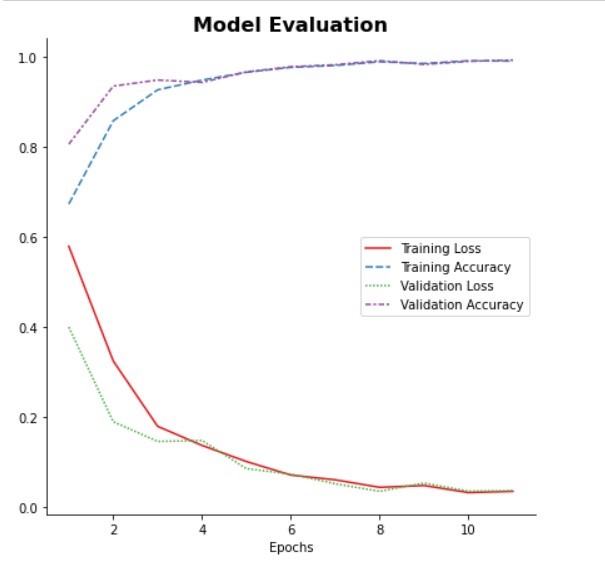

The classification report (Tables 3 and 4) shows that CNN_Model_1 achieves an accuracy of 99.58% with a precision of 98.87%. However, the training curves for CNN_Model_1 in figure 8 show that the model starts to overfit after epoch 17: the validation loss increases relative to the training loss, and the validation accuracy decreases relative to the training accuracy.
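One way to locate the onset of overfitting from logged training curves is to find the first epoch after which the gap between validation and training loss widens for several consecutive epochs. The sketch below is an assumption about how such a check could be automated, not the procedure used for figure 8; the loss values are synthetic and the `overfitting_onset` function and its `patience` parameter are hypothetical.

```python
from typing import Optional, Sequence


def overfitting_onset(train_loss: Sequence[float],
                      val_loss: Sequence[float],
                      patience: int = 3) -> Optional[int]:
    """Return the 1-based epoch after which the gap (val_loss - train_loss)
    grows for `patience` consecutive epochs, or None if it never does."""
    gaps = [v - t for t, v in zip(train_loss, val_loss)]
    for start in range(len(gaps) - patience):
        window = gaps[start:start + patience + 1]
        if all(later > earlier for earlier, later in zip(window, window[1:])):
            return start + 1  # list index 0 corresponds to epoch 1
    return None


# Synthetic curves for illustration only (not the data behind figure 8).
train_loss = [0.70, 0.50, 0.40, 0.33, 0.28, 0.24, 0.21, 0.18]
val_loss = [0.74, 0.53, 0.42, 0.34, 0.36, 0.39, 0.43, 0.48]
print(overfitting_onset(train_loss, val_loss))  # prints 4
```

On these synthetic curves the gap narrows until epoch 4 and widens at every later epoch, so the function reports epoch 4. On real logs, a larger `patience` makes the check less sensitive to noisy validation losses.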

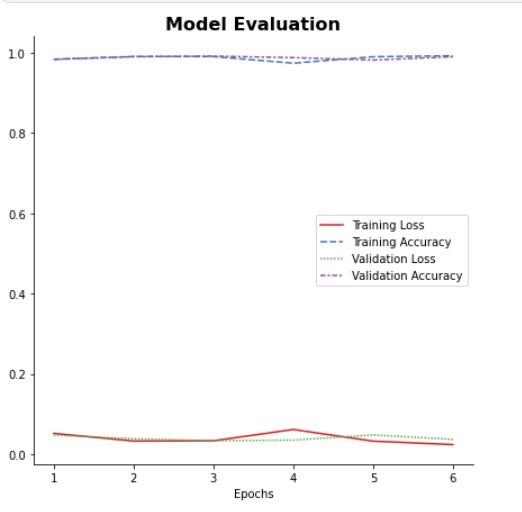

In CNN_Model_2, the output of CNN_Model_1 is used to retrain the model, with the aim of reducing overfitting. According to the classification report, CNN_Model_2 achieves an accuracy of 98.46% with a precision of 95.97%, which is promising. The training curves for CNN_Model_2 in figure 9 show that the model no longer overfits and that training stopped early at epoch 17, the epoch at which overfitting began in the previous model. This is confirmed in figure 9: after epoch 6 the validation loss tracks the training loss, and the validation accuracy tracks the training accuracy.

In CNN_Model_3, the output of CNN_Model_2 is used to retrain the model, with the aim of further improving precision. According to the classification report, CNN_Model_3 achieves an accuracy of 98.60% with a precision of 96.32%, which is also promising. However, the training curves for CNN_Model_3 in figure 10 show that training stopped just after epoch 6, when the model had only started to improve. Figure 10 also shows that the validation loss tracks the training loss from the start, and the validation accuracy tracks the training accuracy.

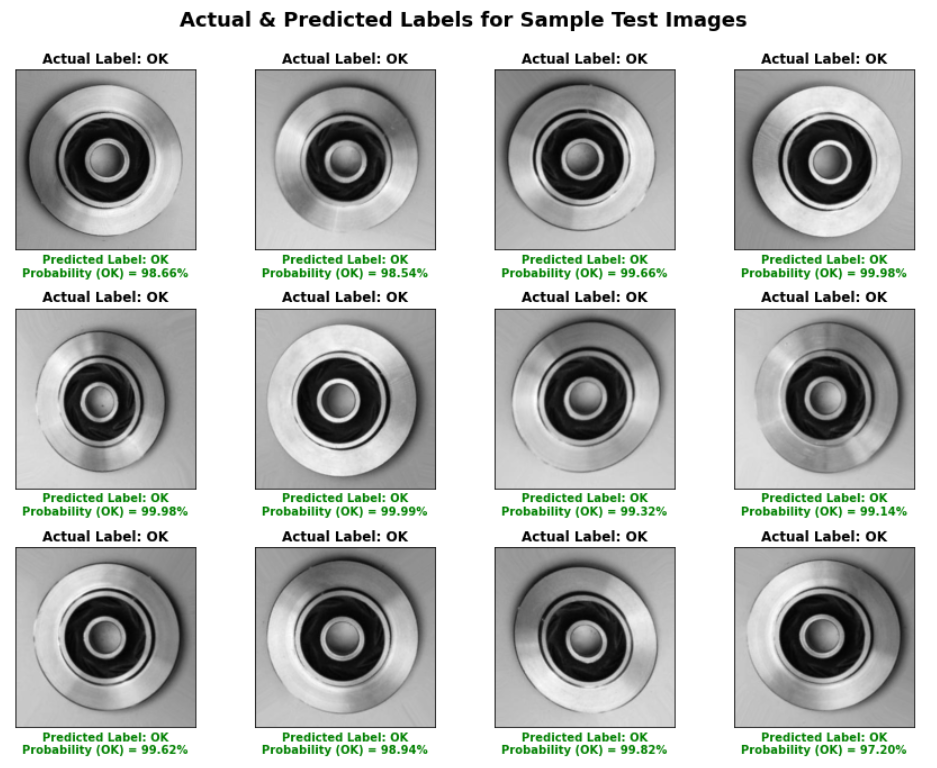

Figure 11 shows the actual and predicted labels for test sample images that were held out during training and validation. The test samples shown were all predicted correctly and labelled with their probability of being OK products. The probability threshold for product acceptance and rejection can therefore be set as appropriate.

For this study, the CNN_Model_2 architecture is preferred for inspection, with a precision of 95.97% and an accuracy of 98.46%. CNN_Model_1 shows large losses in the validation phase, so its error rate is likely to increase in the final test stage. CNN_Model_3 improved with each epoch, but it was trained and validated for only a small number of epochs, even though its loss in accuracy and precision is negligible. CNN_Model_2, by contrast, was trained and validated over an adequate number of epochs, and its evaluation shows negligible loss in both training and testing, so CNN_Model_2 is preferred.

Based on a deep CNN model architecture for product inspection, a machine vision inspection model and setup for quality assurance were developed. The investigation followed a precise model architecture in order to reduce the number of defective products dispatched to the market, and to do so faster than manual inspection. This was achieved with the proposed model, in which the existing CNN model was built with a fully connected layer and a checkpoint was set to save the output of the early-stopping function for each run. That saved output can then be used to retrain the same model, yielding a highly precise and accurate quality inspection model architecture.
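The checkpoint-plus-early-stopping step can be sketched without a framework, as below. It assumes the monitored quantity is validation loss and that the output kept for retraining is the set of weights from the best epoch; the class name and its `patience` and `min_delta` parameters are hypothetical, and the losses are synthetic. Keras offers comparable behaviour through its `EarlyStopping` callback (with `restore_best_weights=True`) and `ModelCheckpoint` callback (with `save_best_only=True`), but the paper does not state which framework or settings were used.

```python
import copy
from typing import Any, Optional


class EarlyStoppingCheckpoint:
    """Keep a copy of the weights from the best epoch (lowest validation
    loss) and signal a stop after `patience` epochs without improvement."""

    def __init__(self, patience: int = 3, min_delta: float = 0.0) -> None:
        self.patience = patience
        self.min_delta = min_delta
        self.best_loss = float("inf")
        self.best_epoch: Optional[int] = None
        self.best_weights: Any = None
        self.wait = 0

    def update(self, epoch: int, val_loss: float, weights: Any) -> bool:
        """Record one epoch; return True when training should stop."""
        if val_loss < self.best_loss - self.min_delta:
            self.best_loss = val_loss
            self.best_epoch = epoch
            self.best_weights = copy.deepcopy(weights)  # the checkpoint
            self.wait = 0
            return False
        self.wait += 1
        return self.wait >= self.patience


# Synthetic validation losses; the "weights" are stand-ins tagged by epoch.
val_losses = [0.74, 0.53, 0.42, 0.34, 0.36, 0.39, 0.43, 0.48]
stopper = EarlyStoppingCheckpoint(patience=3)
stopped_at = None
for epoch, loss in enumerate(val_losses, start=1):
    weights = {"trained_epochs": epoch}
    if stopper.update(epoch, loss, weights):
        stopped_at = epoch
        break

print(stopped_at, stopper.best_epoch, stopper.best_weights)
# prints: 7 4 {'trained_epochs': 4}
```

With these losses the validation loss is lowest at epoch 4, so training stops at epoch 7 after three epochs without improvement, and the weights stored for epoch 4 are the ones handed to the next retraining run.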

8. Conclusion

This paper investigates an approach to detecting defective products in the final production batch, before dispatch to the market, using a machine vision neural network model. The study focused on building a neural network and a virtual setup to detect surface defects in the impeller dataset. It is difficult even for an experienced human inspector to inspect a complete lot accurately. The machine vision model achieves an accuracy of 98.46% with a precision of 95.97%, which exceeds human accuracy. Unlike manual inspection, the model architecture inspects every component in the batch. Manual inspection in industry achieves an accuracy in the range of 70-80%, which also depends on the inspector's experience [3], [6]. The precision of the CNN model architecture is also higher than the 88% reported by Tajeddine Benbarrad et al. [3].

There is further scope to extend the inspection to assess surface and casting defect sizes and component geometry, and to separate components by the type of defect detected. This capability is worth investigating as an additional feature of the network.

Another direction for research is a system that separates defective from defect-free products during the machining process using the machine vision model. Alternatively, a system could be built for the final stage, after all machining processes are complete, to separate defective components by defect type, size or geometry.

Conflict of Interest

The authors declare no conflict of interest.

Acknowledgement

Praise be to God Almighty for providing me with the chance and guidance I needed to reach my goal. In addition, my mentors, Dr Deepak Hujare and Shrikant Yadav, provided invaluable assistance in executing the work. Their enthusiasm, knowledge, and meticulous attention to detail have inspired me and kept my work on track from the first meeting to the final copy of this piece.

- Angappa Gunasekaran, Nachiappan Subramanian, Wai Ting Eric Ngai, “Quality management in the 21st-century enterprises: Research pathway towards Industry 4.0”, International Journal of Production Economics, vol. 207, pp. 125-129, Jan. 2019, DOI: 10.1016/j.ijpe.2018.09.005.

- Weichong Ng, G. Wang, Siddhartha, Z. Lin, B. J. Dutta, “Range-Doppler Detection in Automotive Radar with Deep Learning”, 2020 International Joint Conference on Neural Networks (IJCNN), pp. 1-8, 2020, DOI: 10.1109/IJCNN48605.2020.9207080.

- Tajeddine Benbarrad, M. Salhaoui, Soukaina Bakhat Kenitar, M. Arioua, “Intelligent Machine Vision Model for Defective Product Inspection Based on Machine Learning”, Journal of Sensor and Actuator Networks, vol. 10, no. 1, 2021, DOI: 10.3390/jsan10010007.

- Fan Tien Cheng, Hao Tieng, Haw Ching Yang, Min Hsiung Hung, Yu Chuan Lin, Chun Fan Wei, Zih Yan Shieh, “Industry 4.1 for Wheel Machining Automation”, IEEE Robotics and Automation Letters, vol. 1, no. 1, pp. 332-339, 2016, DOI: 10.1109/LRA.2016.2517208.

- Ziqiu Kang, Cagatay Catal, Bedir Tekinerdogan, “Machine learning applications in production lines: A systematic literature review”, Computers & Industrial Engineering, vol. 149, 2020, DOI: 10.1016/j.cie.2020.106773.

- Anna Syberfeldt, Fredrik Vuoluterä, “Image Processing based on Deep Neural Networks for Detecting Quality Problems in Paper Bag Production”, 53rd CIRP Conference on Manufacturing Systems, Procedia CIRP, vol. 93, pp. 1224-1229, 2020, DOI: 10.1016/j.procir.2020.04.158.

- Mohamed Eldessouki, “Computer vision and its application in detecting fabric defects”, Applications of Computer Vision in Fashion and Textiles, Chapter 4, pp. 61-103, DOI: 10.1016/B978-0-08-101217-8.00004-X.

- Chao Liu, Leopold Le Roux, Ze Ji, Pierre Kerfriden, Franck Lacan, Samuel Bigot, “Machine Learning-enabled feedback loops for metal powder bed fusion additive manufacturing”, Procedia Computer Science, vol. 176, pp. 2586-2595, 2020, DOI: 10.1016/j.procs.2020.09.314.

- Z. M. Bi, Zhonghua Miao, Bin Zhang, Chris W. J. Zhang, “The state of the art of testing standards for integrated robotic systems”, Robotics and Computer-Integrated Manufacturing, vol. 63, p. 101893, 2020, DOI: 10.1016/j.rcim.2019.101893.

- Dennis Michael Aaron, “Marvin Minsky”, Encyclopedia Britannica, January 20, 2022, https://www.britannica.com/biography/Marvin-Lee-Minsky.

- Jay Lee, Hossein Davari, Jaskaran Singh, Vibhor Pandhare, “Industrial Artificial Intelligence for industry 4.0-based manufacturing systems”, Manufacturing Letters, vol. 18, pp. 20-23, 2018, DOI: 10.1016/j.mfglet.2018.09.002.

- Soumyalatha Naveen, “Study of IoT: Understanding IoT Architecture, Applications, Issues and Challenges”, International Conference on Innovations in Computing & Networking (ICICN16).

- Jamal N. Bani Salameh, Mokhled Altarawneh, Al-Qais, “Evaluation of cloud computing platform for image processing algorithms”, Journal of Engineering Science and Technology, vol. 14, pp. 2345-2358, 2019.

- K. M. Ting, “Confusion Matrix”, in: C. Sammut, G. I. Webb (eds), Encyclopedia of Machine Learning, Springer, Boston, MA, 2011, DOI: 10.1007/978-0-387-30164-8_157.

- Ravirajsinh Dabhi, “Casting Product Image Data for Quality Inspection”, Kaggle dataset, 2020.

- Nicholas Wells, Chung W. See, “Polynomial edge reconstruction sensitivity, subpixel Sobel gradient kernel analysis”, Engineering Reports, 2020, DOI: 10.1002/eng2.12181.

- Niall O’Mahony, Sean Campbell, Anderson Carvalho, Suman Harapanahalli, Gustavo Adolfo Velasco-Hernández, Lenka Krpalkova, Daniel Riordan, Joseph Walsh, “Deep Learning vs. Traditional Computer Vision”, Advances in Computer Vision: Proceedings of the 2019 Computer Vision Conference (CVC), Springer Nature Switzerland AG, pp. 128-144, 2019, DOI: 10.1007/978-3-030-17795-9_10.

- Tian Wang, Yang Chen, Meina Qiao, Hichem Snoussi, “A Fast and Robust Convolutional Neural Network-Based Defect Detection Model in Product Quality Control”, International Journal of Advanced Manufacturing Technology, vol. 94, no. 9-12, pp. 3465-3471, 2017, DOI: 10.1007/s00170-017-0882-0.

- Yu-Chuan Lin, Min-Hsiung Hung, Hsien-Cheng Huang, Chao-Chun Chen, Haw-Ching Yang, Yao-Sheng Hsieh, Fan-Tien Cheng, “Development of Advanced Manufacturing Cloud of Things (AMCoT): A Smart Manufacturing Platform”, IEEE Robotics and Automation Letters, vol. 2, no. 3, pp. 1809-1816, 2017, DOI: 10.1109/LRA.2017.2706859.

- Salhaoui M, Guerrero-González A, Arioua M, Ortiz FJ, El Oualkadi A, Torregrosa CL, “Smart Industrial IoT Monitoring and Control System Based on UAV and Cloud Computing Applied to a Concrete Plant”, Sensors, vol. 19, DOI: 10.3390/s19153316.

- Stefanos Doltsinis, Pedro Ferreira, Mohammed M. Mabkhot, Niels Lohse, “A Decision Support System for rapid ramp-up of industry 4.0 enabled production systems”, Computers in Industry, vol. 116, 2020, DOI: 10.1016/j.compind.2020.103190.
