Comprehensive E-learning of Mathematics using the Halomda Platform enhanced with AI tools

Journal of Engineering Research and Sciences, Volume 3, Issue 4, Page # 10-19, 2024; DOI: 10.55708/js0304002

Keywords: E-assessment, E-learning, Halomda educational platform, ChatGPT

(This article belongs to the Special Issue on SP4 (Special Issue on Computing, Engineering and Sciences 2023-24) and the Section Education and Educational Research (EER))


Slobodsky, P. and Durcheva, M. (2024). Comprehensive E-learning of Mathematics using the Halomda Platform enhanced with AI tools. Journal of Engineering Research and Sciences, 3(4), 10–19. https://doi.org/10.55708/js0304002

Philip Slobodsky and Mariana Durcheva. "Comprehensive E-learning of Mathematics using the Halomda Platform enhanced with AI tools." Journal of Engineering Research and Sciences 3, no. 4 (April 2024): 10–19. https://doi.org/10.55708/js0304002


The method of assessment affects learning by determining how students manage their time and prioritize subjects. It is widely accepted that students may demonstrate different skills in different assessment formats. The authors demonstrate how e-assessment through the Halomda educational platform can not only improve student learning outcomes but also enrich students’ learning experiences. In addition, it is shown how ChatGPT, integrated with two new math exploration tools into the proprietary Chat-Mat™ module, can help students learn at home and in the classroom, and can support teachers in their daily work of reviewing student assignments. The outcomes of courses taught with Halomda reveal not only impressive student performance on final exams but also a strong correlation between exam scores and weekly assignment grades.

1. Introduction

As with other learning activities, various e-learning methods are available, each tailored to the specific knowledge and skills to be acquired. Assessment, in turn, is designed with its context and purpose in mind, acknowledging that learners may show different strengths in different assessment formats and that active engagement in learning activities leads to deeper thinking and long-term retention of the concepts learned [1]. The method of assessment plays a key role in shaping learning outcomes, as it affects how students allocate their time and which areas they prioritize [2-3]. Assessment is typically divided into the following types: (1) formative assessment, which serves developmental purposes and takes place during the learning process; (2) summative assessment, which evaluates whether students have acquired the expected knowledge or skills; and (3) continuous assessment, in which both formative and summative assignments are used throughout the course. The feedback provided through formative assessment helps students track their learning progress and also serves as a motivational tool.

E-assessment is widely applied, especially in formative and continuous assessment. The authors of [4] point out that e-assessment employs traditional assessment methods, delivered through online processes and digital tools. However, e-learning and e-assessment are more than digital tools; for instance, “many students take e-assessments primarily for the marks, but in doing so, they learn from the questions and especially the feedback” [5]. As digital technology becomes more accessible in education, educators worldwide increasingly have to address its potential impact on students’ academic success. The authors of [6] argue that, when interacting with dialog-based AI models, users should define the goal and context of the desired content clearly and concisely, and guide the model by adding the instructions and parameters needed for an appropriate outcome. The authors agree with the authors of [7] that there are two main approaches to technology-assisted assessment: assessment with technology and assessment through technology.

Of course, e-learning and e-assessment have their own challenges. Cheating has become a greater problem with the increased use of e-assessment [8-9]: cheating and plagiarism undermine the validity and reliability of the e-assessment process and erode students’ trust in its integrity [10-12], and cheating poses a serious threat to important university exams, whether they are held on campus or online [13]. Student cheating and plagiarism in e-assessment, including through AI technologies, have been studied by several authors [14-15], [8], [11]. One approach to mitigating cheating in e-assessment is to use student authentication and authorship-checking systems, such as the TeSLA system [8-9], [11], [16]. However, such strategies are particularly well suited to humanities courses and are of limited effectiveness in engineering disciplines [17]. Biometric and plagiarism-checking systems are therefore only some of the measures available against cheating. Another way to prevent cheating in online assessment is to give each student unique test instructions, questions, and results. However, creating and administering personalized assessments for every student requires significant preparation time and may not be practical on a wide scale. Computer-based assessment tools can overcome this problem by streamlining the process and reducing setup time. Widely used learning management systems (LMS) offer various tools for creating tests or multiple-choice assignments and for letting students submit written assignments to the teacher for manual grading.

Following the increasing use of AI technologies, specifically ChatGPT, in education and the rapidly growing body of research in this field, Halomda developed a unique technology that enables students to create prompts containing complex math expressions, feed them into the built-in ChatGPT, and use the answers for self-learning. The authors share the hope of the authors of [6] that, by overcoming concerns about plagiarism and using artificial intelligence tools to remove existing barriers to learning, “the educational community will flourish in an increasingly AI-enhanced world”.

This paper is organized as follows: Section 2 discusses related systems for mathematics e-assessment; Section 3 presents the capabilities of the Halomda system; Section 4 is devoted to e-learning and e-assessment based on the Halomda platform; Section 5 draws conclusions.

2. E-assessment in higher mathematics courses

The use of e-assessment in higher mathematics courses has proven successful in various studies. In [18], the authors report that implementing e-assessment strategies, particularly multiple-choice questions in Moodle, improved teaching and learning processes. In [19], the author further supports this by demonstrating the effectiveness of online quizzes as a form of assessment in pure mathematics. However, [20] highlights the need for further research on assessment practice in proctored and unproctored math e-learning courses, particularly with regard to academic integrity. Well-known systems that support online assignments in mathematics include STACK [21], WebAssign [22], WeBWorK [23], and Numbas [24]. These systems primarily target basic mathematics, which limits the complexity of the problems that can be presented.

STACK uses the computer algebra system (CAS) Maxima, which can handle mathematical tasks such as manipulating expressions. The primary objective of the system is to evaluate students’ mathematical responses, generate problems, provide feedback, assign numerical grades, and create internal notes for subsequent analysis. The strength of a CAS lies in its ability to verify algebraic equivalence. However, if it is important to distinguish between different forms of an answer in a given exercise, the exercise author must specify in advance which answer formats are acceptable. Once created, exercises can be reused many times, reducing teachers’ time and workload. Furthermore, since the system operates independently of teacher supervision, assistants, or classroom availability, students can access it at any time and from any location. Clearly, not all question types are suitable for implementation with a CAS: assessing skills such as proof writing and other forms of reasoning cannot yet be automated [25]. Difficulties that students face with STACK often arise from having to use unfamiliar syntax when entering answers [26].

WebAssign is a versatile web-based instructional system that offers students additional practice opportunities and convenient access to their performance assessments. The underlying software recognizes mathematically equivalent expressions in the background. The system offers several benefits, including immediate feedback, multiple attempts, ease of access, and access to online tutoring [26]. Its primary drawbacks are technical difficulties experienced by some students; reduced interaction with instructors, which may lead to neglected assignments; the need to match the system’s format precisely when entering answers; and a less user-friendly experience when asking questions in the tutoring center [26]. From our point of view, the utility of WebAssign is somewhat limited because it primarily serves homework assignments. In addition, it is tied to specific textbooks and must be used together with the selected textbook, which further limits its applicability.

WeBWorK is an open-source online homework system for math and science courses that enables teachers to publish problems for students to solve online. Supported courses cover a range of subjects, including college algebra, discrete mathematics, probability and statistics, single and multivariable calculus, differential equations, linear algebra, and complex analysis. However, the engineering content currently available in the WeBWorK library is limited [27]. Furthermore, the utility of WeBWorK is constrained by its primary focus on homework assessment.

Numbas is an online assessment system designed specifically for mathematics, and it offers several benefits [28]. The system excels at formative assessment and uses client-side grading, although it still requires a server to store grades. Numbas makes it easy to write questions in appropriate mathematical notation, and students can enter symbols that are displayed mathematically. The system supports complex questions with features such as randomization and automatic scoring, and it integrates easily with learning management systems. However, its use is subject to limitations related to infrastructure, support, the skills required of both teachers and students, and time constraints [28].

3. The Halomda Platform

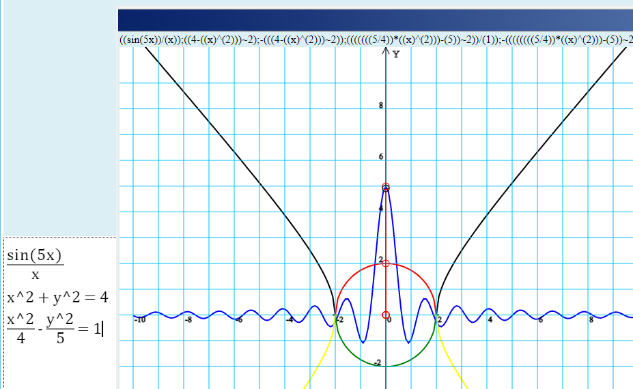

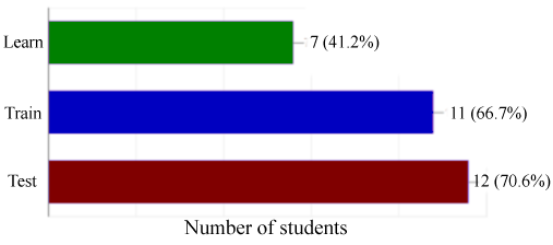

The primary didactic advantage of the Halomda platform over the aforementioned e-systems is its ability to integrate the three main methods of mathematics education: Learn, Train, and Test (Figure 1) [29-31].

The need for a universal, user-friendly, simple yet powerful math platform prompted Halomda Educational Software [29] to develop Math-Xpress, first introduced in 2001 [30]. Additional modules were subsequently integrated into the program, forming a multifunctional math package with a unified user interface.

These interconnected modules rely on proprietary computer algebra (CA) and interactive geometry algorithms, enabling linkage between modules that share common objects. The system comprises five modules:

- XPress-Editor – a formula editor for graphical WYSIWYG (What You See Is What You Get) editing of math expressions, which enables people without programming experience to compose new items [31].

- XPress-Graph – a graph plotter.

- XPress-Geometry – a 2-D and 3-D geometry explorer.

- XPress-Evaluator.

- XPress-Tutor – can be used independently for e-assessment purposes. Together with the XPress-Task Editor, these modules form a comprehensive platform for e-teaching, e-learning, and e-assessment of mathematics courses.

Over the past few years, thousands of problems have been developed to cover courses in arithmetic, elementary algebra, and geometry for primary and intermediate schools, as well as algebra, trigonometry, and introduction to calculus for high schools. Additionally, courses in Calculus, Linear algebra, Differential equations, Probability, and Statistics have also been addressed. Throughout the course, students receive a comprehensive set of basic problems that thoroughly cover the curriculum. Typically, this set comprises 50 to 150 problems, arranged into 13 weekly assignments for one semester [31].

3.1. Typical student interaction with the platform

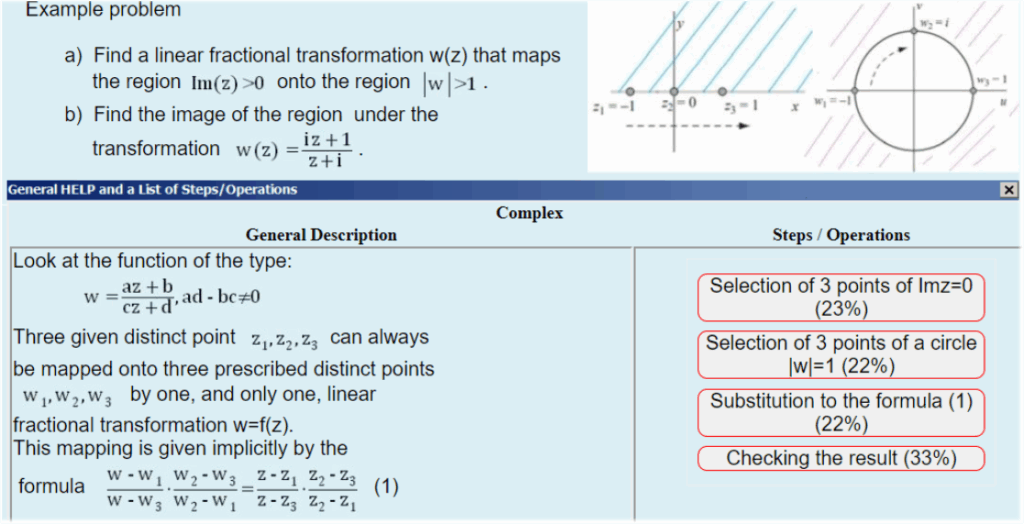

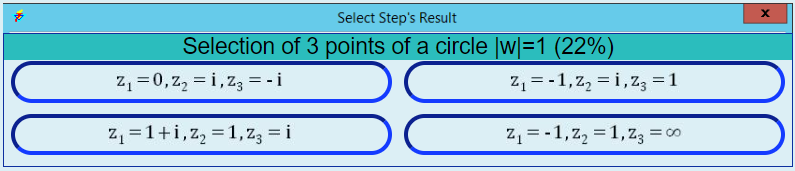

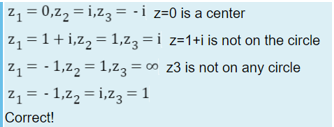

In Learn mode, students are presented with a series of problems, each with randomly selected parameters, so that different attempts produce different initial parameter sets. Students can request help at three levels: General help, which describes a solution method common to all problems within a particular topic; a List of steps to solve the problem, with a description of each step; and the Result of each solution step. In Train mode, students are offered multiple-choice options for the result of each step, which allows extensive interactive training. In Test mode, no help is provided, and the student must complete a series of tasks that simulate the conditions of a regular test.
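The parameter randomization and the three-level help described above can be illustrated with a small sketch. It assumes a hypothetical task template for linear equations a·x + b = c; the class name `LinearTask`, the parameter ranges, and the step wording are illustrative assumptions, not Halomda's internal task format. Seeding the generator per attempt makes each attempt reproducible, while different seeds yield different parameter sets.

```python
import random
from dataclasses import dataclass
from fractions import Fraction


@dataclass(frozen=True)
class LinearTask:
    """Hypothetical task template: solve a*x + b = c with random integer parameters."""
    a: int
    b: int
    c: int

    @classmethod
    def generate(cls, rng: random.Random) -> "LinearTask":
        # Pick the solution first so that every generated task has an integer answer.
        a = rng.choice([n for n in range(-9, 10) if n not in (0, 1)])
        x = rng.randint(-10, 10)
        b = rng.randint(-20, 20)
        return cls(a, b, a * x + b)

    def statement(self) -> str:
        sign = "+" if self.b >= 0 else "-"
        return f"Solve {self.a}x {sign} {abs(self.b)} = {self.c}"

    def solution(self) -> Fraction:
        return Fraction(self.c - self.b, self.a)

    def steps(self) -> list[tuple[str, str]]:
        # (description, result) pairs: the "List of steps" and "Result" help levels.
        rhs = self.c - self.b
        return [
            ("Move the constant term to the right-hand side", f"{self.a}x = {rhs}"),
            (f"Divide both sides by {self.a}", f"x = {self.solution()}"),
        ]


# Each attempt gets its own seed, so a retry shows a different instance of the same task.
for attempt_seed in (101, 102):
    task = LinearTask.generate(random.Random(attempt_seed))
    print(task.statement(), "->", task.steps()[-1][1])
```

Because tasks differ between modes only in their random parameters, one template of this kind could in principle serve Learn, Train, and Test; only the help shown to the student would change.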

Students usually start in Test mode, attempting to solve problems without assistance. The system allows them to quit the test without penalty but limits the number of attempts for each task. Students who exit Test mode because of difficulties can switch to Learn or Train mode to learn how to solve similar problems. Tasks in Test mode differ from those in Learn and Train modes only in the values of their randomly generated parameters. The Learn and Train modes offer more than problem solving: they include additional educational resources, such as links to external files, websites, and videos, that enrich the learning experience.

3.2. Mitigating cheating through the Halomda platform

The more open the format of students’ responses, the lower the likelihood of cheating. For example, requiring students to enter their answers manually rather than choose from multiple options greatly reduces the likelihood of dishonesty. In exam mode, Math-Xpress evaluates student responses in one of two ways: with so-called “semi-intelligent” multiple-choice questions (SI-MCQs) or in open-ended (OA) mode. Both approaches are demonstrated with the problem shown in Figure 2:

In Learn mode, a student can obtain candidate results for each step of the solution: one of them is the correct answer, and the other three are wrong (Figure 3, a). As in a usual MCQ mode, the student selects an answer by clicking on it (in our example: ). If the answer is wrong, the student receives short feedback, as shown in Figure 4.

Since free-hand typing is also possible, the student may instead enter another expression, for example, just: ), and the system will accept it. This capability depends on the task developer (teacher or instructor), who tries to anticipate the most likely forms of the correct answer that students may enter.
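The SI-MCQ logic can be sketched for a hypothetical step that factors x^2 - 4. The class `StepItem`, its fields, and the feedback texts are illustrative assumptions, not Halomda's data model. The check shown is a strict comparison (ignoring whitespace) against the correct result, the forms anticipated by the task developer, and the listed distractors, so a form nobody anticipated is simply not recognized.

```python
from dataclasses import dataclass, field


def normalize(text: str) -> str:
    """Strip all whitespace so that '(x - 2)' and '(x-2)' compare equal."""
    return "".join(text.split())


@dataclass
class StepItem:
    """Hypothetical SI-MCQ step: one correct result, three distractors with feedback."""
    correct: str
    distractors: dict[str, str]  # wrong option -> short feedback shown to the student
    # Further correct forms anticipated by the task developer for free-hand typing.
    accepted_forms: list[str] = field(default_factory=list)

    def options(self) -> list[str]:
        # The four options offered for clicking (a real system would shuffle them).
        return [self.correct, *self.distractors]

    def check(self, answer: str) -> tuple[bool, str]:
        typed = normalize(answer)
        if typed in {normalize(f) for f in [self.correct, *self.accepted_forms]}:
            return True, "Correct"
        for wrong, feedback in self.distractors.items():
            if typed == normalize(wrong):
                return False, feedback
        return False, "Answer not recognized; check your expression."


step = StepItem(
    correct="(x-2)(x+2)",
    distractors={
        "(x-2)^2": "This expands to x^2 - 4x + 4.",
        "(x-4)(x+4)": "This expands to x^2 - 16.",
        "x(x-4)": "This expands to x^2 - 4x.",
    },
    accepted_forms=["(x+2)(x-2)"],
)
print(step.check("(x + 2)(x - 2)"))
print(step.check("(x-2)^2"))
```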

In open-ended (OA) mode, the result windows are hidden, and students are prompted to enter the correct answer manually. Here, the task designer can choose between two evaluation options: requiring strict identity of expressions or accepting algebraic equivalence. In the first case, the student’s answer is compared with all the answers (both correct and incorrect) listed in the task. In the second case, computer algebra methods are used to determine algebraic equivalence. For example, the following pairs are considered equivalent:

$$\frac{2}{\sqrt{2}} \equiv \sqrt{2}, \qquad 5^{-2x} \equiv \frac{1}{25^{x}}, \qquad y = 3x + 6 \iff x = \frac{y}{3} - 2$$
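Halomda's computer-algebra routines are proprietary. As a stand-in, the sketch below checks the algebraic equivalence of single-variable expressions probabilistically, by evaluating both at random sample points. This is a swapped-in numerical technique, not the platform's CA method. Input uses Python syntax (`**`, `sqrt`), and Python's `eval` with builtins removed is used only for illustration; it is not a safe parser for untrusted student input.

```python
import math
import random

# Functions a student expression may use (illustrative whitelist).
ALLOWED = {"sqrt": math.sqrt, "log": math.log, "exp": math.exp,
           "sin": math.sin, "cos": math.cos, "pi": math.pi}


def evaluate(expr: str, x: float) -> float:
    """Evaluate expr at x. Illustration only: eval is not a safe parser."""
    return float(eval(expr, {"__builtins__": {}}, {**ALLOWED, "x": x}))


def algebraically_equivalent(a: str, b: str, trials: int = 25, seed: int = 0) -> bool:
    """Probabilistic test: a and b agree at random sample points of x."""
    rng = random.Random(seed)
    compared = 0
    for _ in range(trials):
        x = rng.uniform(0.1, 3.0)
        try:
            va, vb = evaluate(a, x), evaluate(b, x)
        except (ArithmeticError, ValueError, TypeError):
            continue  # x lies outside the domain of one of the expressions
        if not math.isclose(va, vb, rel_tol=1e-9, abs_tol=1e-12):
            return False
        compared += 1
    return compared > 0


print(algebraically_equivalent("2/sqrt(2)", "sqrt(2)"))  # first pair above
print(algebraically_equivalent("5**(-2*x)", "1/25**x"))  # second pair above
print(algebraically_equivalent("x**2", "2*x"))
```

Equations such as the third pair would need extra handling (for example, comparing solution sets), which this sketch omits.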

An exam may consist of various tasks, each requiring a different assessment method. It is the responsibility of the teacher to determine the most suitable assessment approach for each problem.

Running a math assessment system within Moodle (or another learning management system, LMS) can strengthen cheating prevention by combining random test selection with randomization of task parameters. This requires integrating the core math functionality (such as computer algebra operations, expression evaluation, and test result calculation) into the Moodle code and transferring student data to the Moodle LMS.

Halomda has developed a plugin that enables the system to operate seamlessly within Moodle, facilitating the transfer of results into the Moodle LMS.
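A minimal sketch of combining random test selection with parameter randomization follows. It assumes a pool of task identifiers and a per-student seed derived from hypothetical student and test IDs; the function names and ID formats are illustrative and say nothing about how the Halomda Moodle plugin actually works. Deriving the seed from the IDs lets the same test be regenerated later for review or regrading.

```python
import hashlib
import random


def student_rng(student_id: str, test_id: str) -> random.Random:
    """Deterministic generator per (test, student). Python's hash() is avoided
    because it is salted per process for strings."""
    digest = hashlib.sha256(f"{test_id}:{student_id}".encode()).digest()
    return random.Random(int.from_bytes(digest[:8], "big"))


def assemble_test(pool: list[str], k: int, student_id: str, test_id: str) -> list[tuple[str, int]]:
    """Hypothetical helper: pick k distinct tasks and give each its own parameter seed."""
    if k > len(pool):
        raise ValueError("pool is smaller than the requested number of tasks")
    rng = student_rng(student_id, test_id)
    chosen = rng.sample(pool, k)  # random test selection
    return [(task, rng.getrandbits(32)) for task in chosen]  # parameter randomization


pool = [f"task-{i:02d}" for i in range(20)]
print(assemble_test(pool, 3, "student-17", "week-05"))
```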

3.3. Integration of ChatGPT into the Chat-Mat™ module of Halomda

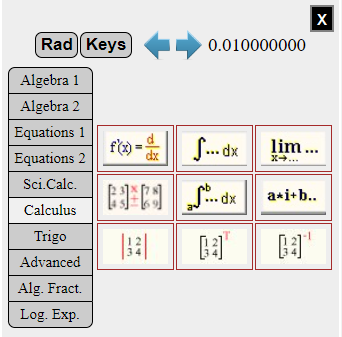

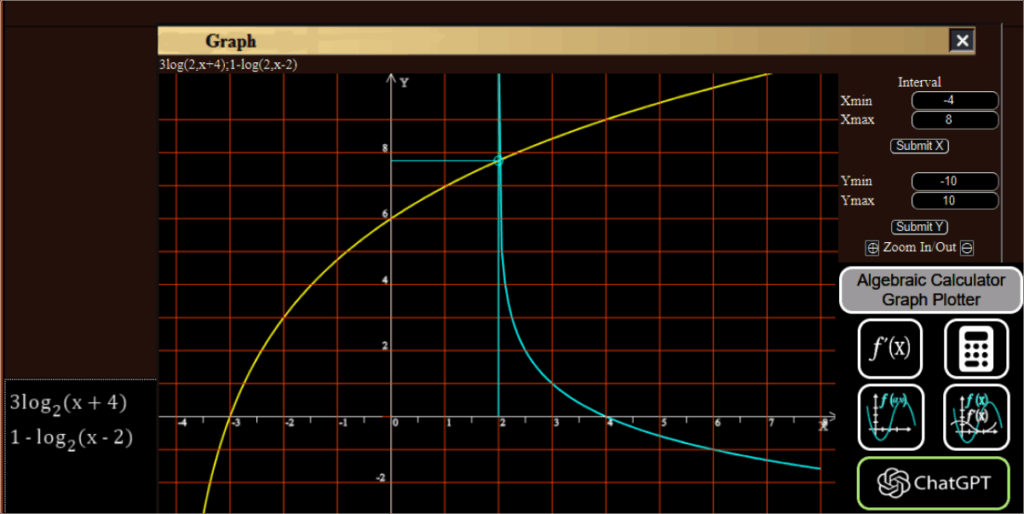

To give students easy access to ChatGPT, the latest version of the Halomda platform includes it among several new built-in features: Graph-Men (plotting and exploring graphs) (Figure 5), the Algebraic Calculator (an equation solver and symbolic evaluator covering 10 areas of mathematics) (Figure 6), and ChatGPT with recommended Key Prompts (Figure 7).

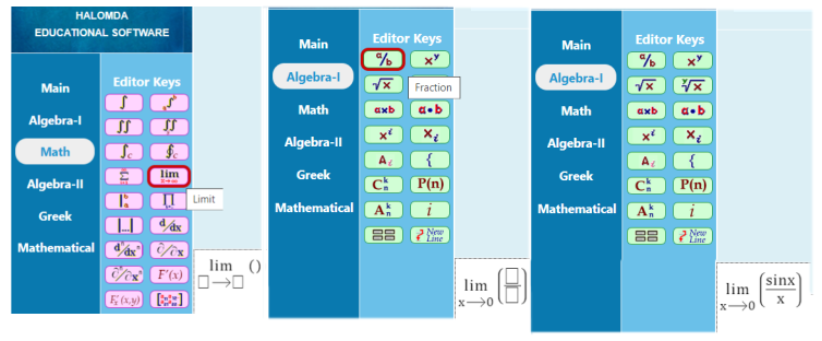

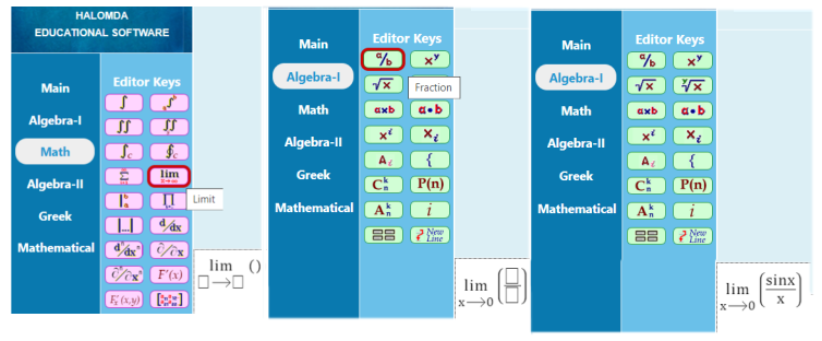

In addition to incorporating an AI bot into the system interface, Halomda developed a tool for graphically editing mathematical expressions and exporting them in the LaTeX format used in ChatGPT prompts. For instance, to compose a prompt asking ChatGPT to calculate the limit \(\lim_{x \to 0} \frac{\sin x}{x}\), one has to type:

`lim_{x\rightarrow0}{(\frac{sinx}{x})}&&`

The graphical editor lets students enter limits using the appropriate editor templates (Figure 8) and then copy and paste the result into the ChatGPT message box, which makes communicating with the bot easier and more effective.
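What the graphical editor produces can be pictured as converting an expression tree into a LaTeX string. The following is a minimal sketch with hypothetical node classes (`Sym`, `Func`, `Frac`, `Limit`) and a hypothetical prompt wording; Halomda's editor and its exact output format may differ.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Union


# Hypothetical expression-tree nodes, each able to render itself as LaTeX.
@dataclass
class Sym:
    name: str

    def latex(self) -> str:
        return self.name


@dataclass
class Func:
    name: str  # a function with a LaTeX command, e.g. "sin"
    arg: Expr

    def latex(self) -> str:
        return f"\\{self.name}{{{self.arg.latex()}}}"


@dataclass
class Frac:
    num: Expr
    den: Expr

    def latex(self) -> str:
        return f"\\frac{{{self.num.latex()}}}{{{self.den.latex()}}}"


@dataclass
class Limit:
    var: Sym
    to: Expr
    body: Expr

    def latex(self) -> str:
        return f"\\lim_{{{self.var.latex()} \\to {self.to.latex()}}} {self.body.latex()}"


Expr = Union[Sym, Func, Frac, Limit]


def make_prompt(expr: Expr) -> str:
    # Display-math delimiters around the expression; the wording is illustrative.
    return f"Calculate $${expr.latex()}$$ and explain each step."


x = Sym("x")
limit = Limit(x, Sym("0"), Frac(Func("sin", x), x))
print(make_prompt(limit))
```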

3.4. Key Prompts as a new form of self-learning

The main didactic questions arising from most publications on the use of ChatGPT in mathematics education ([32], [33], [34]) are:

- How can the frequent incorrect responses of ChatGPT be dealt with effectively?

- In what ways can students utilize ChatGPT as a math tutor beyond just direct problem-solving, considering the occasional inaccuracies?

The suggested answers, based on the teachers’ experience and implemented by Halomda in the Chat-Mat module, can be summarized in the following statement: success is directly proportional to the time spent studying the subject. The more activities offered to students, the greater the expected success!

Following this idea, the authors suggest that communicating with ChatGPT will stimulate students by presenting aspects of problem solving beyond the algebraic techniques provided by the Learn and Train modes of the system. To achieve this, the authors recommend that students ask the AI bot diverse questions. These questions (called prompts) should broaden students’ overall knowledge and understanding of the subject and refine their problem-solving abilities, ultimately leading to greater success in exams. According to [35], “effective prompts are characterized by the following fundamental principles: clarity and precision, contextual information, desired format, and verbosity control”, which means that “writing effective prompts is complicated for nontechnical users, requiring creativity, intuition, and iterative refinement”.

To help students compose appropriate prompts, a list of recommended “Key Prompts” is included with each problem in the Learn and Train sections of the system. The list contains three types of prompts, reflecting different didactic ways of engaging students:

- Solving the equation, performing algebraic operations, solving integrals, finding derivatives, limits, etc., as relevant to the topic. Although ChatGPT’s solution may sometimes be incorrect or incomplete, its added value lies in the complementary verbal explanation it offers; the contextual Help recommends that students verify the answer with the Algebraic Calculator or plot a graph with Graph-Man, two other modules of the Chat-Mat package.

- Recalling definitions, theorems, and proofs relevant to the subject.

- Asking broad questions about history and practical applications, as well as “tricky” or amusing questions (such as paradoxes).
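The three types of Key Prompts can be organized as a small data structure attached to each problem. The sketch below is an assumption about how such a list might be generated from templates; the names `PromptType`, `KeyPrompt`, and `key_prompts` are hypothetical, and the prompt texts are our own examples rather than Halomda's Key Prompts. Each template states its context, the desired format, and a length limit, in line with the principles quoted from [35].

```python
from dataclasses import dataclass
from enum import Enum


class PromptType(Enum):
    SOLVE = "solve or compute"                  # first type in the list above
    THEORY = "definitions, theorems, proofs"    # second type
    BROAD = "history, applications, paradoxes"  # third type


@dataclass(frozen=True)
class KeyPrompt:
    kind: PromptType
    text: str


def key_prompts(topic: str, problem_latex: str) -> list[KeyPrompt]:
    """Hypothetical template set: one prompt of each type for a given problem."""
    return [
        KeyPrompt(PromptType.SOLVE,
                  f"Solve $${problem_latex}$$ step by step, "
                  f"giving a one-line reason for each step."),
        KeyPrompt(PromptType.THEORY,
                  f"In the context of {topic}, state the definitions and theorems needed "
                  f"to solve $${problem_latex}$$, without solving it."),
        KeyPrompt(PromptType.BROAD,
                  f"In at most five sentences, describe one practical application of {topic} "
                  f"and one historical fact about it."),
    ]


for prompt in key_prompts("logarithmic equations", r"3\log_{2}(x + 4) = 1 - \log_{2}(x - 2)"):
    print(f"[{prompt.kind.name}] {prompt.text}")
```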

Providing students with a list of possible (recommended) prompts not only teaches them to compose their own prompts but also enhances their learning experience by providing additional content required for a deeper comprehension of the subject matter.

The only means of communicating with ChatGPT is a prompt. A student who wants help solving a math problem from a class assignment only needs to copy the question into the ChatGPT message window, writing the mathematical expressions in LaTeX. For this purpose, the Chat-Mat module of the Halomda platform includes a tool for editing expressions and copying them into ChatGPT.

Besides entering mathematical expressions for evaluation, it is useful to phrase prompts so that ChatGPT generates a concise but comprehensive answer. To show students how to write math prompts, short Instructions containing rules and recommendations are included in the list of Key Prompts, which meets the didactic need for effective use of ChatGPT.

The role of Key Prompts is twofold: first, to show students how to formulate math prompts, and second, to enhance the overall value of the course, as outlined above.

The combination of three exploration tools – Key Prompts, Algebraic Calculator and Graph Plotter – allows students to not only observe the solution (provided by ChatGPT), but also check its correctness using the Algebraic Calculator, receive explanations from ChatGPT, and visualize and check it using the Graph Plotter.

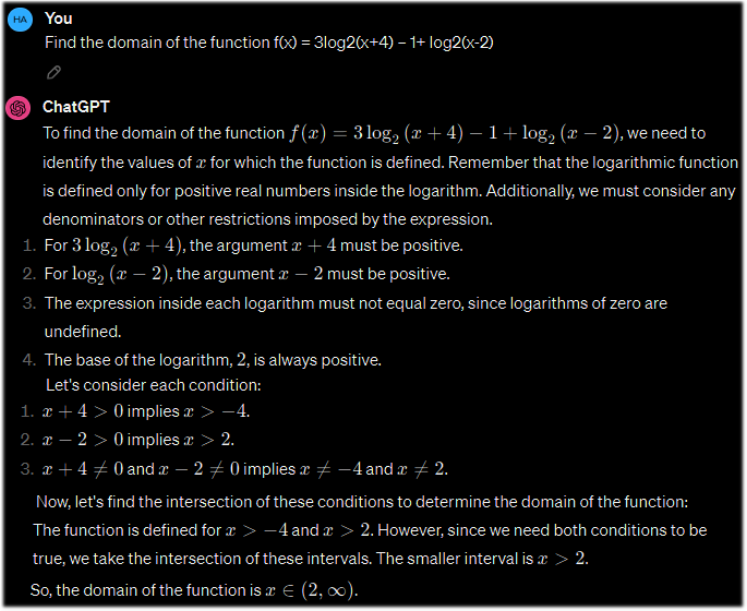

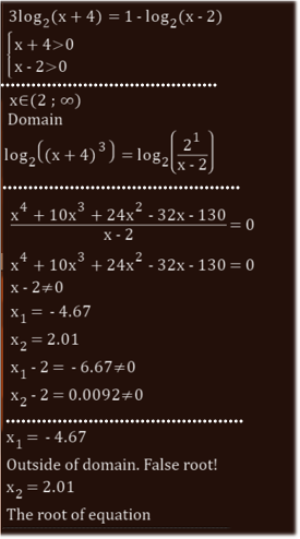

The following example demonstrates how the Chat-Mat™ module can be used to solve a problem:

Problem 1. Find the domain of the function

$$f(x) = 3\log_{2}(x + 4) - 1 + \log_{2}|x - 2|$$

and solve the equation:

$$3\log_{2}(x + 4) = 1 - \log_{2}(x - 2)$$
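For reference, the exact answers to Problem 1 follow directly from the definition of the logarithm. This working is our own and is given only to make the comparison with the bot’s output concrete:

$$D(f):\ x + 4 > 0,\ x - 2 \neq 0 \ \Longrightarrow\ x \in (-4, 2) \cup (2, \infty)$$

$$3\log_{2}(x + 4) = 1 - \log_{2}(x - 2),\ x > 2 \iff \log_{2}\left[(x + 4)^{3}(x - 2)\right] = 1 \iff (x + 4)^{3}(x - 2) = 2$$

For x > 2, the left-hand side of the last equation is strictly increasing and takes every positive value, so the equation has exactly one root, x ≈ 2.0092, which is most easily found numerically.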

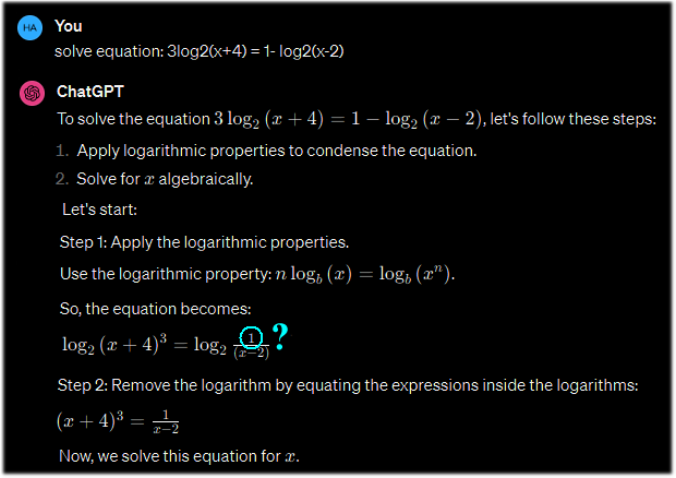

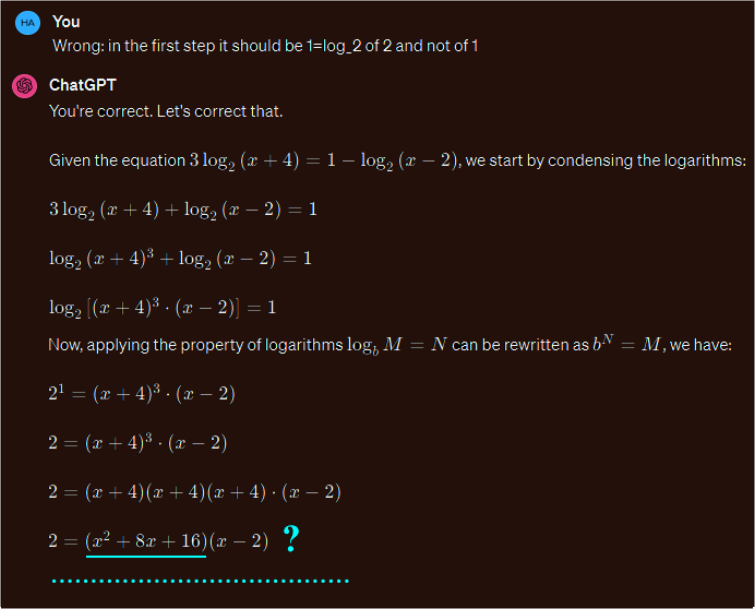

ChatGPT 3.5 answers the first question correctly, with detailed explanations (Figure 9), but fails to solve the equation: it gives (correct!) comments on incorrect calculations (Figures 10 and 11) and arrives at a wrong final answer (Figure 11).

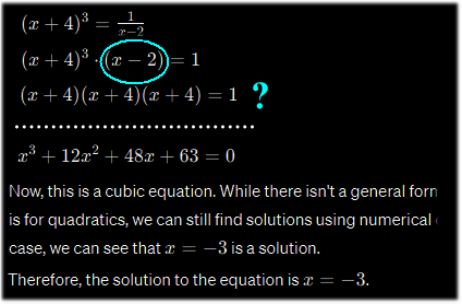

Students who are aware of the bot’s possible mistakes can follow the Instructions and check the result in another way, for example visually, by plotting the corresponding graphs with the graph module of Chat-Mat (Figure 12, a), or with the Algebraic Calculator (Figure 12, b).

The intersection point of the two curves in Figure 12, a), which are the graphs of the functions on the two sides of the equation, shows that the bot’s solution is wrong. This may encourage students to analyze why this happened, which is in fact the (hidden) didactic purpose of the Chat-Mat activity!
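The Graph Plotter check can also be reproduced numerically: the solution of the equation is the zero of the difference of its two sides, which bisection finds on any interval where that difference changes sign. The sketch below is our own illustration, with hypothetical names `h`, `bisection`, and `satisfies`; it is not the algorithm used by Chat-Mat. Any candidate value, such as the one proposed by the bot, can be tested with `satisfies`.

```python
import math


def h(x: float) -> float:
    """Left side minus right side of 3*log2(x+4) = 1 - log2(x-2); defined for x > 2."""
    return 3 * math.log2(x + 4) - (1 - math.log2(x - 2))


def bisection(f, lo: float, hi: float, tol: float = 1e-12, max_iter: int = 200) -> float:
    """Find a root of f in [lo, hi], assuming f(lo) and f(hi) have opposite signs."""
    f_lo = f(lo)
    if f_lo * f(hi) > 0:
        raise ValueError("f(lo) and f(hi) must have opposite signs")
    for _ in range(max_iter):
        mid = (lo + hi) / 2
        f_mid = f(mid)
        if f_mid == 0 or (hi - lo) / 2 < tol:
            return mid
        if (f_mid < 0) == (f_lo < 0):
            lo, f_lo = mid, f_mid  # sign change lies in the right half
        else:
            hi = mid  # sign change lies in the left half
    return (lo + hi) / 2


def satisfies(candidate: float, tol: float = 1e-6) -> bool:
    """Check a proposed solution: it must lie in the domain and make h vanish."""
    return candidate > 2 and abs(h(candidate)) < tol


# h is very negative just right of x = 2 and positive at x = 3.
root = bisection(h, 2 + 1e-9, 3.0)
print(f"x = {root:.6f}, residual = {h(root):.1e}")
```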

Students can check each of the bot’s solution steps to find the mistake in its calculations (Figures 10 and 11) and/or use the Algebraic Calculator (Figure 12, b) to compare the solutions and locate ChatGPT’s errors. The AI capabilities of ChatGPT also let them continue the conversation and try to fix the problem (Figure 13).

Such interactions between a student and the three Chat-Mat tools (ChatGPT, the Graph Plotter, and the Algebraic Calculator) provide an opportunity for a deeper understanding of the subject, even when ChatGPT solves the problem incorrectly.

To help students compose their own prompts, lists of Key Prompts have been created and included in several assignments in Mathematics and Physics, such as Complex Functions, Calculus-I, Transcendental Functions, Basics of Quantum Mechanics, and more.

4. E-assessment via the Halomda platform

The availability of software for solving mathematical problems has always been problematic because students may misuse it during online examinations. The Halomda platform does not allow students to get help from the built-in Algebraic Calculator or the system’s Help option, nor to access ChatGPT: all such options are blocked in Test mode (i.e., during exams).

Using ChatGPT or math-solving software during an online exam, or even an asynchronous test, is practically impossible, since writing math prompts in LaTeX requires Halomda’s built-in tools, which are typically disabled in Test mode. Additionally, in the Test mode of the Halomda system, solving a problem often requires answering intermediate steps. Furthermore, the randomization of task parameters helps mitigate cheating, which is crucial for trustworthy assessment.

Thus, the AI-based problem-solving capabilities of the Halomda platform do not interfere with the e-assessment it offers.
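The blocking of tools in Test mode amounts to a mode-dependent access policy. A minimal sketch with hypothetical names (`Mode`, `Tool`, `POLICY`, `request_tool`): the text states only that all such options are blocked in Test mode, so making every tool available in Learn and Train below is an assumption.

```python
from enum import Enum, auto


class Mode(Enum):
    LEARN = auto()
    TRAIN = auto()
    TEST = auto()


class Tool(Enum):
    HELP = auto()  # general help, list of steps, step results
    ALGEBRAIC_CALCULATOR = auto()
    GRAPH_PLOTTER = auto()
    CHATGPT = auto()


POLICY: dict[Mode, frozenset[Tool]] = {
    Mode.LEARN: frozenset(Tool),  # assumption: everything available while learning
    Mode.TRAIN: frozenset(Tool),  # assumption: everything available while training
    Mode.TEST: frozenset(),       # all such options are blocked in Test mode
}


def request_tool(mode: Mode, tool: Tool) -> None:
    """Raise PermissionError if the tool is blocked in the current mode."""
    if tool not in POLICY[mode]:
        raise PermissionError(f"{tool.name} is not available in {mode.name} mode")


request_tool(Mode.LEARN, Tool.CHATGPT)  # allowed: returns None
```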

The benefits of the three learning modes, Learn (presenting the basic concepts of the subject), Train (allowing practice), and Test (providing opportunities for assessment, including self-assessment), can be summarized as follows:

- For students, the platform offers a comprehensive learning tool that includes an interactive e-textbook and extensive training on common problems encountered in the final exam. Combined with the ability to provide instant feedback, the Halomda platform significantly improves the learning process.

- For educators, the platform provides an interactive lesson presentation guide along with automatically generated trustworthy grades for midterm assignments.


Over the past decade, five basic Higher Mathematics courses at Ariel University in Israel have used the Halomda educational platform for teaching and assessment, serving more than 3,000 students annually. The platform’s e-assessment module proved indispensable for conducting online assessments during the pandemic [36]. In addition, Calculus-1 and Elementary Math courses were taught to 60 students at the Talpiot and Orot teachers’ colleges, respectively. Each course was structured into 13 lessons, held face-to-face or online, followed by problem-solving seminars. Accordingly, students were given 13 mandatory weekly assignments in the Test mode of the Halomda platform, each typically comprising 10 tasks with randomized parameters. Each task included detailed solutions and explanations, serving as an interactive e-textbook for students and as an instructional guide for teachers during online sessions. The Train mode gave students the necessary practice, while the Test mode encouraged them to tackle all typical problems, comprehensively covering the course curriculum.

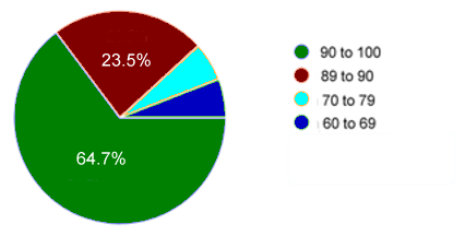

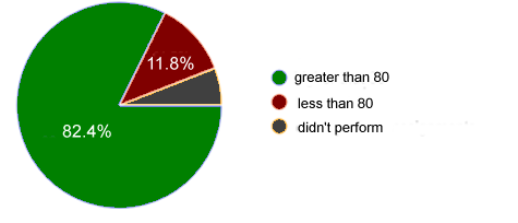

Upon completing the course, students were invited to take part in an online questionnaire. They were asked for their final exam grade and their average grade across all XPress-Tutor assignments. The reported results show both high final exam scores (Figure 14, a) and a positive correlation between exam grades and weekly assignment grades (Figure 14, a and b).
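The paper does not state which correlation coefficient was computed. Assuming Pearson’s r, the relationship between weekly assignment averages and exam grades could be quantified as in the sketch below. The function is a plain implementation of the standard formula, and the grade lists in the usage example are invented placeholders, not the survey data.

```python
import math


def pearson_r(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient of two equally long samples."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two samples of equal length >= 2")
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant data")
    return cov / math.sqrt(var_x * var_y)


# Placeholder values for illustration only (not data from the study).
assignment_avg = [62.0, 75.0, 81.0, 88.0, 95.0]
exam_grade = [58.0, 70.0, 85.0, 84.0, 93.0]
print(round(pearson_r(assignment_avg, exam_grade), 3))
```

Python 3.10 and later also provide `statistics.correlation`, which computes the same coefficient.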

The authors also asked participants about their preferred mode of exam preparation. As Figure 15 shows, the Test and Train modes were the most popular options (70.6% and 66.7%, respectively).

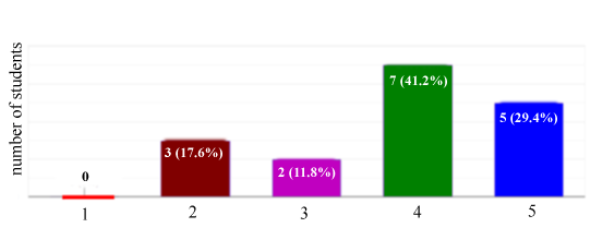

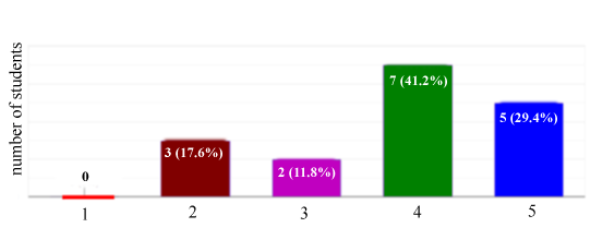

Figures 16 and 17 show students’ responses to the following questions: “To what extent did the system help you prepare for the class exam?” (Figure 16) and “To what extent was the operation of the system simple and clear?” (Figure 17). In both diagrams, the horizontal axis shows the rating values.

Figure 16: Rate of helpfulness [31]

Figure 17: Rating of clarity of the system [31]

The results show that students felt the system helped them considerably in preparing for the exam and found it simple and clear to use.

The use of the Halomda platform has been accompanied by increased student interest and engagement in the subject, as well as significant improvements in learning outcomes.

5. Conclusion

This paper analyzes the best-known e-learning and e-assessment systems in higher mathematics and highlights the limitations of each. To address the needs of both students and teachers, Halomda Educational Software has developed Math-Xpress, a versatile, user-friendly, and reliable math platform. Math-Xpress offers several modes of interaction with students, including Learn, Train, and Test. Its integration into the Moodle LMS is a useful feature that helps teachers with routine test grading.

To facilitate math students’ communication with ChatGPT, the latest version of the Halomda platform integrates it as a new built-in feature, together with Graph-Men for plotting and exploring graphs, the Algebraic Calculator for equation solving and symbolic evaluation, and recommended Key Prompts for various topics across several subjects. The authors believe that dialogue with ChatGPT through properly formulated prompts can become a new and effective form of self-learning.

Finally, the survey results show a good correlation between final exam scores (the exams were proctored and held on campus) and weekly assignment scores. This points to the advantages of using the Halomda platform for e-learning and e-assessment, which can be seen in two directions: students’ ability to learn from feedback, which significantly enhances their learning activities (more than 70% of students rated the usefulness of the system at 4 or 5 points), and the ability to get help from ChatGPT and compare its responses with the other functions of the Halomda platform.

Further development of the system and the ideas behind it will focus on rapidly evolving AI tools, particularly their ability to solve problems in different areas of mathematics. A study on the use of ChatGPT by students at three teachers’ colleges has begun and will be presented soon.

- P.E. Rawlusyk, “Assessment in Higher Education and Student Learning”, Journal of Instructional Pedagogies, vol. 21, pp. 1-34, 2018. https://bit.ly/2vPtbzT.

- J. Biggs, C. Tang, Teaching for Quality Learning at University: What the student does, (3rd ed). Maidenhead: McGraw-Hill, 2007.

- G. Brown, J. Bull, M. Pendlebury, Assessing student learning in higher education, London: Routledge, 1997.

- R. De Villiers, J. Scott-Kennel, R. Larke, “Principles of effective e-assessment: A proposed framework”, Journal of International Business Education, vol. 11, pp. 65–92, 2016.

- M. Greenhow, “Embedding e-assessment in an effective way”, MSOR Connections, vol. 17, no. 3, 2019, journals.gre.ac.uk.

- B. Eager, R. Brunton, “Prompting Higher Education Towards AI-Augmented Teaching and Learning Practice”, Journal of University Teaching & Learning Practice, vol. 20, no. 5, 2023, https://doi.org/10.53761/1.20.5.02.

- P. Drijvers, “Digital assessment of mathematics: Opportunities, issues and criteria”, Mesure et évaluation en éducation, vol. 41, no. 1, pp. 41–66, 2018, https://doi.org/10.7202/1055896ar.

- H. Mellar et al., “Addressing cheating in e-assessment using student authentication and authorship checking systems: teachers’ perspectives”, Int. Journal for Educational Integrity, vol. 14, no. 2, 2018, https://doi.org/10.1007/s40979-018-0025-x.

- R. Peytcheva-Forsyth, L. Aleksieva, B. Yovkova, “The Impact of Technology on Cheating and Plagiarism in the Assessment – the teachers’ and students’ perspectives”, AIP Conference Proceedings 2048, 020037, 2018, https://doi.org/10.1063/1.5082055.

- J. Dermo, “E-assessment and the student learning experience: A survey of student perceptions of e-assessment”, British Journal of Educational Technology, vol. 40, no. 2, pp. 203–214, 2009, https://doi.org/10.1111/j.1467-8535.2008.00915.x.

- S. Kocdar, A. Karadeniz, R. Peytcheva-Forsyth, and V. Stoeva, “Cheating and Plagiarism in E-Assessment: Students’ Perspectives”, Open Praxis, vol. 10, no. 3, pp. 221–235, July–September 2018 (ISSN 2304-070X).

- M. E. Rodríguez, A.-E. Guerrero-Roldán, D. Baneres, I. Noguera, “Students’ Perceptions of and Behaviors Toward Cheating in Online Education”, IEEE Revista Iberoamericana De Tecnologias Del Aprendizaje, vol. 16, no. 2, May 2021, doi: 10.1109/RITA.2021.3089925.

- A. Vegendla, G. Sindre, “Mitigation of Cheating in Online Exams: Strengths and Limitations of Biometric Authentication”, Biometric Authentication in Online Learning Environments, 2019, DOI: 10.4018/978-1-5225-7724-9.ch003.

- J. M. Bartley, “Assessment is as assessment does: A conceptual framework for understanding online assessment and measurement”, in Online Assessment and Measurement: Foundations and Challenges, IGI Global, 2005, pp. 1–45.

- S. Stacey, “Cheating on your college essay with ChatGPT”, Business Insider, https://www.businessinsider.com/professors-say-chatgpt-wont-kill-college-essays-make-education-fairer-2022-12.

- M. Ivanova, S. Bhattacharjee, S. Marcel, A. Rozeva, M. Durcheva, “Enhancing Trust in eAssessment – the TeSLA System Solution”, 21st Conf. on e-assessment (TEA2018), 10–11 Dec. 2018, Amsterdam, The Netherlands, Conf. Proc., https://www.teaconference.org/, arXiv:1905.04985.

- M. Durcheva et al., “Innovations in teaching and assessment of engineering courses, supported by authentication and authorship analysis system”, The 45th conference AMEE’19, AIP Conf. Proc. 2172, 040004, 2019, https://doi.org/10.1063/1.5133514.

- J. M. Azevedo, E. P. Oliveira, P. D. Beites, “How Do Mathematics Teachers in Higher Education Look at E-assessment with Multiple-Choice Questions”, International Conference on Computer Supported Education, 2017.

- S. Zegowitz, “Evaluating the use of e-assessment in a first-year pure mathematics module”, Preprint, 2022, https://arxiv.org/pdf/1908.01028.pdf.

- S. Trenholm, “An investigation of assessment in fully asynchronous online math courses”, The International Journal for Educational Integrity, vol. 3, no. 2, 2007, DOI: 10.21913/IJEI.V3I2.165.

- STACK, https://stack-assessment.org.

- WebAssign, https://webassign.com.

- WeBWorK, https://webwork.maa.org.

- Numbas, https://www.numbas.org.uk.

- C. J. Sangwin, Computer Aided Assessment of Mathematics. Oxford: Oxford University Press, 2013.

- D. Serhan and F. Almeqdadi, “Students’ perceptions of using MyMathLab and WebAssign in mathematics classroom”, International Journal of Technology in Education and Science (IJTES), vol. 4, no. 1, pp. 12–17, 2020.

- A. G. d’Entremont, P. J. Walls, P. A. Cripton, “Student Feedback and Problem Development for WeBWorK in a Second-Year Mechanical Engineering Program”, Proc. 2017 Canadian Engineering Education Association (CEEA17) Conf., 2017.

- V. Challis, R. Cook, and P. Anand, “Priming Numbas for formative assessment in a first-year mathematics unit”, in S. Gregory, S. Warburton, M. Schier (Eds.), Back to the Future – ASCILITE ‘21, Proc. ASCILITE 2021 in Armidale, pp. 313–318, 2021, https://doi.org/10.14742/ascilite2021.0145.

- Halomda, https://halomda.org.

- S. Kornstein, “Xpress Formula Editor and Symbolic Calculator”, Mathematics Teacher, vol. 94, no. 5, May 2001.

- P. Slobodsky, M. Durcheva, “E-assessment of Mathematics Courses using the Halomda Platform”, AIP Conf. Proc. 2939, 050011, 2023, https://doi.org/10.1063/5.0178514.

- Y. Wardat, M. Tashtoush, R. AlAli, A. Jarrah, “ChatGPT: A revolutionary tool for teaching and learning mathematics”, Eurasia Journal of Mathematics, Science and Technology Education, vol. 19, no. 7, em2286, 2023, https://doi.org/10.29333/ejmste/13272.

- S. Zhao, Y. Shen, Z. Qi, “Research on ChatGPT-Driven Advanced Mathematics Course”, Academic Journal of Mathematical Sciences, vol. 4, no. 5, 2023, doi: 10.25236/AJMS.2023.040506.

- N. Rane, “Enhancing Mathematical Capabilities through ChatGPT and Similar Generative Artificial Intelligence: Roles and Challenges in Solving Mathematical Problems”, SSRN, 2023, http://dx.doi.org/10.2139/ssrn.4603237.

- J. Velásquez-Henao, C. J. Franco-Cardona, L. Cadavid-Higuita, “Prompt Engineering: a methodology for optimizing interactions with AI-Language Models in the field of engineering”, DYNA, vol. 90, no. 230, Especial Conmemoración 90 años, pp. 9–17, Nov. 2023, ISSN 0012-7353, https://doi.org/10.15446/dyna.v90n230.111700.

- P. Slobodsky, A. Shtarkman, A. Kouropatov, “The implementation of the e-training and e-assessment system Math-Xpress in the development and practical use of math courses at teachers’ colleges in Israel during the Corona times”, (Unpublished communication), 2022.